VLA CASA Imaging-CASA4.5.2

Revision as of 16:57, 22 April 2016

Imaging

This tutorial provides guidance on imaging procedures in CASA.

We will be using data taken with the Karl G. Jansky Very Large Array of the supernova remnant G055.7+3.4. The data were taken on August 23, 2010, in the first D-configuration for which the new wide-band capabilities of the WIDAR (Wideband Interferometric Digital ARchitecture) correlator were available. The 8-hour-long observation includes all available 1 GHz of bandwidth in L-band, from 1-2 GHz in frequency.

We will skip the calibration process in this guide, as examples of calibration can be found in several other guides, including the EVLA Continuum Tutorial 3C391 and the EVLA high frequency Spectral Line tutorial (IRC+10216).

A copy of the calibrated data (1.2GB) can be downloaded from http://casa.nrao.edu/Data/EVLA/SNRG55/SNR_G55_10s.calib.tar.gz

Your first step will be to unzip and untar the file in a terminal (before you start CASA):

tar -xzvf SNR_G55_10s.calib.tar.gz

Then start CASA as usual via the casa command, which brings up the IPython interface and launches the logger.

The CLEAN Algorithm

The CLEAN algorithm, developed by J. Högbom (1974), enabled the synthesis of complex objects, even with relatively poor Fourier (u,v)-plane coverage. Poor coverage occurs with partial earth rotation synthesis or with arrays composed of few antennas. The "dirty" image is formed by a simple Fourier inversion of the sampled visibility data, with each point on the sky being represented by a suitably scaled and centered PSF (Point Spread Function, or dirty beam, which is the Fourier inversion of the (u,v) coverage). CLEAN effectively interpolates from the measured (u,v) points across gaps in the (u,v) coverage. In short, it provides solutions to the convolution equation by representing radio sources by a number of point sources in an empty field. The brightest points are found by performing a cross-correlation between the dirty image and the PSF. The brightest parts are subtracted, and the process is repeated for the next brightest sources. Variants of CLEAN, such as multi-scale CLEAN, take into account extended kernels, which may be better suited for extended objects.
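To make the loop concrete, here is a minimal 1-D sketch of Högbom CLEAN in plain Python (no CASA needed). It is an illustration under simplifying assumptions, not CASA's implementation:

- the image and PSF are short lists;
- the PSF is assumed to peak at 1.0 in its central pixel;
- the peak is located directly in the residual, the usual shortcut for such a PSF, rather than through an explicit cross-correlation.

The function name `hogbom_clean` and its `gain`, `threshold` and `niter` arguments are our own choices for this example.

```python
def hogbom_clean(dirty, psf, gain=0.1, threshold=0.0, niter=1000):
    """Minimal 1-D Hogbom CLEAN (illustrative sketch).

    dirty: list of floats, the dirty image
    psf:   list of floats with odd length, peak 1.0 in the central pixel
    Returns (model, residual), both the same length as `dirty`.
    """
    n = len(dirty)
    half = len(psf) // 2
    residual = list(dirty)
    model = [0.0] * n
    for _ in range(niter):
        # Find the pixel with the largest absolute residual.
        peak = max(range(n), key=lambda i: abs(residual[i]))
        if abs(residual[peak]) <= threshold:
            break  # stopping criterion: flux threshold reached
        # Move a fraction (the loop gain) of the peak into the model ...
        flux = gain * residual[peak]
        model[peak] += flux
        # ... and subtract the correspondingly scaled, shifted PSF.
        for j, p in enumerate(psf):
            k = peak + j - half
            if 0 <= k < n:
                residual[k] -= flux * p
    return model, residual


# Usage: two point sources convolved with a small symmetric PSF.
n = 32
psf = [0.1, 0.5, 1.0, 0.5, 0.1]
sky = [0.0] * n
sky[10], sky[20] = 2.0, 1.0
dirty = [0.0] * n
for i, s in enumerate(sky):
    for j, p in enumerate(psf):
        k = i + j - len(psf) // 2
        if 0 <= k < n:
            dirty[k] += s * p

model, residual = hogbom_clean(dirty, psf, gain=0.1, threshold=1e-3)
print(model[10], model[20], max(abs(r) for r in residual))
```

With two well-separated point sources, the model converges to their fluxes, and the loop stops once the peak residual falls below the threshold.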

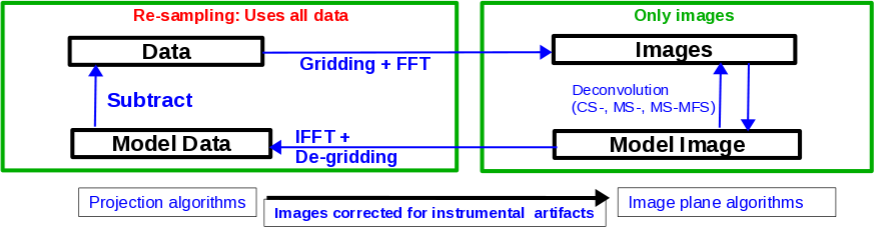

For single pointings, CASA uses the Cotton-Schwab cleaning algorithm in the task clean (imagermode='csclean'), which breaks the process into major and minor cycles (see Fig. 1). To start with, the visibilities are gridded, weighted, and Fourier transformed to create a (dirty) image. The minor cycle then operates in the image domain to find the clean components that are added to the clean model. The clean model is then Fourier transformed back to the visibility domain, degridded, and subtracted from the visibilities. This creates new residual visibilities that are gridded, weighted, and FFT'ed again to the image domain for the next iteration. The gridding, FFT, degridding, and subtraction processes form the major cycle.

This iterative process is continued until a stopping criterion is reached, such as a maximum number of clean components, or a flux threshold in the residual image.
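The major/minor cycle structure can also be sketched in 1-D. This toy version illustrates the idea under stated assumptions and is not the csclean code:

- the "visibilities" are exact DFT samples of a 1-D sky at a chosen set of integer frequencies;
- gridding and degridding are replaced by direct (inverse) DFTs;
- the minor cycle subtracts only a truncated PSF patch, similar in spirit to the speed-up behind psfmode='clark' described below;
- the minor cycle stops at a fraction of its starting peak, so the next major cycle must correct that approximation against the visibilities.

All function and parameter names (`degrid`, `grid_and_invert`, `cotton_schwab`, `cycle_fraction`, `patch`, `max_major`) are hypothetical.

```python
import cmath
import math


def degrid(image, uvals):
    """Model visibilities: exact DFT of a 1-D image at the sampled frequencies."""
    n = len(image)
    return {u: sum(val * cmath.exp(-2j * math.pi * u * x / n)
                   for x, val in enumerate(image) if val != 0.0)
            for u in uvals}


def grid_and_invert(vis, n):
    """Image from sampled visibilities (direct inverse DFT, real part)."""
    m = len(vis)
    return [sum((val * cmath.exp(2j * math.pi * u * x / n)).real
                for u, val in vis.items()) / m
            for x in range(n)]


def cotton_schwab(vis, n, gain=0.1, threshold=1e-3, niter=1000,
                  cycle_fraction=0.2, patch=8, max_major=20):
    uvals = list(vis)
    psf = grid_and_invert({u: 1.0 for u in uvals}, n)  # peak 1.0 at pixel 0
    model = [0.0] * n
    iters = nmajor = 0
    while nmajor < max_major:
        # Major cycle: subtract the model from the visibilities, then re-image.
        model_vis = degrid(model, uvals)
        residual = grid_and_invert({u: vis[u] - model_vis[u] for u in uvals}, n)
        nmajor += 1
        peak0 = max(abs(r) for r in residual)
        if peak0 <= threshold or iters >= niter:
            break
        # Minor cycle: CLEAN with a truncated PSF patch, stopped early so the
        # next major cycle can correct the errors of that approximation.
        stop = max(threshold, cycle_fraction * peak0)
        while iters < niter:
            p = max(range(n), key=lambda i: abs(residual[i]))
            if abs(residual[p]) <= stop:
                break
            flux = gain * residual[p]
            model[p] += flux
            for off in range(-patch, patch + 1):
                residual[(p + off) % n] -= flux * psf[off % n]
            iters += 1
    return model, residual, nmajor


# Usage: two point sources observed at 41 of 64 possible frequencies.
n = 64
sky = [0.0] * n
sky[10], sky[30] = 1.0, 0.5
vis = degrid(sky, range(-20, 21))
model, residual, nmajor = cotton_schwab(vis, n)
print(nmajor, round(model[10], 3), round(model[30], 3))
```

The design choice mirrors the text. The cheap, approximate subtraction happens in the image domain. The exact subtraction of the model happens in the visibility domain, once per major cycle.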

In CASA clean, two minor-cycle variants can be selected with the parameter psfmode. psfmode='hogbom' uses the full-sized PSF for subtraction, which is thorough but slow, while psfmode='clark' uses a smaller beam patch, which increases the speed. The patch size and the length of the minor cycle are chosen internally such that clean converges well without giving up the speed improvement. Clark is thus the default option in clean.

In a final step, clean derives a Gaussian fit to the inner part of the PSF, which defines the clean beam. The clean model is then convolved with the clean beam and added to the last residual image to create the final image.
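The restoring step can be sketched in plain Python as follows. As a simplification of the Gaussian fit described above, the hypothetical helper `main_lobe_fwhm` estimates the clean-beam FWHM from the half-maximum crossing of the PSF main lobe. The hypothetical `restore` then convolves the model with a unit-peak Gaussian of that width and adds the residual. With a unit-peak beam, a point source of flux S appears with a peak of S, i.e. the image is in Jy/clean beam.

```python
import math


def main_lobe_fwhm(psf, center):
    """FWHM (pixels) of the PSF main lobe from its half-maximum crossing.

    A simplification of the Gaussian fit to the inner PSF described above.
    """
    half = psf[center] / 2.0
    i = center
    while psf[i + 1] > half:
        i += 1
    # Linearly interpolate the crossing between pixels i and i + 1.
    frac = (psf[i] - half) / (psf[i] - psf[i + 1])
    return 2.0 * (i - center + frac)


def restore(model, residual, fwhm):
    """Convolve the model with a unit-peak Gaussian clean beam, add the residual."""
    sigma = fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    image = list(residual)
    for x, flux in enumerate(model):
        if flux == 0.0:
            continue
        for k in range(len(image)):
            image[k] += flux * math.exp(-0.5 * ((k - x) / sigma) ** 2)
    return image


# Usage: a Gaussian-shaped PSF with a 4-pixel FWHM and a 2 Jy point-source model.
psf = [math.exp(-4 * math.log(2) * ((x - 10) / 4.0) ** 2) for x in range(21)]
beam = main_lobe_fwhm(psf, 10)
model = [0.0] * 41
model[20] = 2.0
image = restore(model, [0.0] * 41, beam)
print(round(beam, 3), image[20])
```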

Note that the CASA team is currently developing a refactored clean task, called tclean. It has a better interface and provides new algorithms as well as more combinations of imaging algorithms. tclean also includes software to parallelize the computations in a multi-processor environment. Eventually, tclean will replace the current clean task. For this guide, however, we will stick with the original clean task, as tclean is still in the development and testing phase. Nevertheless, the reader is encouraged to try tclean and send us feedback through the NRAO helpdesk.

For more details on imaging and deconvolution, we refer to the Astronomical Society of the Pacific Conference Series book entitled Synthesis Imaging in Radio Astronomy II. The chapter on Deconvolution may prove helpful. In addition, imaging presentations are available on the Synthesis Imaging Workshop and VLA Data Reduction Workshop webpages. The CASA cookbook chapter on Synthesis Imaging provides a wealth of information on the CASA implementation of clean and related tasks.

Finally, we refer you to the VLA Observational Status Summary and the Guide to Observing with the VLA for information on the VLA capabilities and observing strategies.

Weights and Tapering

When imaging data, a map is created that associates the visibilities with the image. The sampling function, which is a function of the visibilities, is modified by a weight function that defines the shape and size of the PSF. Weighting therefore provides some control over the spatial resolution and the surface brightness sensitivity of the map, allowing either one to be emphasized.

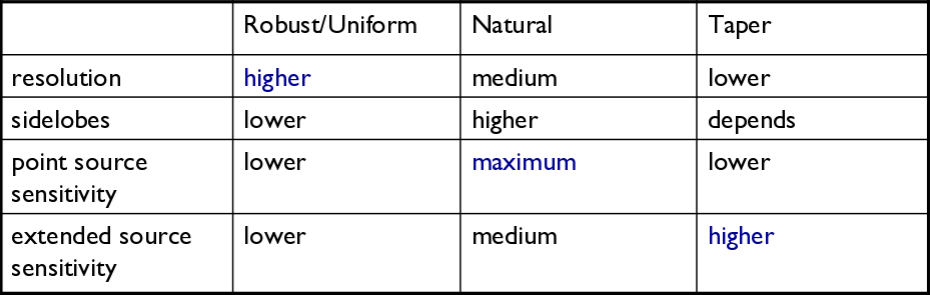

There are three main weighting schemes (see also Table 1):

1) Natural weighting: Natural weighting weights the data based on their rms only. All visibility weights in a cell are summed, so more visibilities in a cell increase the cell's weight. Because the short baselines sample the uv-plane most densely, this emphasizes the shorter baselines. Natural weighting therefore results in a larger PSF and better surface brightness sensitivity, but also in degraded resolution.

2) Uniform weighting: The weights are first gridded as in natural weighting, but then each cell is corrected such that the weights are independent of the number of visibilities inside. Compared to natural weighting, uniform weighting emphasizes the longer baselines. Consequently, the PSF is smaller, which results in a better spatial resolution of the image. At the same time, however, the surface brightness sensitivity is reduced compared to natural weighting. Uniform weighting of the baselines gives a more even representation of the uv-coverage, and sidelobes are more strongly suppressed.

3) Briggs weighting: This scheme provides a compromise between natural and uniform weighting. The transition can be controlled with the robust parameter, where robust=-2 is close to uniform and robust=2 is close to natural weighting. Briggs weighting therefore offers a compromise between spatial resolution and surface brightness sensitivity, and robust values near zero are typically used.
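The three schemes can be sketched on gridded weights in plain Python. The sketch makes these assumptions:

- each visibility has already been assigned to a uv-grid cell, represented here by an arbitrary label;
- each visibility carries a data weight such as 1/sigma^2.

The Briggs branch uses the robust formulation as we understand it from the CASA documentation: f^2 = (5*10^-R)^2 / (sum_k W_k^2 / sum_i w_i), with W_k the summed weight in cell k and R the robust value. Treat the finer details of CASA's gridding and normalization as assumptions. `imaging_weights` and `cell_totals` are hypothetical helpers.

```python
from collections import defaultdict


def imaging_weights(cells, weights, scheme="natural", robust=0.0):
    """Imaging weights for visibilities already assigned to uv-grid cells.

    cells[i]:   label of the uv cell visibility i falls into (hypothetical)
    weights[i]: data weight of visibility i, e.g. 1 / sigma**2
    """
    cell_sum = defaultdict(float)  # W_k: summed data weight per cell
    for c, w in zip(cells, weights):
        cell_sum[c] += w
    if scheme == "natural":
        return list(weights)
    if scheme == "uniform":
        return [w / cell_sum[c] for c, w in zip(cells, weights)]
    if scheme == "briggs":
        f2 = (5.0 * 10.0 ** (-robust)) ** 2 / (
            sum(W * W for W in cell_sum.values()) / sum(weights))
        return [w / (1.0 + cell_sum[c] * f2) for c, w in zip(cells, weights)]
    raise ValueError("unknown weighting scheme: " + scheme)


def cell_totals(cells, wts):
    """Total imaging weight per cell."""
    totals = defaultdict(float)
    for c, w in zip(cells, wts):
        totals[c] += w
    return dict(totals)


# Toy data: nine visibilities in one dense short-baseline cell, one long one.
cells = ["short"] * 9 + ["long"]
weights = [1.0] * 10
for scheme, robust in [("natural", 0.0), ("uniform", 0.0),
                       ("briggs", 2.0), ("briggs", -2.0)]:
    t = cell_totals(cells, imaging_weights(cells, weights, scheme, robust))
    print(scheme, robust, round(t["short"] / t["long"], 3))
```

The ratio of the two cell totals shows how each scheme behaves:

- natural weighting gives the short-baseline cell nine times the weight of the long one;
- uniform weighting equalizes the two cells;
- Briggs weighting moves from near-natural (robust=2) to near-uniform (robust=-2).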

Tapering: In conjunction with the above weighting schemes, one can specify the uvtaper parameter within clean, which controls the radial weighting of visibilities in the uv-plane. In effect, this downweights visibilities, with weights decreasing as a function of uv-radius. The taper in clean is an elliptical Gaussian function. Tapering smooths the image plane and gives more weight to short baselines, but in turn degrades angular resolution. This increases the surface brightness sensitivity of the data. Aggressive tapering, however, can downweight some of the antennas and baselines to a degree where they are essentially no longer considered. The point source sensitivity will thus be decreased, and in extreme cases the surface brightness sensitivity will suffer, too.
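A minimal sketch of the effect of a taper on visibility weights, in plain Python. It assumes a circular Gaussian, a simplification of clean's elliptical taper. It also assumes a taper parameterized by a FWHM in the uv-plane, so the factor drops to 0.5 at a uv-radius of half that FWHM. The function name `gaussian_taper` and the 2000-wavelength value are hypothetical and do not correspond to a clean parameter.

```python
import math


def gaussian_taper(u, v, fwhm_uv):
    """Circular Gaussian uv-taper factor; 0.5 at uv-radius fwhm_uv / 2.

    u, v and fwhm_uv share the same units (e.g. wavelengths). A circular
    taper is a simplification of clean's elliptical Gaussian.
    """
    r2 = u * u + v * v
    return math.exp(-4.0 * math.log(2.0) * r2 / fwhm_uv ** 2)


# Usage: multiply a unit weight by a hypothetical 2000-wavelength FWHM taper.
for uv_radius in (0.0, 500.0, 1000.0, 2000.0):
    print(uv_radius, round(1.0 * gaussian_taper(uv_radius, 0.0, 2000.0), 3))
```

Long baselines are progressively suppressed, which is what broadens the synthesized beam. An aggressive taper (small FWHM) drives the weights of the outer baselines toward zero.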

We refer to the CASA Cookbook Synthesis Imaging chapter for the details of the weighting implementation in CASA's clean.

Primary and Synthesized Beam

The primary beam of a single antenna defines the sensitivity across the field of view. For the VLA antennas, the main beam can be approximated by a Gaussian with a FWHM of $90\,\lambda_{\rm cm}$ arcsec or, equivalently, $45/\nu_{\rm GHz}$ arcmin. Note, however, that there are sidelobes beyond the Gaussian kernel that are sensitive to bright sources (see below). Taking our observed frequency to be the middle of the band, 1.5 GHz, our primary beam FWHM will be around 30 arcmin.
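The two forms of the primary-beam approximation can be checked against each other in plain Python. The formulas come from the text. The function names and the speed-of-light constant used to convert frequency to wavelength are ours.

```python
C_CM_PER_S = 2.99792458e10  # speed of light in cm/s


def vla_pb_fwhm_arcmin(freq_ghz):
    """Approximate VLA primary-beam FWHM in arcmin: 45 / nu_GHz."""
    return 45.0 / freq_ghz


def vla_pb_fwhm_arcsec_from_wavelength(wavelength_cm):
    """The same approximation expressed via wavelength: 90 * lambda_cm arcsec."""
    return 90.0 * wavelength_cm


freq_ghz = 1.5                                 # band centre used in the text
wavelength_cm = C_CM_PER_S / (freq_ghz * 1e9)  # about 20 cm
print(vla_pb_fwhm_arcmin(freq_ghz))                               # 30.0
print(vla_pb_fwhm_arcsec_from_wavelength(wavelength_cm) / 60.0)   # close to 30
print(vla_pb_fwhm_arcmin(1.0) / vla_pb_fwhm_arcmin(2.0))          # 2.0 across L-band
```

The last line anticipates a point made later in the guide: across 1-2 GHz, the primary beam size changes by a factor of 2.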

If your science goal is to image a source or field of view that is significantly larger than the FWHM of the VLA primary beam, then creating a mosaic from a number of pointings is usually the preferred method. For a tutorial on mosaicking, see the 3C391 tutorial. In the following, however, we will discuss methods to image large maps from single-pointing data.

Since our observation was taken in D-configuration, we can check the Observational Status Summary's section on VLA resolution to find that the synthesized beam will be around 46 arcsec. The minimum number of pixels to describe the beam is 2.4 (Nyquist criterion), but it is generally advised to somewhat oversample the synthesized beam. Here, we will oversample by a factor of 2 and use at least 5 pixels across the synthesized beam (46/5 ≈ 9 arcsec), which leads us to adopt a cell (pixel) size of 8 arcsec.
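The cell-size arithmetic above can be written out in plain Python. The 46 arcsec beam and the 2.4-pixel Nyquist value come from the text. The helper names are hypothetical.

```python
NYQUIST_PIXELS_PER_BEAM = 2.4  # minimum sampling quoted in the text


def max_cell_arcsec(beam_arcsec, pixels_per_beam):
    """Largest cell size that still puts `pixels_per_beam` pixels across the beam."""
    return beam_arcsec / pixels_per_beam


def pixels_per_beam(beam_arcsec, cell_arcsec):
    """Number of pixels across the synthesized beam for a given cell size."""
    return beam_arcsec / cell_arcsec


beam = 46.0                              # D-configuration, L-band (from the OSS)
target = 2 * NYQUIST_PIXELS_PER_BEAM     # oversample by 2 -> 4.8, i.e. about 5
print(max_cell_arcsec(beam, 5))          # 9.2 arcsec upper limit
print(pixels_per_beam(beam, 8.0))        # 5.75 pixels with the adopted 8 arcsec
print(pixels_per_beam(beam, 8.0) >= target)  # True
```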

Our field contains bright point sources significantly outside the primary beam. The VLA (in particular when using multi-frequency synthesis, see below) has significant sensitivity outside the main lobe of the primary beam. Sources located outside the primary beam may still be bright enough to create PSF sidelobes that reach into the main target field, in particular at the lower VLA frequencies. Such sources need to be cleaned to remove the PSF artifacts across the entire image. This can be done either by creating a very large image or by using outlier fields centered on the strongest sources (see the section on outlier fields below). A large image has the added advantage of increasing the field of view for science (albeit at lower sensitivity). However, other effects, such as the non-coplanarity of the sky, then start to become significant. Large image sizes will also slow down the deconvolution process.

We create images of 1280 x 1280 pixels, which are about 170 arcminutes on a side (image size * cell size / 60 arcsec per arcmin = 1280 * 8 arcsec / 60 ≈ 170.7 arcmin). That is almost 6x the size of the primary beam, catching the first and second sidelobes. This is ideal for showcasing both the problems inherent in such wide-band, wide-field imaging and some of the solutions currently available in CASA to deal with these issues. Note that the execution time of clean depends on the image size; large images generally take more computing time. Some image sizes, however, are computationally inefficient, and in that case the logger output will recommend the next larger but faster image size. As a rule of thumb, we recommend image sizes of $2^n \times 10$ pixels, e.g. 160, 1280, etc.
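The image-extent arithmetic and the $2^n \times 10$ rule of thumb can be sketched in plain Python. The 30 arcmin primary beam is the value derived earlier in this guide. The function names are hypothetical. The logger's own size recommendation may follow other criteria.

```python
def image_extent_arcmin(imsize_pixels, cell_arcsec):
    """Image side length in arcmin: imsize * cell / (60 arcsec per arcmin)."""
    return imsize_pixels * cell_arcsec / 60.0


def suggested_imsizes(max_pixels):
    """Sizes of the form 2**n * 10 (the rule of thumb above) up to max_pixels."""
    sizes, n = [], 0
    while 2 ** n * 10 <= max_pixels:
        sizes.append(2 ** n * 10)
        n += 1
    return sizes


extent = image_extent_arcmin(1280, 8.0)
print(round(extent, 1), "arcmin")                  # 170.7 arcmin
print(round(extent / 30.0, 2), "x the 30 arcmin primary beam")
print(suggested_imsizes(2000))  # [10, 20, 40, 80, 160, 320, 640, 1280]
```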

Clean Output Images

As a result of the CLEAN algorithm, clean will create a number of output images. For an imagename='<imagename>', this would be:

<imagename>.image the residual + the model convolved with the clean beam. This is the final image (unit: Jy/clean beam).

<imagename>.residual the residual after subtracting the clean model (unit: Jy/dirty beam).

<imagename>.model the clean model, not convolved (unit: Jy/pixel).

<imagename>.psf the point-spread function, also known as the dirty beam.

<imagename>.flux the normalized sensitivity map. For single pointings this corresponds to the primary beam.

Additional images will be created for specific algorithms like multi-term frequency synthesis or mosaicking.

Note: If an image file is present in the working directory and the same name is provided in imagename, clean will use that image (in particular the residual and model image) as a starting point for further cleaning. If you want a fresh run of clean, first remove all images of that name using 'rmtables()':

# In CASA

rmtables('<imagename>.*')

This method is preferable to 'rm -rf' as it also clears the cache.

Note that interrupting clean with Ctrl+C may corrupt your visibilities, so you may be better off letting clean finish. We are currently implementing a command that exits cleanly to prevent this from happening, but for the moment, try to avoid Ctrl+C.

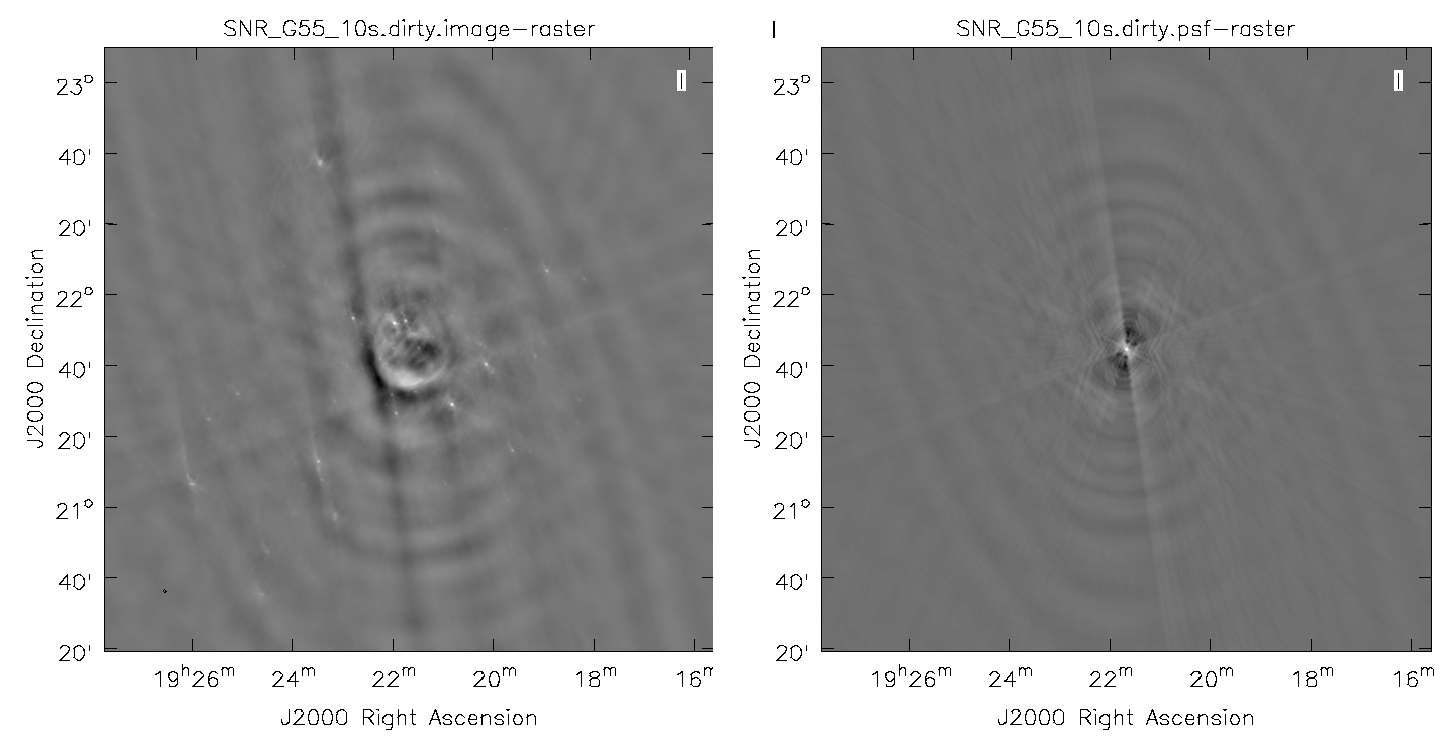

Dirty Image

First, we will create a dirty image (Fig. 3) to see the improvements as we step through several cleaning algorithms and parameters. The dirty image is the true image on the sky, convolved with the dirty beam (PSF). We will do this by running clean with niter=0, which will not perform any minor cycles.

# In CASA

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.dirty',
      imagermode='csclean', imsize=1280, cell='8arcsec',
      interactive=False, niter=0, stokes='I', usescratch=F)

viewer('SNR_G55_10s.dirty.image')

- imagermode='csclean': use the Cotton-Schwab clean algorithm

- imagename='SNR_G55_10s.dirty': the root filename used for the various clean outputs.

- imsize=1280: the image size in number of pixels. A single value will result in a square image.

- cell='8arcsec': the size of one pixel; again, entering a single value will result in a square pixel size.

- niter=0: this controls the number of iterations clean will do in the minor cycle. Since this is a dirty image, we set it to 0.

- interactive=False: we will let clean use the entire field for placing model components. Alternatively, you could try using interactive=True and create regions to constrain where components will be placed. However, this is a very complex field, and creating a region for every bit of diffuse emission as well as each point source can quickly become tedious. For a tutorial that covers interactive clean in more detail, please see the IRC+10216 tutorial.

- usescratch=F: do not write the model visibilities to the model data column (only needed for self-calibration)

- stokes='I': since we have not done any polarization calibration, we only create a total-intensity image. For using CLEAN with other Stokes parameters, please see the 3C391 CASA guide.

Note that the clean beam is only defined after some clean iterations. The dirty image therefore has no beam size specified in its header, and the PSF image represents the response of the array to a point source.

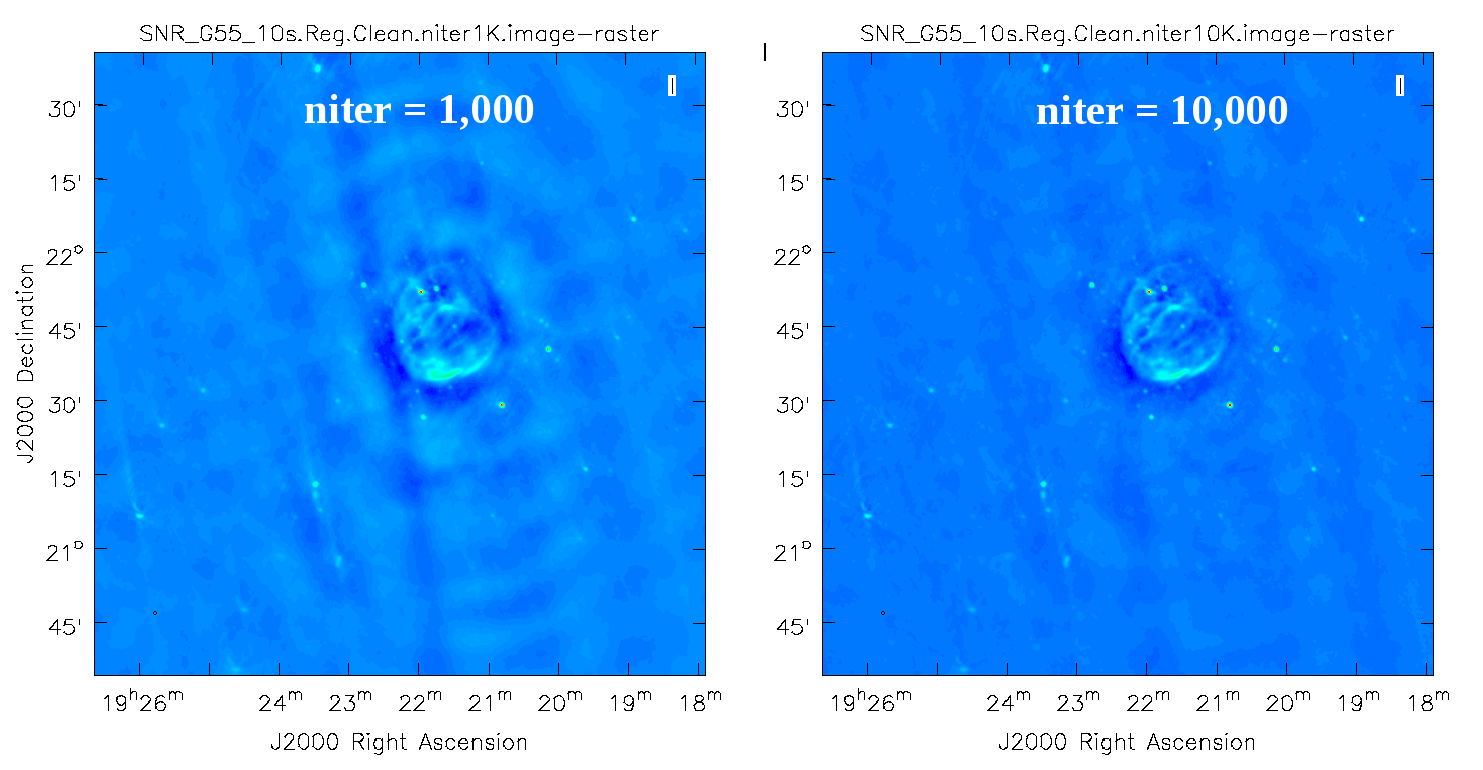

Regular CLEAN & RMS Noise

Now, we will create a regular clean image that uses mostly default values to see how deconvolution improves the image quality. The first run of clean will use a fixed number of minor cycle iterations of niter=1000 (the default is 500), and the second will use niter=10000. Note that you may have to adjust the image color map and brightness/contrast to get a better view of the image details.

# In CASA. Create default clean image.

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.Reg.Clean.niter1K',
      imsize=1280, cell='8arcsec', niter=1000, interactive=False)

viewer('SNR_G55_10s.Reg.Clean.niter1K.image')

The logger indicates that the image was obtained in two major cycles and some improvements over the dirty image are visible. But clearly we have not cleaned deep enough yet; the image still has many sidelobes, and an inspection of the residual image shows that it still contains source flux and structure. So let's increase the niter value to 10,000 and compare the images.

# In CASA. Create default clean image with niter = 10000

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.Reg.Clean.niter10K',
      imsize=1280, cell='8arcsec', niter=10000, interactive=False)

viewer('SNR_G55_10s.Reg.Clean.niter10K.image')

As we can see from the resulting images, increasing the niter value (the number of minor-cycle iterations) improves our image by significantly reducing prominent sidelobes. One could now further increase niter until the residuals are down to an acceptable level. When choosing the number of iterations, keep in mind that clean will fail to converge once it starts cleaning too deeply into the noise. At that point, the cleaned flux and the peak residual flux values will start to oscillate as the number of iterations increases. This effect can be monitored in the CASA logger output. To avoid cleaning too deeply, we will set a threshold parameter that stops the minor cycle iterations once the peak residual drops to a given value.

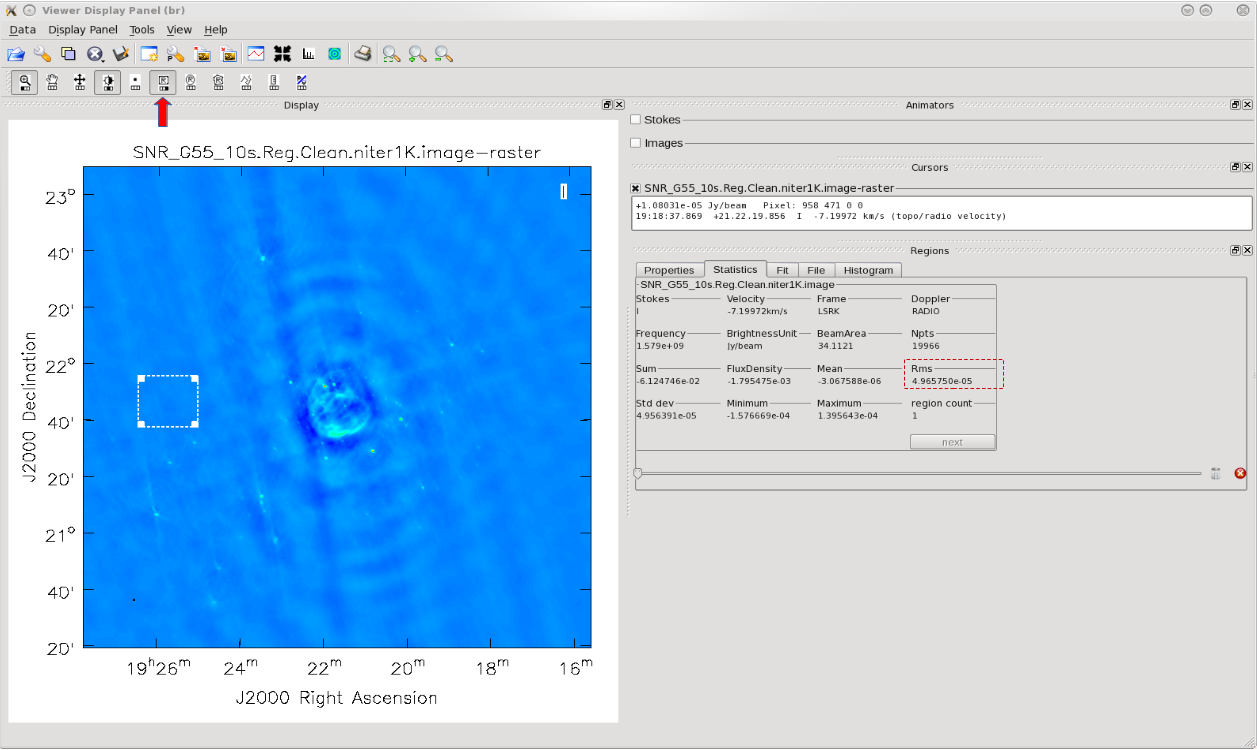

First, we will use the SNR_G55_10s.Reg.Clean.niter1K.image image to estimate the rms noise (your sigma value). With the image open in the viewer, click on the 'Rectangle Drawing' button (rectangle with R) and draw a square on the image at a position with little source or sidelobe contamination. This should open a "Regions" dock, which holds information about the region, including the selected pixel statistics in the aptly named "Statistics" tab. Note the rms values as you drag the box to empty image locations, or draw additional boxes at suitable positions.

The lowest rms value that we found was about 4E-5 Jy/beam, which we will use to calculate our threshold. There is no set standard, but reasonable threshold values typically lie between 2.0 and 4.0 sigma; using clean boxes (see the section on interactive cleaning) allows one to go to lower thresholds. For our purposes, we will choose a threshold of 2.5 sigma. Doing the math (2.5 * 4E-5 Jy/beam) results in a value of 1.0E-4 Jy/beam, or equivalently 0.10 mJy/beam. Therefore, for future calls to the clean task, we will set threshold='0.1mJy'. The clean cycle will be stopped when the residual peak flux is less than or equal to the threshold value, or when the maximum number of iterations niter is reached. To ensure that the stopping criterion is indeed the threshold, niter should be set to a very high number. In the following, we will nevertheless use niter=1000 to keep the execution times of clean low, as we focus on explaining different imaging methods.
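The threshold arithmetic can be scripted in plain Python. This is a sketch, assuming a list of pixel values taken from an emission-free box. The values below are hypothetical, not read from the actual image. Note that the viewer's reported rms may be computed slightly differently, e.g. about the mean; for a box with near-zero mean, the two agree closely. `region_rms` and `clean_threshold` are hypothetical helpers.

```python
import math


def region_rms(pixels):
    """Root-mean-square of pixel values (Jy/beam) in an emission-free box."""
    return math.sqrt(sum(p * p for p in pixels) / len(pixels))


def clean_threshold(sigma_jy, nsigma=2.5):
    """Format nsigma * sigma as a clean threshold string in mJy."""
    return "{:.2g}mJy".format(nsigma * sigma_jy * 1e3)


# Hypothetical pixel values from an empty region of the niter=1000 image.
box = [4e-5, -4e-5, 3e-5, -5e-5]
print(region_rms(box))
print(clean_threshold(4e-5))   # 0.1mJy, matching the value adopted above
```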

An alternative method to determine the approximate rms of an image is to use the VLA Exposure Calculator and to enter the observing conditions.

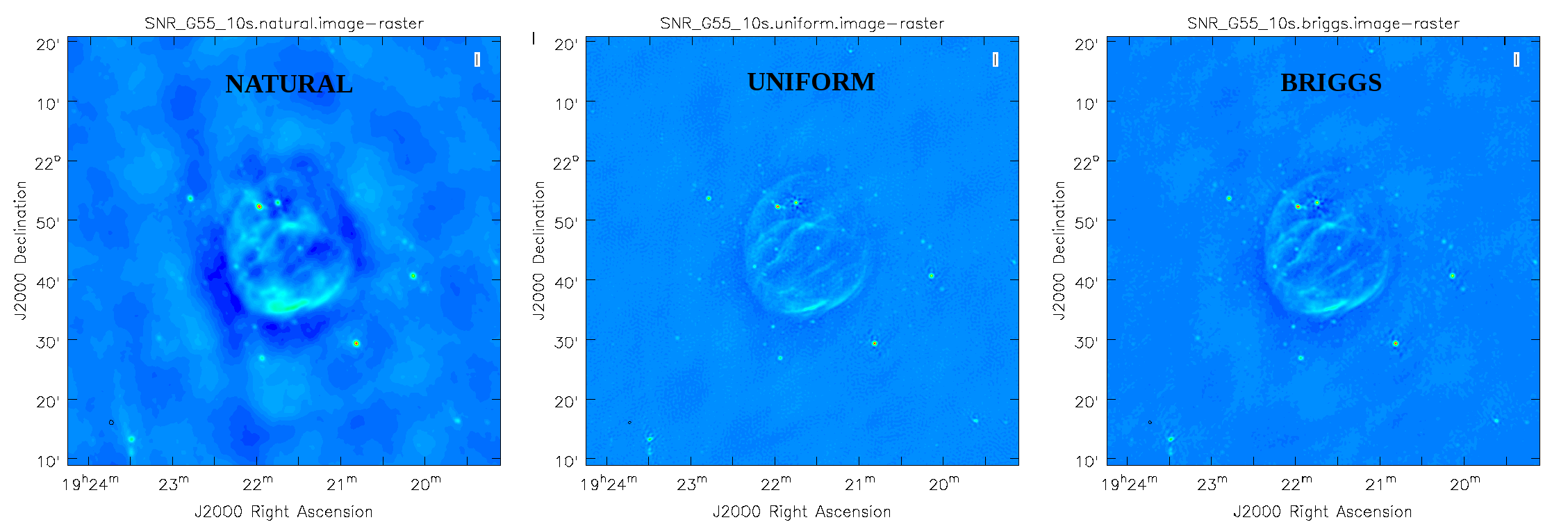

CLEAN with Weights

To see the effects of different weighting schemes on the image, let's change the weighting parameter within clean and inspect the resulting images. We will use the natural, uniform, and Briggs weighting schemes. Here, we have chosen a smaller image size to focus mainly on our science target.

# In CASA. Natural weighting

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.natural', weighting='natural',
      imsize=540, cell='8arcsec', niter=1000, interactive=False, threshold='0.1mJy')

# In CASA. Uniform weighting

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.uniform', weighting='uniform',
      imsize=540, cell='8arcsec', niter=1000, interactive=False, threshold='0.1mJy')

# In CASA. Briggs weighting, with robust set to default of 0.0

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.briggs', weighting='briggs',
      robust=0, imsize=540, cell='8arcsec', niter=1000, interactive=False, threshold='0.1mJy')

viewer()

- weighting: specification of the weighting scheme. For Briggs weighting, the robust parameter will be used.

- threshold='0.1mJy': threshold at which the cleaning process will halt.

In Fig. 6 we see that the naturally weighted image is most sensitive to extended emission (beam size of 46"x41"). The negative values around the extended emission (often referred to as a negative 'bowl') are a typical signature of missing short spacings, i.e. of extended emission on scales too large to be detected even by the shortest baseline. The uniformly weighted image shows the highest resolution (26"x25"), and Briggs weighting with robust=0 (the default value) is a compromise with a beam of 29"x29". To be more sensitive to the extended emission, the robust parameter could be tweaked further toward more positive values.

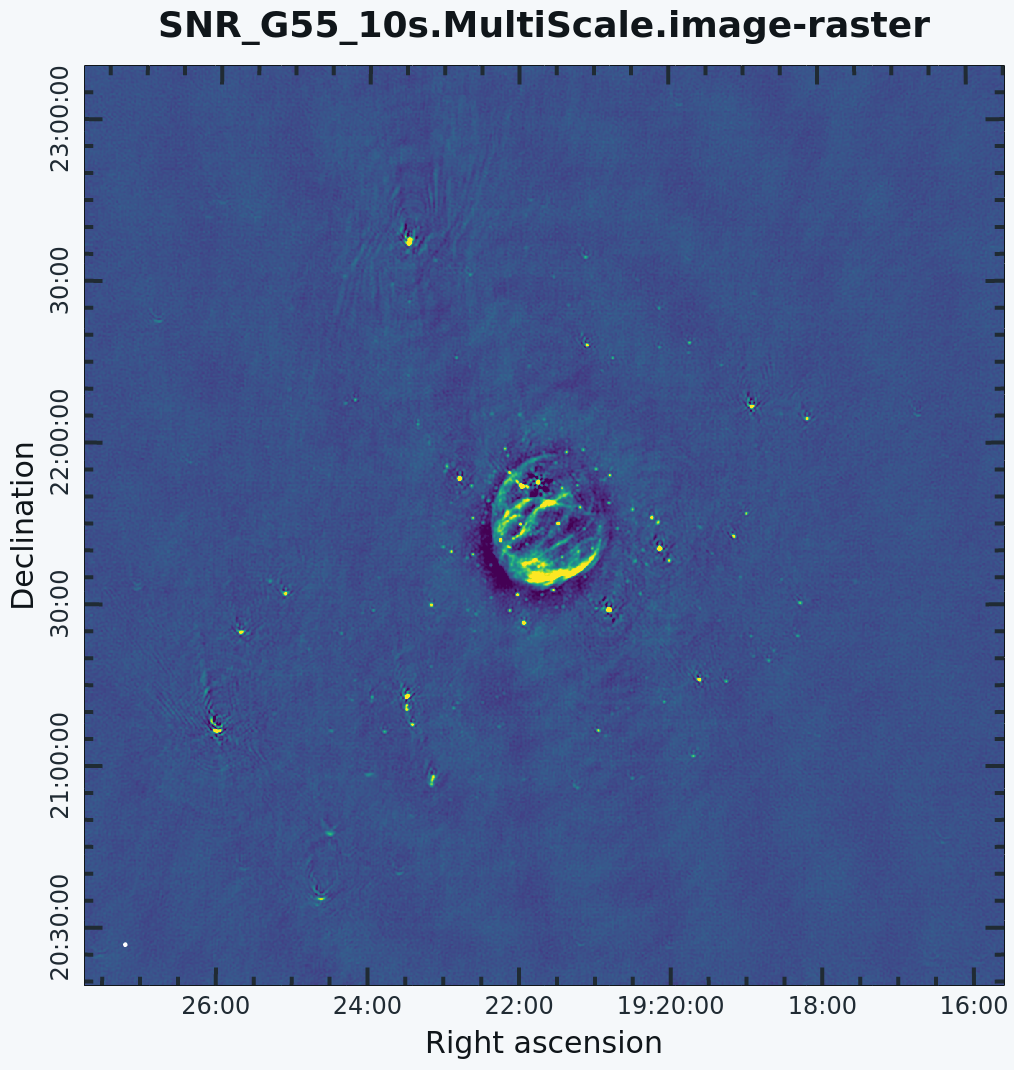

Multi-Scale CLEAN

Since G55.7+3.4 is an extended source with many spatial scales, a more advanced form of imaging involves the use of multiple scales. Multi-scale CLEAN (MS-CLEAN) is an extension of the classical CLEAN algorithm for handling extended sources. It assumes that the sky is composed of emission at different spatial scales and works on them simultaneously, thereby creating a linear combination of images at different spatial scales. For a more detailed description of multi-scale CLEAN, see the paper by T.J. Cornwell entitled Multi-Scale CLEAN deconvolution of radio synthesis images.

We will use a set of scales (which are expressed in units of the requested pixel, or cell, size) which are representative of the scales that are present in the data, including a zero-scale for point sources.

# In CASA

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.MultiScale',
      imsize=1280, cell='8arcsec', multiscale=[0,6,10,30,60], smallscalebias=0.9,
      interactive=False, niter=1000, weighting='briggs', stokes='I',
      threshold='0.1mJy', usescratch=F, imagermode='csclean')

viewer('SNR_G55_10s.MultiScale.image')

- multiscale=[0,6,10,30,60]: a set of scales on which to clean. A good rule of thumb when using multiscale is 0, 2xbeam, 5xbeam (where beam is the synthesized beam), and larger scales up to about half the largest scale of the mapped structure (measured along its minor axis). Since these scales are in units of the pixel size, our chosen values are multiplied by the requested cell size. Thus, we are requesting scales of 0 (a point source), 48, 80, 240, and 480 arcseconds (8 arcminutes). Note that 16 arcminutes (960 arcseconds) roughly corresponds to the size of G55.7+3.4.

- smallscalebias=0.9: This parameter is known as the small scale bias. It helps with faint extended structure by balancing the weight given to smaller structures, which tend to be brighter but have less flux density. Increasing this value gives more weight to smaller scales. A value of 1.0 weights the largest scale to zero, and a value of less than 0.2 weights all scales nearly equally. The default value is 0.6.
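A short plain-Python sketch of the two numbers discussed in this list. The first converts the pixel scales to arcsec. The second gives per-scale weights of the form 1 - smallscalebias * scale / largest_scale. That form comes from Cornwell's multi-scale CLEAN paper and is consistent with the behaviour described above (1.0 zeroes the largest scale; small values weight all scales nearly equally). Whether clean applies exactly this expression internally is an assumption. The function names are hypothetical.

```python
def scales_arcsec(scales_pixels, cell_arcsec):
    """Convert multiscale sizes from pixels to arcsec."""
    return [s * cell_arcsec for s in scales_pixels]


def scale_weights(scales_pixels, smallscalebias=0.6):
    """Assumed per-scale bias: 1 - smallscalebias * scale / largest_scale."""
    largest = max(scales_pixels)
    return [1.0 - smallscalebias * s / largest for s in scales_pixels]


scales = [0, 6, 10, 30, 60]
print(scales_arcsec(scales, 8))                            # [0, 48, 80, 240, 480]
print([round(w, 2) for w in scale_weights(scales, 0.9)])   # [1.0, 0.91, 0.85, 0.55, 0.1]
print([round(w, 2) for w in scale_weights(scales, 1.0)])   # largest scale -> 0.0
```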

The logger will show how much cleaning is performed on the individual scales.

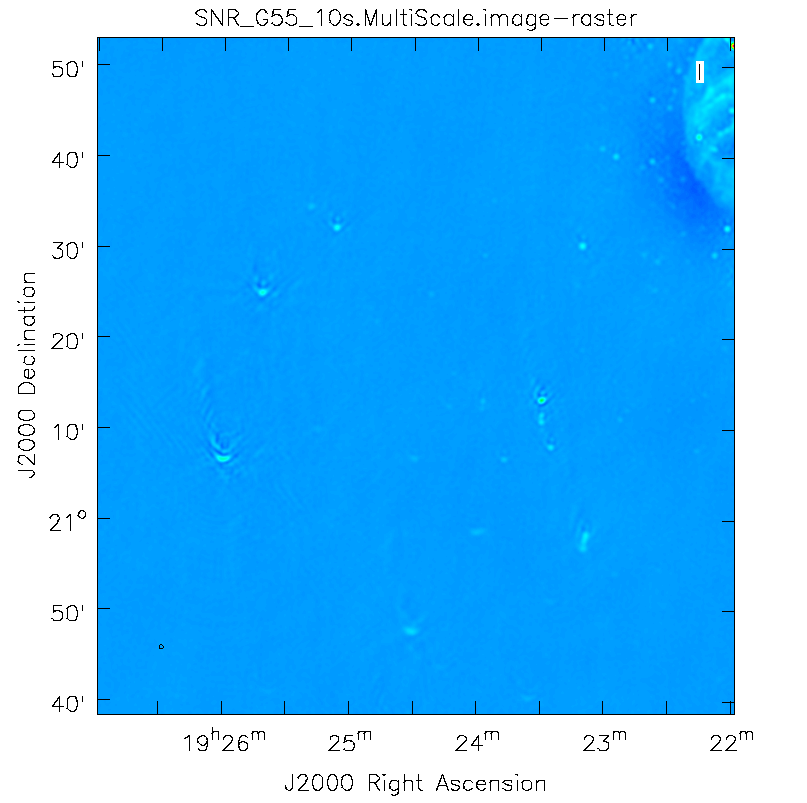

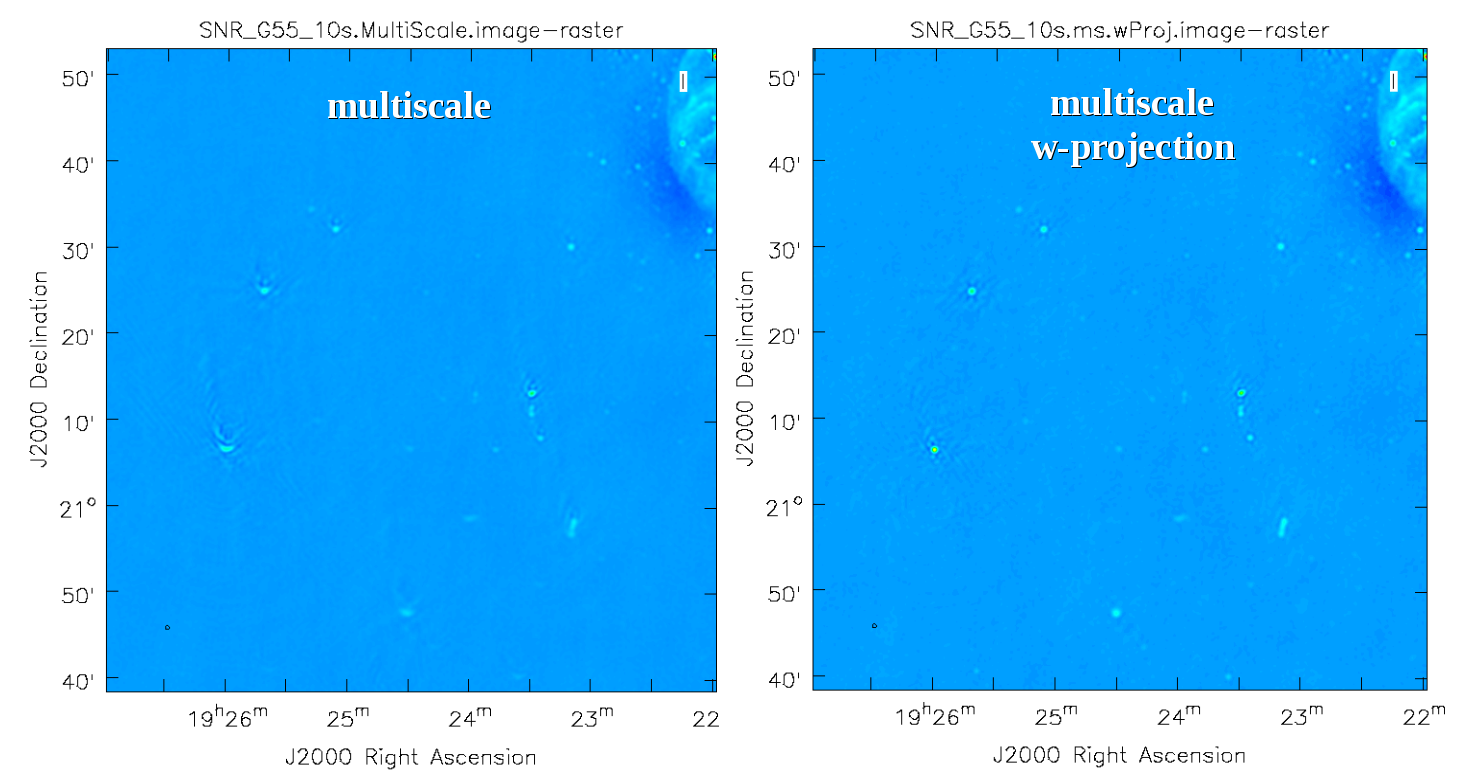

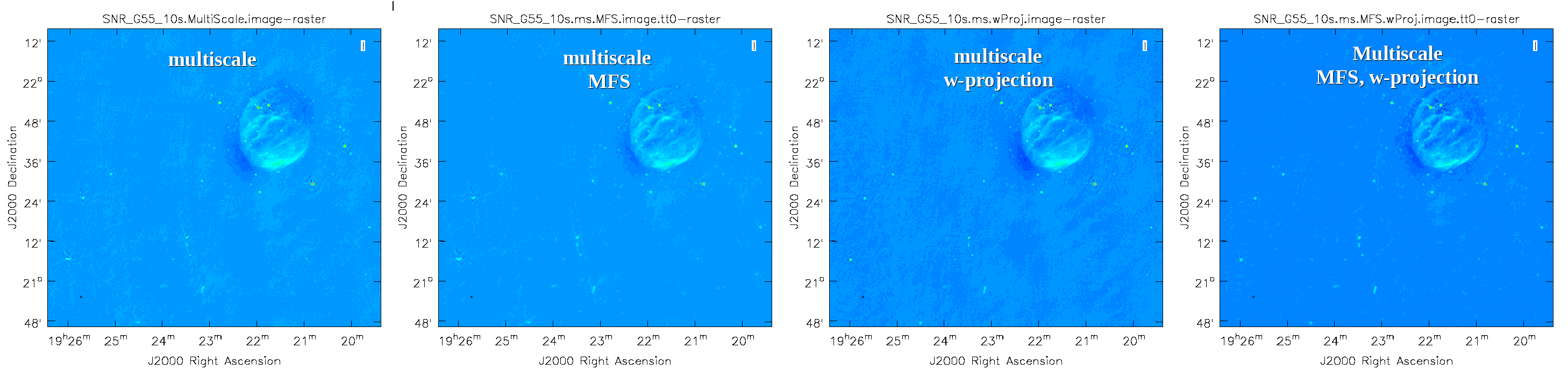

This is the fastest of the imaging techniques described here, but it's easy to see that there are artifacts in the resulting image (Fig. 7). We can use the viewer to explore the point sources near the edge of the field by zooming in on them (Fig. 8). Click on the "Zooming" button on the top left corner and highlight an area by making a square around the portion where you would like to zoom-in. Double clicking within the square will zoom-in to the selected area. The square can be resized by clicking/dragging the corners, or removed by pressing the "Esc" key. After zooming in on the area, we can see some radio sources have prominent arcs, as well as spots with a six-pointed pattern surrounding them.

Next we will explore some more advanced imaging techniques to mitigate the artifacts seen towards the edge of the image.

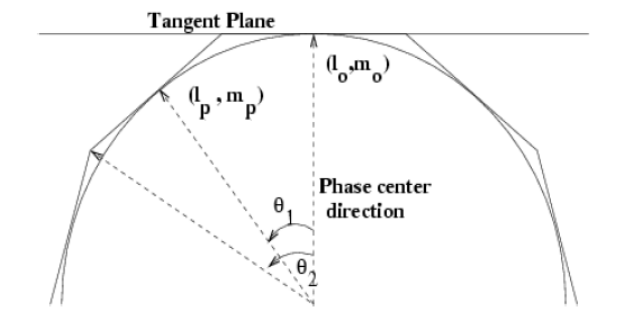

Multi-Scale, Wide-Field CLEAN (w-projection)

The next clean algorithm we will employ is w-projection, a wide-field imaging technique that takes into account the non-coplanarity of the baselines as a function of distance from the phase center (Fig. 9). For wide-field imaging, the sky curvature and non-coplanar baselines result in a non-zero w-term. The w-term introduces a phase term that will limit the dynamic range of the resulting image. Applying 2-D imaging to such data will result in artifacts around sources away from the phase center, as we saw when running MS-CLEAN. Note that this mostly affects the lower frequency bands, especially for the more extended configurations, because the field of view decreases with increasing frequency.

The w-term can be corrected by faceting (approximating the curved sky by many smaller planes) in either the image or the uv-plane, or by employing w-projection. A combination of the two can also be employed within clean by setting the parameter gridmode='widefield'. If w-projection is employed, it will be done for each facet. Note that w-projection is an order of magnitude faster than the faceting algorithm but requires more memory.

For more details on w-projection, as well as the algorithm itself, see "The Noncoplanar Baselines Effect in Radio Interferometry: The W-Projection Algorithm". Also, the chapter on Imaging with Non-Coplanar Arrays may be helpful.
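The size of the neglected phase term can be estimated in plain Python. The function computes 2*pi*w*(sqrt(1 - l^2 - m^2) - 1), the phase that 2-D imaging ignores for a source at direction cosines (l, m). It assumes a round 5000 wavelengths for |w|, roughly what a 1 km baseline gives at 1.5 GHz (lambda ≈ 20 cm). This is an illustrative upper value, not one computed from this dataset. The function name is hypothetical.

```python
import math


def w_phase_error_rad(w_wavelengths, l, m):
    """Phase (rad) ignored by 2-D imaging: 2*pi*w*(sqrt(1 - l**2 - m**2) - 1).

    l, m are direction cosines relative to the phase centre.
    """
    return 2.0 * math.pi * w_wavelengths * (math.sqrt(1.0 - l * l - m * m) - 1.0)


# Assumed numbers: a ~1 km baseline at 1.5 GHz has |w| up to ~5000 wavelengths.
w = 5000.0
for offset_arcmin in (0.0, 15.0, 85.0):  # centre, half the PB FWHM, image edge
    l = math.sin(math.radians(offset_arcmin / 60.0))
    print(offset_arcmin, round(math.degrees(w_phase_error_rad(w, l, 0.0)), 1), "deg")
```

The error grows roughly with the square of the distance from the phase center. This is why the artifacts seen with MS-CLEAN are strongest for sources far out in the 170 arcmin image.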

# In CASA

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.ms.wProj',
      gridmode='widefield', imsize=1280, cell='8arcsec',
      wprojplanes=-1, multiscale=[0,6,10,30,60],
      interactive=False, niter=1000, weighting='briggs',
      stokes='I', threshold='0.1mJy', usescratch=F, imagermode='csclean')

viewer('SNR_G55_10s.ms.wProj.image')

- gridmode='widefield': Use the w-projection algorithm.

- wprojplanes=-1: the number of w-projection planes used for gridding. Setting it to -1 lets clean choose an appropriate number of planes for the given data.

This will take slightly longer than the previous imaging round; however, the resulting image (Fig. 10) has noticeably fewer artifacts. In particular, compare the same outlier source in the Multi-Scale w-projected image with the Multi-Scale-only image: note that the swept-back arcs have disappeared. There are still some obvious imaging artifacts remaining, though.

Multi-Scale, Multi-Term Frequency Synthesis

A consequence of simultaneously imaging the wide fractional bandwidths available with the VLA is that the primary and synthesized beams have substantial frequency-dependent variation over the observing band. If this is not accounted for, it will lead to imaging artifacts and compromise the achievable image rms.

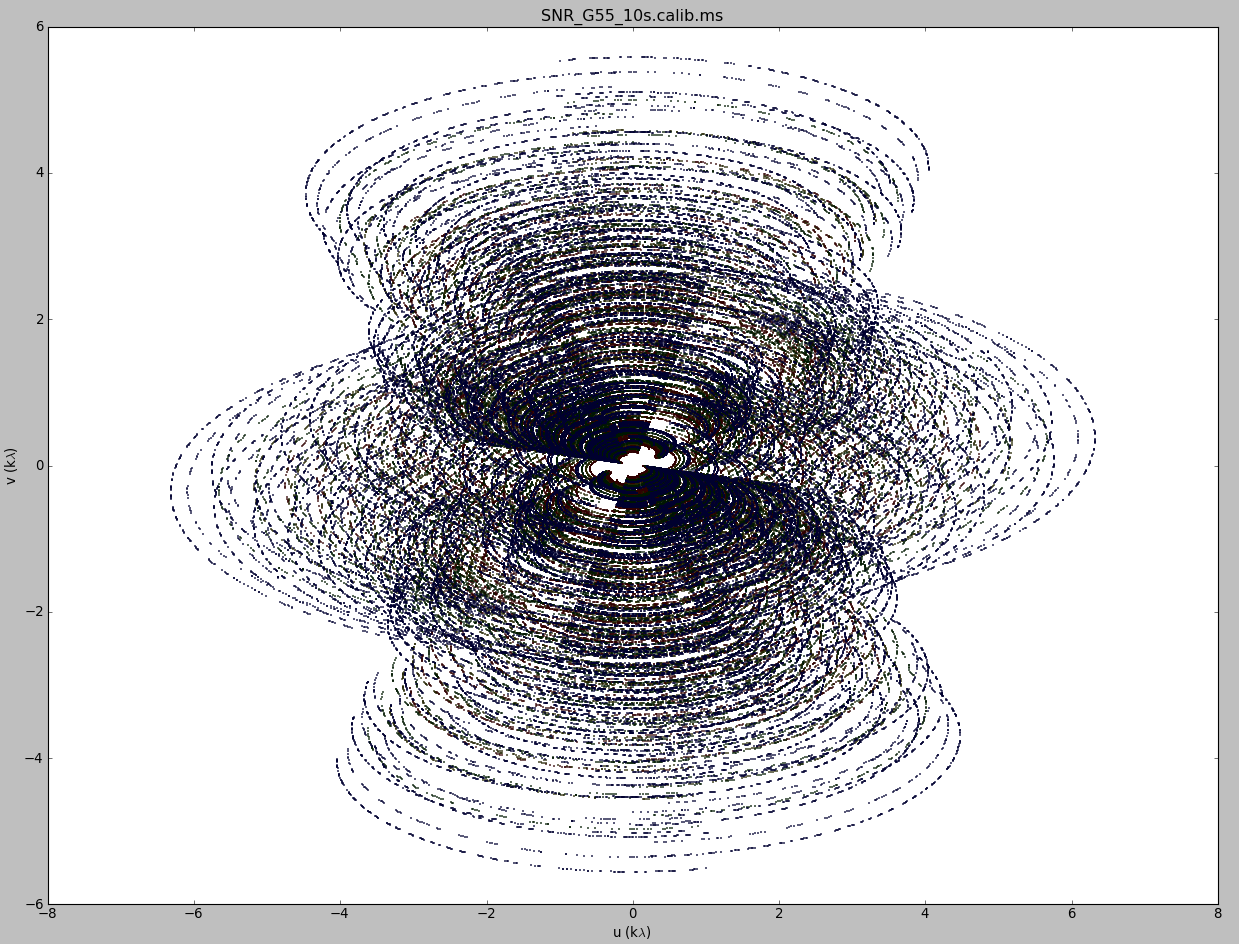

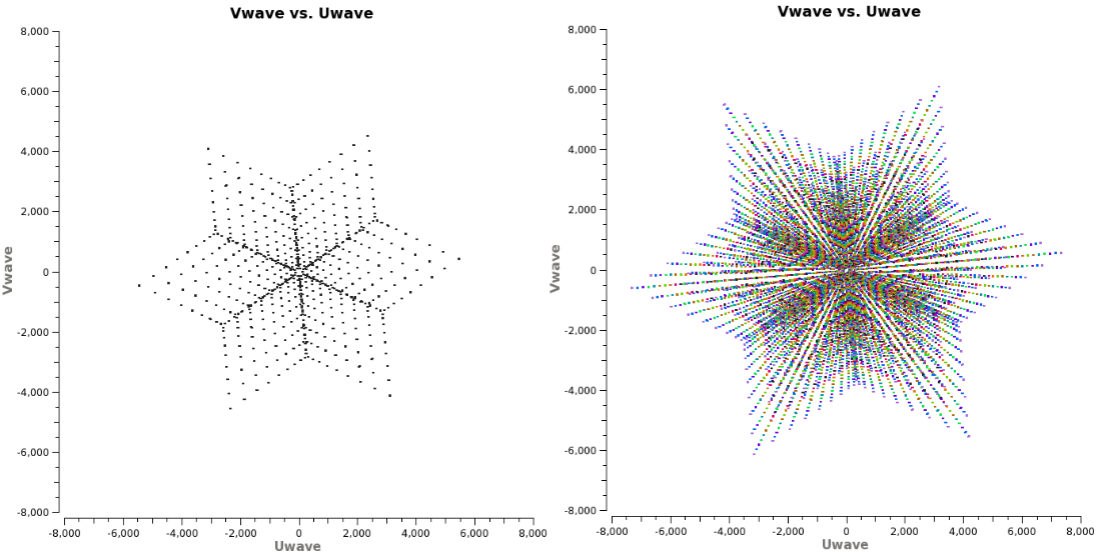

The dimensions of the (u,v) plane are measured in wavelengths. Therefore, when observing at several frequencies, a baseline samples several ellipses in the (u,v) plane, each of a different size. We can therefore fill in the gaps in the single-frequency (u,v) coverage (Fig. 11) to achieve a much higher image fidelity. This method is called Multi-Frequency Synthesis (MFS). At low frequencies, it may also prove beneficial to observe in short scans that are spread out in time. This covers more spatial frequencies and allows the algorithm to be employed more efficiently.
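The reason a single baseline fills in (u,v) coverage across the band is that its length in wavelengths is B/lambda = B*nu/c. A plain-Python sketch for a hypothetical 1 km projected baseline across 1-2 GHz:

```python
C_M_PER_S = 299_792_458.0  # speed of light


def uv_distance_wavelengths(baseline_m, freq_hz):
    """Projected baseline length measured in wavelengths: B / lambda = B * nu / c."""
    return baseline_m * freq_hz / C_M_PER_S


# A hypothetical 1 km projected baseline sampled across the 1-2 GHz band.
for freq_ghz in (1.0, 1.25, 1.5, 1.75, 2.0):
    print(freq_ghz, round(uv_distance_wavelengths(1000.0, freq_ghz * 1e9)))
```

Over this 2:1 band, the same physical baseline covers a factor of 2 in uv-distance, roughly 3300 to 6700 wavelengths.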

The Multi-Scale Multi-Frequency Synthesis algorithm (also known as Multi-Term Frequency Synthesis) provides the ability to simultaneously image and fit for the intrinsic source spectrum. The spectrum is approximated using a polynomial Taylor-term expansion in frequency, with the degree of the polynomial as a user-controlled parameter. A least-squares approach is used, along with the standard clean-type iterations.
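A plain-Python sketch of the Taylor-term description of a spectrum. It assumes the expansion I(nu) = sum_t I_t ((nu - nu0)/nu0)^t about a reference frequency nu0, as in the Rau & Cornwell paper cited below. For a power law I0 (nu/nu0)^alpha, this gives I_1 = alpha I_0, so alpha = I_1/I_0. With a third term, the curvature is beta = I_2/I_0 - alpha(alpha - 1)/2. The function names are hypothetical and are not CASA calls.

```python
def taylor_spectrum(coeffs, nu, nu0):
    """Evaluate sum_t I_t * ((nu - nu0) / nu0)**t for Taylor coefficients I_t."""
    x = (nu - nu0) / nu0
    return sum(c * x ** t for t, c in enumerate(coeffs))


def spectral_index(tt0, tt1):
    """alpha = I_1 / I_0 (first-order relation, nterms=2)."""
    return tt1 / tt0


def spectral_curvature(tt0, tt1, tt2):
    """beta = I_2 / I_0 - alpha * (alpha - 1) / 2 (nterms=3)."""
    alpha = tt1 / tt0
    return tt2 / tt0 - alpha * (alpha - 1.0) / 2.0


# A power law S(nu) = 1 Jy * (nu / 1.5 GHz)**-0.7, expanded about nu0 = 1.5 GHz.
alpha, nu0 = -0.7, 1.5
coeffs = [1.0, alpha * 1.0]  # I_0, I_1 for nterms=2
for nu in (1.0, 1.5, 2.0):
    print(nu, round((nu / nu0) ** alpha, 3), round(taylor_spectrum(coeffs, nu, nu0), 3))
print(spectral_index(*coeffs))  # -0.7
```

The first-order polynomial matches the power law at nu0 and drifts away from it toward the band edges. This is one motivation for using more Taylor terms on wide bands.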

For a more detailed explanation of the MS-MFS deconvolution algorithm, please see the paper by Urvashi Rau and Tim J. Cornwell entitled A multi-scale multi-frequency deconvolution algorithm for synthesis imaging in radio interferometry.

# In CASA

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.ms.MFS',
      imsize=1280, cell='8arcsec', mode='mfs', nterms=2,
      multiscale=[0,6,10,30,60],
      interactive=False, niter=1000, weighting='briggs',
      stokes='I', threshold='0.1mJy', usescratch=F, imagermode='csclean')

viewer('SNR_G55_10s.ms.MFS.image.tt0')

viewer('SNR_G55_10s.ms.MFS.image.alpha')

- nterms=2: the number of Taylor terms to be used to model the frequency dependence of the sky emission. Note that the speed of the algorithm will depend on the value used here (more terms will be slower). nterms=2 will fit a spectral index, and nterms=3 a spectral index and curvature.

This will take much longer than the two previous methods, so it would probably be a good time to have coffee or chat about VLA data reduction with your neighbor at this point.

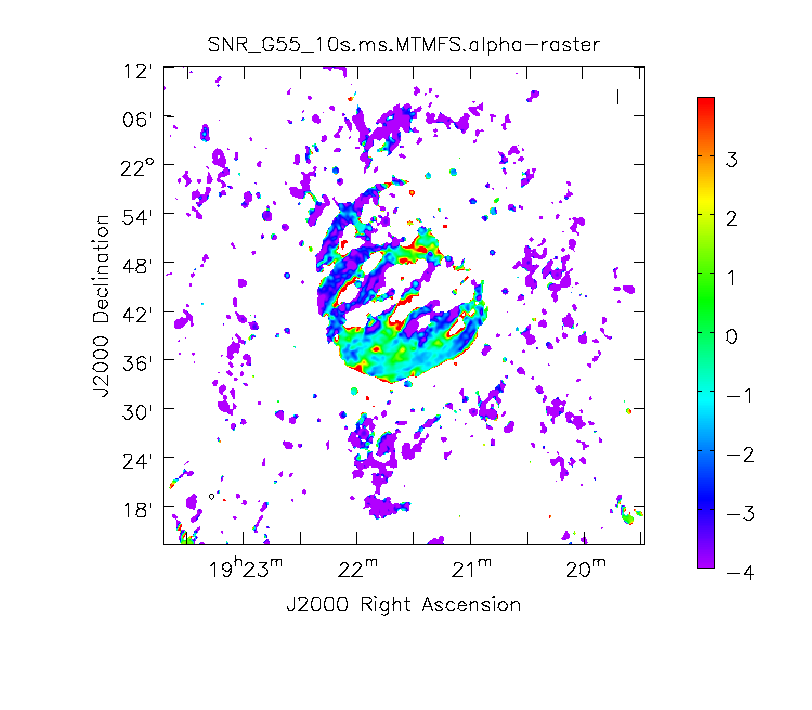

When clean is done, <imagename>.image.tt0 will contain the total intensity image (Fig. 12), where the suffix tt* indicates the Taylor term, and <imagename>.image.alpha will contain an image of the spectral index in regions where there is sufficient signal-to-noise (Fig. 13). This spectral index image can help convey information about the emission mechanism within the supernova remnant. It can also give information on the optical depth of the source.

For more information on the multi-term, multi-frequency synthesis mode and its outputs, see section 5.2.5.1 in the CASA cookbook.

Inspect the brighter point sources in the field near the supernova remnant. You will notice that some of the artifacts, which had been symmetric around the sources, are now gone. However, since we did not use W-Projection this time, strong features related to non-coplanar baseline effects are still apparent for sources farther away.

At this point, clean takes into account the frequency variation of the synthesized beam but not that of the primary beam. For low frequencies and large fractional bandwidths, this variation can be substantial. Across the 1-2 GHz L band, for example, the primary beam size changes by a factor of 2. One effect of such a large fractional bandwidth is that, in multi-frequency synthesis, the primary beam nulls are blurred and the interferometer is sensitive everywhere in the field of view. For spectral indices, however, a frequency-dependent primary beam causes an artificial steepening away from the phase center, because the higher frequencies are less sensitive at a given offset. This effect should be corrected with the task widebandpbcor (see below).
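To see why the steepening happens, consider a source with a true power-law spectrum observed through a Gaussian primary beam whose FWHM scales as 1/ν. The 42 arcmin FWHM at 1 GHz used below is an assumed, illustrative value; check the Observational Status Summary for the actual VLA numbers. The two-point spectral index is also a simplification of what MS-MFS measures, and all function names are hypothetical.

```python
import math

FWHM_1GHZ_ARCMIN = 42.0  # assumed, illustrative primary beam FWHM at 1 GHz


def pb_fwhm_arcmin(nu_ghz):
    """Primary beam FWHM, assuming it scales as 1/frequency."""
    return FWHM_1GHZ_ARCMIN / nu_ghz


def pb_gain(offset_arcmin, nu_ghz):
    """Gaussian primary beam response at a given offset from the phase center."""
    fwhm = pb_fwhm_arcmin(nu_ghz)
    return math.exp(-4.0 * math.log(2.0) * (offset_arcmin / fwhm) ** 2)


def apparent_alpha(alpha_true, offset_arcmin, nu1_ghz, nu2_ghz):
    """Two-point spectral index of a power-law source seen through the primary beam."""
    s1 = nu1_ghz ** alpha_true * pb_gain(offset_arcmin, nu1_ghz)
    s2 = nu2_ghz ** alpha_true * pb_gain(offset_arcmin, nu2_ghz)
    return math.log(s2 / s1) / math.log(nu2_ghz / nu1_ghz)


print(pb_fwhm_arcmin(1.0) / pb_fwhm_arcmin(2.0))  # beam size ratio across 1-2 GHz
for offset in (0.0, 5.0, 15.0):
    print(offset, apparent_alpha(-0.7, offset, 1.0, 2.0))
```

At the phase center the apparent index equals the true one, and it gets steeper with offset. In this Gaussian model the local spectral index at ν0 is shifted by 2 ln PB(r, ν0), which is about -1.39 at the half-power point.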

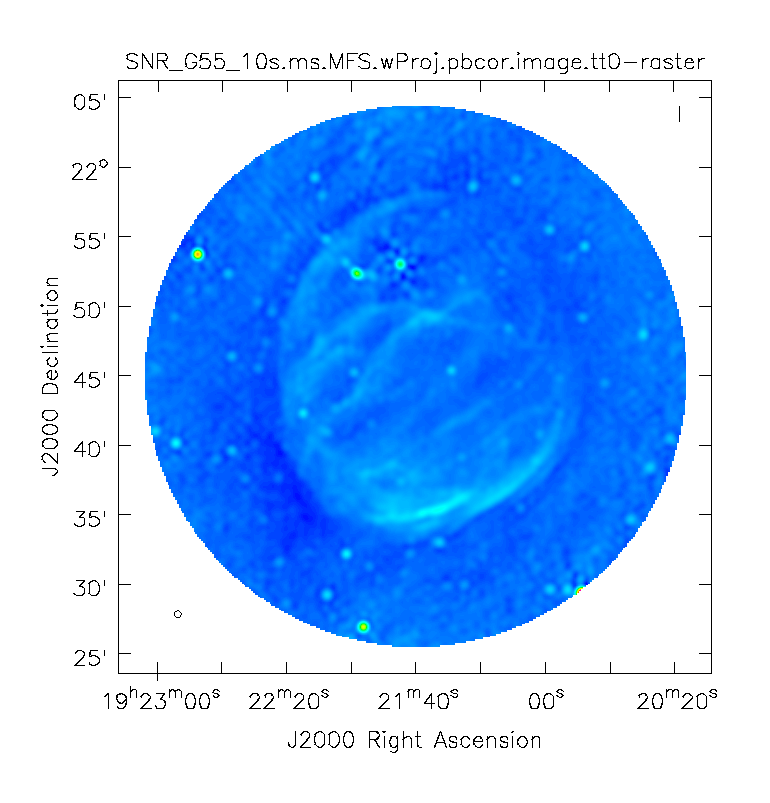

Multi-Scale, Multi-Term Frequency, Widefield CLEAN

Finally, we will combine the W-Projection and MS-MFS algorithms. Be forewarned: these imaging runs will take a while, so it's best to start them and then move on to other things.

Using the same parameters as for the individual-algorithm images above, but combined into a single clean run, we have:

# In CASA

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.ms.MFS.wProj',
      gridmode='widefield', imsize=1280, cell='8arcsec', mode='mfs',
      nterms=2, wprojplanes=-1, multiscale=[0,6,10,30,60],
      interactive=False, niter=1000, weighting='briggs',
      stokes='I', threshold='0.1mJy', usescratch=F, imagermode='csclean')

viewer('SNR_G55_10s.ms.MFS.wProj.image.tt0')

viewer('SNR_G55_10s.ms.MFS.wProj.image.alpha')

Looking again at the same outlier source, we can see that the major sources of error have been removed, although some residual artifacts remain (Fig. 14). One possible source of error is the time-dependent variation of the primary beam. Another is that we have only used nterms=2, which may not be sufficient to model the spectra of some of the point sources. Some weak RFI may also remain and require additional flagging.

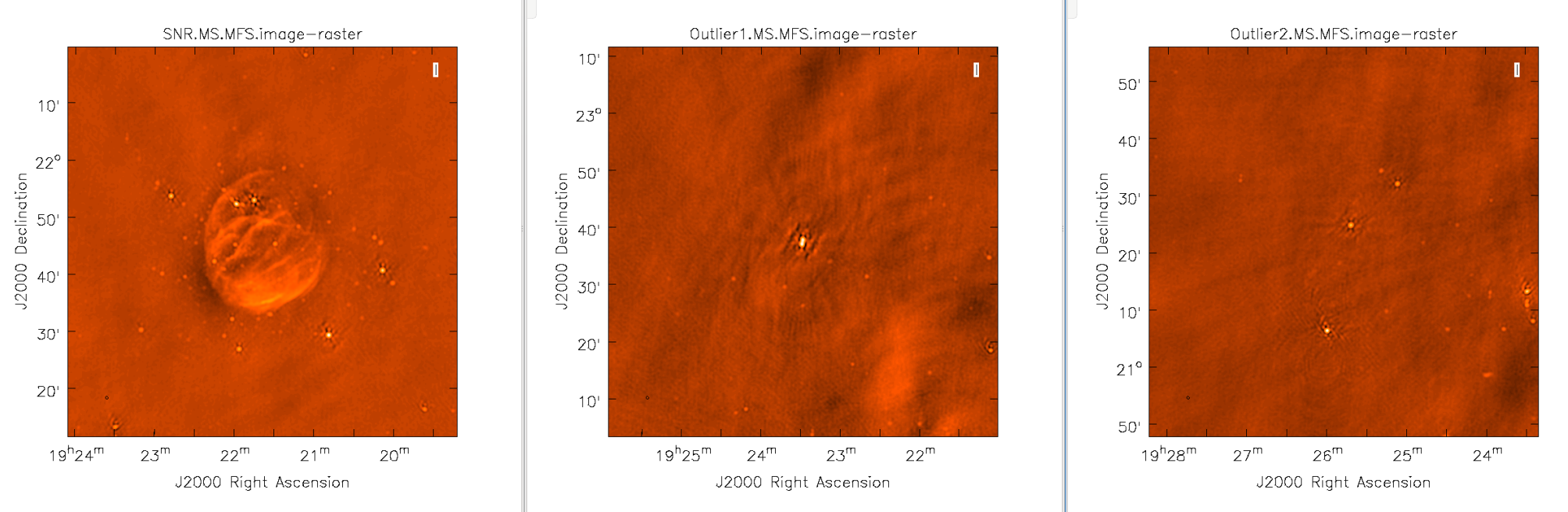

Imaging Outlier Fields

Strong sources that lie far from the main target but are still bright enough to produce sidelobes in the main image should be cleaned. Sometimes, however, it is not practical to make very large images for this purpose. An alternative is to use outlier fields: this mode allows the user to specify a few locations that are then cleaned along with the main image. The outlier fields should be centered on strong sources that are, e.g., known from sky catalogs or identified by other means.

Outlier fields are specified in an outlier file (here: 'outliers.txt') that contains the names, sizes, and positions (see Sect. 5.3.18.1 of the CASA cookbook):

#content of outliers.txt
#
#outlier field1
imagename='Outlier1.MS.MFS'
imsize=[512,512]
phasecenter = 'J2000 19h23m27.693 22d37m37.180'
#
#outlier field2
imagename='Outlier2.MS.MFS'
imsize=[512,512]
phasecenter = 'J2000 19h25m46.888 21d22m03.365'

clean is then run as follows:

# In CASA

clean(vis='SNR_G55_10s.calib.ms', imagename='SNR.MS.MFS-Main',
      outlierfile='outliers.txt',
      imsize=[512,512], cell='8arcsec', mode='mfs',
      multiscale=[0,6,10,30,60], interactive=False, niter=1000, weighting='briggs',
      robust=0, stokes='I', threshold='0.1mJy', usescratch=F, imagermode='csclean')

- outlierfile='outliers.txt': the name of the outlier file.
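When there are many outlier sources, the outlier file can be generated instead of typed by hand. Below is a minimal sketch in plain Python that reproduces the layout of the example outliers.txt, using its two fields. `outlier_file_text` is a hypothetical helper, and we assume clean accepts this layout exactly as it accepts the hand-written example.

```python
def outlier_file_text(fields):
    """Build outlier-file text in the layout of the example outliers.txt.

    fields: list of (imagename, (nx, ny), phasecenter) tuples.
    """
    lines = ["#content of outliers.txt"]
    for i, (name, (nx, ny), phasecenter) in enumerate(fields, start=1):
        lines += [
            "#",
            "#outlier field%d" % i,
            "imagename='%s'" % name,
            "imsize=[%d,%d]" % (nx, ny),
            "phasecenter = '%s'" % phasecenter,
        ]
    return "\n".join(lines) + "\n"


fields = [
    ("Outlier1.MS.MFS", (512, 512), "J2000 19h23m27.693 22d37m37.180"),
    ("Outlier2.MS.MFS", (512, 512), "J2000 19h25m46.888 21d22m03.365"),
]
text = outlier_file_text(fields)
print(text)
```

Writing `text` to 'outliers.txt' (e.g. with `open('outliers.txt', 'w').write(text)`) produces a file that can be passed to clean through outlierfile.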

Primary Beam Correction

In interferometry, the images formed via deconvolution are representations of the sky multiplied by the primary beam response of the antennas. The primary beam can be described by a Gaussian whose size depends on the observing frequency (see above). By default, images produced by clean are not corrected for the primary beam pattern (a correction that is especially important for mosaics). They therefore do not have the correct flux density away from the phase center.

Primary beam correction can be done during clean by using the pbcor parameter. It can also be done after imaging, using the task impbcor for regular data sets and widebandpbcor for those that use a Taylor-term expansion (nterms > 1). A third possibility is to use the task immath to manually divide <imagename>.image by <imagename>.flux (<imagename>.flux.pbcoverage for mosaics).

Primary beam corrected images usually don't look pretty, because the noise increases toward the edges. Flux densities, however, should only be measured on primary beam corrected images. Let's run the impbcor task to correct our multiscale image.

# In CASA

impbcor(imagename='SNR_G55_10s.MultiScale.image', pbimage='SNR_G55_10s.MultiScale.flux',
        outfile='SNR_G55_10s.MS.pbcorr.image')

- imagename: the image to be corrected

- pbimage: the <imagename>.flux image as a representation of the primary beam (<imagename>.flux.pbcoverage for mosaics)
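Conceptually, primary beam correction divides each pixel by the primary beam gain at that position, as in the immath division of the image by the .flux image. The sketch below does the same on nested lists and shows why the noise grows toward the edges. The pbmin-style cutoff mirrors the widebandpbcor parameter of that name. `pb_correct` and `corrected_noise` are hypothetical helpers, not CASA tasks.

```python
def pb_correct(image, pb, pbmin=0.2):
    """Divide each pixel by the primary beam gain; blank (None) where pb < pbmin."""
    return [
        [v / p if p >= pbmin else None for v, p in zip(img_row, pb_row)]
        for img_row, pb_row in zip(image, pb)
    ]


def corrected_noise(sigma, pb_gain):
    """Noise after correction: a uniform image noise sigma is scaled by 1/pb."""
    return sigma / pb_gain


# Assumed example: a 1 mJy source seen at three offsets with PB gains 1.0, 0.5, 0.1.
pb = [[1.0, 0.5, 0.1]]
image = [[1.0e-3 * g for g in pb[0]]]  # apparent (uncorrected) intensities
print(pb_correct(image, pb))
print(corrected_noise(20e-6, 0.25))  # noise where the gain has dropped to 0.25
```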

Let us now use the widebandpbcor task for wideband (nterms>1) images. Note that for this task, we will be supplying the image name that is the prefix for the Taylor expansion images, tt0 and tt1, which must be on disk. Such files were created, e.g., during the last Multi-Scale, Multi-Frequency, Widefield run of CLEAN.

# In CASA

widebandpbcor(vis='SNR_G55_10s.calib.ms', imagename='SNR_G55_10s.ms.MFS.wProj',
              nterms=2, action='pbcor', pbmin=0.2, spwlist=[0,1,2,3],
              weightlist=[0.5,1.0,0,1.0], chanlist=[63,63,63,63], threshold='0.1Jy')

- spwlist=[0,1,2,3]: We want to apply this correction to all spectral windows in our calibrated measurement set.

- weightlist=[0.5,1.0,0,1.0]: Since we did not specify reference frequencies during clean, widebandpbcor picks them from the provided image. When the task runs, the logger reports the frequencies used for the primary beam: 1.256, 1.429, 1.602, and 1.775 GHz. An amplitude vs. frequency plot of the calibrated measurement set, colorized by spectral window in plotms, shows that the first frequency lies within spectral window 0. That window was heavily flagged because of RFI, so we give this frequency a lower weight (0.5). The third frequency, 1.602 GHz, lies in a region with no data, so it gets a weight of zero. The remaining frequencies, 1.429 and 1.775 GHz, lie within spectral windows with less RFI, so they get full weight (1.0).

- pbmin=0.2: primary beam gain level below which Taylor coefficients are not computed and no primary beam correction is applied.

- chanlist=[63,63,63,63]: the channel in each spectral window at which the primary beam is evaluated. Our spectral windows have 64 channels, numbered 0 to 63.

- threshold='0.1Jy': intensity threshold in the total intensity (tt0) image below which the spectral index is not recalculated.
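The idea behind a wideband correction can be seen from first-order algebra. The observed spectrum is the sky spectrum times the primary beam, so its Taylor terms are products of the sky and beam series. Below is a sketch of the math only, for one pixel with nterms=2. It assumes the beam's frequency dependence is itself described by Taylor coefficients p0 and p1, which we assume is similar to what widebandpbcor derives from the beams at the listed frequencies. It is not the task's implementation, and `wideband_pbcor` is a hypothetical helper.

```python
def wideband_pbcor(i_obs0, i_obs1, p0, p1, pbmin=0.2):
    """First-order primary beam correction of nterms=2 Taylor-term values at one pixel.

    To first order in x = (nu - nu0)/nu0 the observed Taylor terms are
        i_obs0 = p0 * i0
        i_obs1 = p0 * i1 + p1 * i0
    Returns (i0, alpha) with alpha = i1 / i0, or None where p0 < pbmin.
    """
    if p0 < pbmin:
        return None
    i0 = i_obs0 / p0
    i1 = (i_obs1 - p1 * i0) / p0
    return i0, i1 / i0


# Assumed pixel: true I0 = 10 mJy, alpha = -0.7, PB gain 0.6 falling with frequency (p1 < 0).
i0_true, alpha_true, p0, p1 = 10e-3, -0.7, 0.6, -0.5
i_obs0 = p0 * i0_true
i_obs1 = p0 * alpha_true * i0_true + p1 * i0_true
print("observed alpha:", i_obs1 / i_obs0)
print("corrected:", wideband_pbcor(i_obs0, i_obs1, p0, p1))
```

The corrected index works out to the observed one minus p1/p0, so a beam that shrinks with frequency (p1 < 0) makes the uncorrected spectrum look steeper.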

Note that the corrected image is cut off where the primary beam response falls below 20% (pbmin=0.2), because the correction is considered accurate only above that level. Outside this region the accuracy degrades, so a mask is attached to the primary beam corrected image (Fig. 16). Spectral indices may still be unreliable where the primary beam response is below approximately 70%.
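To get a feel for where these gain levels fall on the sky, one can solve a Gaussian beam model for the offset at a given gain. The sketch below reuses the assumed, illustrative 42 arcmin FWHM at 1 GHz (scaled as 1/ν), which is not an official VLA value. `radius_at_gain` is a hypothetical helper.

```python
import math

FWHM_1GHZ_ARCMIN = 42.0  # assumed, illustrative primary beam FWHM at 1 GHz


def radius_at_gain(gain, nu_ghz):
    """Offset (arcmin) at which a Gaussian primary beam falls to `gain` (0 < gain <= 1)."""
    fwhm = FWHM_1GHZ_ARCMIN / nu_ghz
    # Solve exp(-4 ln2 (r/fwhm)^2) = gain for r.
    return 0.5 * fwhm * math.sqrt(math.log(1.0 / gain) / math.log(2.0))


for g in (0.7, 0.5, 0.2):
    print(g, radius_at_gain(g, 1.5))
```

The 0.5 gain level sits at half the FWHM by construction. The 0.2 cutoff lies further out, and the 0.7 level lies well inside the half-power radius.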

It would be a good exercise to use the viewer to plot both the primary beam corrected image, and the original cleaned image and compare the intensity (Jy/beam) values, which should differ slightly.

Imaging Spectral Cubes

For spectral line imaging, CASA clean can be used in either mode='frequency' or mode='velocity'. Both create a spectral axis in frequency; the velocity mode adds a velocity label to it.

The following keywords are important for spectral modes (velocity in this example):

# In CASA

mode = 'velocity' # Spectral gridding type (mfs, channel, velocity, frequency)
nchan = -1 # Number of channels (planes) in output image; -1 = all
start = '' # Velocity of first channel: e.g '0.0km/s'(''=first channel in first SpW of MS)
width = '' # Channel width e.g '-1.0km/s' (''=width of first channel in first SpW of MS)
<snip>
outframe = '' # spectral reference frame of output image; '' =input
veltype = 'radio' # Velocity definition of output image
<snip>
restfreq = '' # Rest frequency to assign to image (see help)

The spectral dimension of the output cube is defined by these parameters, and clean will regrid the visibilities onto it. Note that running cvel before imaging is in most cases not necessary, even when two or more measurement sets are provided at the same time.

The cube is specified by a start velocity in km/s, the nchan number of channels and a channel width (where the latter can also be negative for decreasing velocity cubes).

To correct for Doppler motions, clean also requires a velocity reference frame (outframe). The most commonly used are LSRK (kinematic Local Standard of Rest) and BARY (solar system barycenter). veltype defines whether the velocity axis uses the optical or the radio velocity definition. A description of the available options and definitions can be found in the VLA observing guide and the CASA cookbook. By default, clean will produce a cube in LSRK with the radio velocity definition.
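The relation between the start/width/nchan grid and the sky frequencies can be sketched with the standard velocity definitions: radio v = c (ν0 - ν)/ν0 and optical v = c (ν0 - ν)/ν. The helper names are hypothetical. The HI rest frequency (1.420405752 GHz) and the channel grid are example choices, and frame conversion (e.g. to LSRK) is not modeled.

```python
C_KMS = 299792.458  # speed of light in km/s


def channel_velocities(start_kms, width_kms, nchan):
    """Velocity of each channel for a cube defined by start, width and nchan."""
    return [start_kms + k * width_kms for k in range(nchan)]


def sky_frequency(v_kms, restfreq_ghz, veltype="radio"):
    """Frequency at which a line with rest frequency restfreq_ghz appears at velocity v."""
    if veltype == "radio":
        # v_radio = c * (nu0 - nu) / nu0  ->  nu = nu0 * (1 - v/c)
        return restfreq_ghz * (1.0 - v_kms / C_KMS)
    if veltype == "optical":
        # v_optical = c * (nu0 - nu) / nu  ->  nu = nu0 / (1 + v/c)
        return restfreq_ghz / (1.0 + v_kms / C_KMS)
    raise ValueError("veltype must be 'radio' or 'optical'")


# Example: HI line, 5 channels starting at +10 km/s with a negative width of -2 km/s.
vels = channel_velocities(10.0, -2.0, 5)
for v in vels:
    print(v, sky_frequency(v, 1.420405752), sky_frequency(v, 1.420405752, "optical"))
```

The two definitions agree at zero velocity and diverge as |v| grows, which is why the velocity definition has to be stated for every cube.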

Note that clean will always work on the entire cube when searching for the highest residual fluxes in the minor cycle. To do this per channel, one can set chaniter=T.

CASA also offers mode='channel', but we do not recommend this mode because the VLA does not observe with Doppler tracking. In other words, because the VLA observes at a fixed frequency, any spectral feature drifts in frequency over time. The Doppler correction is then applied in clean via mode='velocity' or mode='frequency', not at the telescope itself. mode='channel' can also be confusing when imaging spectral windows that are shifted but overlapping.

An example of spectral line imaging procedures is provided in the EVLA high frequency Spectral Line tutorial - IRC+10216.

Beam per Plane

For large spectral cubes, the synthesized beam can vary substantially across the channels. To account for this, CASA calculates a separate beam for each channel when the beam varies by more than half a pixel across the cube. All CASA image analysis tasks can handle such cubes.
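The half-pixel criterion can be checked with a quick sketch. It assumes the synthesized beam scales as 1/ν (same uv coverage in every channel) and uses the roughly 46 arcsec D-configuration beam and 8 arcsec cell from earlier in this guide, with an assumed 1.5 GHz reference frequency. Both helper names are hypothetical.

```python
def beams_per_channel(ref_beam_arcsec, ref_freq_ghz, chan_freqs_ghz):
    """Synthesized beam FWHM per channel, assuming it scales as 1/frequency."""
    return [ref_beam_arcsec * ref_freq_ghz / nu for nu in chan_freqs_ghz]


def needs_per_plane_beams(beams_arcsec, cell_arcsec):
    """True if the beam varies by more than half a pixel across the cube."""
    return max(beams_arcsec) - min(beams_arcsec) > 0.5 * cell_arcsec


# Assumed example: 46 arcsec beam at 1.5 GHz, 8 arcsec cells.
wide = beams_per_channel(46.0, 1.5, [1.0, 1.5, 2.0])
narrow = beams_per_channel(46.0, 1.5, [1.4995, 1.5, 1.5005])
print(needs_per_plane_beams(wide, 8.0), needs_per_plane_beams(narrow, 8.0))
```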

If a cube with a single synthesized beam is desired, two options are available. The preferred one is imsmooth with kernel='commonbeam', which smooths each plane to the resolution of the lowest-resolution plane and updates the header so that only a single beam remains. The second option is to set resmooth=T directly in clean.
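Smoothing to a common beam relies on the fact that Gaussian FWHMs add in quadrature under convolution. The sketch below is restricted to circular beams, which is an assumption: real beams are elliptical with a position angle, which imsmooth handles and this sketch does not. Both helper names are hypothetical.

```python
import math


def common_beam(beams_arcsec):
    """For circular beams, the common (target) beam is simply the largest one."""
    return max(beams_arcsec)


def smoothing_kernel(plane_beam_arcsec, target_beam_arcsec):
    """FWHM of the circular Gaussian that convolves plane_beam up to target_beam."""
    if plane_beam_arcsec > target_beam_arcsec:
        raise ValueError("cannot smooth to a smaller beam")
    return math.sqrt(target_beam_arcsec ** 2 - plane_beam_arcsec ** 2)


beams = [34.5, 46.0, 69.0]
target = common_beam(beams)
print([smoothing_kernel(b, target) for b in beams])
```

The lowest-resolution plane needs no smoothing (kernel of zero), while the sharpest planes need the widest kernels.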

Image Header

The image header holds metadata associated with your CASA image. The task imhead displays this metadata in the CASA logger. We will first run imhead with mode='summary':

# In CASA

imhead(imagename='SNR_G55_10s.ms.MFS.wProj.image.tt0', mode='summary')

- mode='summary': gives general information about the image, including the object name, sky coordinates, image units, the telescope the data were taken with, and more.

For further information about the image, let's now run it with mode='list':

# In CASA

imhead(imagename='SNR_G55_10s.ms.MFS.wProj.image.tt0', mode='list')

- mode='list': gives more detailed information, including the beam major/minor axes, the beam position angle, the location of the maximum/minimum intensity, and much more. Essentially, this mode displays the FITS header variables.

Next, we convert our image from intensity to main beam brightness temperature.

We will use the standard equation

[math]\displaystyle{ T=1.222\times 10^{6} \frac{S}{\nu^{2} \theta_{maj} \theta_{min}} }[/math]

where the main beam brightness temperature [math]\displaystyle{ T }[/math] is given in K, the intensity [math]\displaystyle{ S }[/math] in Jy/beam, the reference frequency [math]\displaystyle{ \nu }[/math] in GHz, and the major and minor beam sizes [math]\displaystyle{ \theta }[/math] in arcseconds.

For a beam of 29.30"x29.03" and a reference frequency of 1.579GHz (as taken from the previous imhead run) we calculate the brightness temperature using immath:

# In CASA

immath(imagename='SNR_G55_10s.ms.MFS.wProj.image.tt0', mode='evalexpr',
       expr='1.222e6*IM0/1.579^2/(29.30*29.03)',
       outfile='SNR_G55_10s.ms.MFS.wProj.image.tt0-Tb')

- mode='evalexpr': immath evaluates a mathematical expression on the input images

- expr: the mathematical expression to be evaluated. The images are abbreviated as IM0, IM1, ... in the sequence of their appearance in the imagename parameter.
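The immath expression is easy to sanity-check outside CASA. Below is a minimal sketch of the same brightness temperature equation, using the beam and reference frequency quoted above. The function name is hypothetical.

```python
def brightness_temperature_k(s_jy_per_beam, nu_ghz, bmaj_arcsec, bmin_arcsec):
    """Main beam brightness temperature (K) from T = 1.222e6 S / (nu^2 bmaj bmin)."""
    return 1.222e6 * s_jy_per_beam / (nu_ghz ** 2 * bmaj_arcsec * bmin_arcsec)


# Beam and reference frequency from the imhead output quoted above.
k_per_jy = brightness_temperature_k(1.0, 1.579, 29.30, 29.03)
print(k_per_jy)  # conversion factor in K per Jy/beam, roughly 576
```

With this beam, a 1 mJy/beam feature corresponds to roughly 0.58 K.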

Finally, we will change the brightness unit in the header of the new image to 'K'. To do this, we will run imhead with mode='put':

# In CASA

imhead(imagename='SNR_G55_10s.ms.MFS.wProj.image.tt0-Tb', mode='put', hdkey='bunit', hdvalue='K')

- hdkey: the header keyword that will be changed

- hdvalue: the new value for the header keyword

Launching the viewer will now show our image in brightness temperature units:

# In CASA

viewer('SNR_G55_10s.ms.MFS.wProj.image.tt0-Tb')

Last checked on CASA Version 4.5.2