VLA Self-calibration Tutorial-CASA6.4.1

<i>This CASA Guide is for Version 6.4.1 of CASA, and was last checked with CASA 6.5.4.</i>

<!--<div style="background-color: salmon">

<div style="background-color: salmon; margin: 20px">

__TOC__

Both standard calibration and selfcal work by comparing the visibility data with a model to solve for calibration solutions. With standard calibration, we are usually provided a model of our calibrator source by the observatory (e.g., VLA Flux-density calibrators) or we adopt a simple model (e.g., a 1 Jy point source at the phase center is a common assumption for VLA phase calibrators). With self-calibration we need to set a model for our target source, e.g., by imaging the target visibilities. Then for both standard calibration and selfcal we solve for calibration solutions after making choices about the solution interval, signal-to-noise, etc. When applying the standard calibration solutions we use interpolation to correct the target data, but for selfcal we apply the calibration solutions directly to the target field from which they were derived. For additional details about self-calibration, see [https://ui.adsabs.harvard.edu/abs/1999ASPC..180..187C/abstract Lecture 10] of Synthesis Imaging in Radio Astronomy II (eds. Taylor, Carilli & Perley).
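
To make "comparing the visibility data with a model to solve for calibration solutions" concrete, here is a minimal toy sketch of a phase-only antenna-gain solve in plain Python with NumPy. It is not how {{gaincal_6.5.4}} is implemented: it assumes a single channel and a single solution interval, noiseless data obeying V_ij = g_i M_ij conj(g_j), and it uses a StefCal-like fixed-point iteration chosen here purely for illustration. The function name ''solve_phase_only_gains'' and the simulated antenna phases are hypothetical.

<source lang="python">
# plain Python with numpy
import numpy as np

def solve_phase_only_gains(vis, model, n_iter=200, ref_ant=0):
    """Toy phase-only gain solve: vis[i, j] ~ g_i * model[i, j] * conj(g_j), |g_i| = 1.

    vis, model: (n_ant, n_ant) complex arrays for one channel and one solution
    interval. The diagonal (autocorrelations) is ignored.
    """
    n_ant = vis.shape[0]
    cross = ~np.eye(n_ant, dtype=bool)            # use only i != j baselines
    g = np.ones(n_ant, dtype=complex)             # start from unit gains
    for _ in range(n_iter):
        # Model visibilities corrupted by the current estimate of antenna j's gain.
        z = model * np.conj(g)[np.newaxis, :]
        # Least-squares estimate of g_i with every g_j held fixed; keep only the phase.
        num = np.sum(np.where(cross, vis * np.conj(z), 0.0), axis=1)
        g_new = num / np.abs(num)
        # Average with the previous estimate to damp oscillations, then renormalize.
        g = g + g_new
        g = g / np.abs(g)
    # Only phase differences are measurable, so reference all phases to ref_ant.
    return g * np.conj(g[ref_ant])

# Simulated example: 10 antennas, a point-source model, random antenna phases.
rng = np.random.default_rng(42)
true_phase = rng.uniform(-1.0, 1.0, 10)
true_phase -= true_phase[0]                       # antenna 0 is the reference
g_true = np.exp(1j * true_phase)
model = np.ones((10, 10), dtype=complex)          # 1 Jy point source at phase center
vis = np.outer(g_true, np.conj(g_true)) * model   # corrupted "observed" visibilities
g_fit = solve_phase_only_gains(vis, model)
print(np.abs(np.degrees(np.angle(g_fit / g_true))).max())  # residual phase error (deg), ~0
</source>

For standard calibration ''model'' would be something like the all-ones point-source model above; for selfcal it would be the visibilities predicted from an image of the target. Because only phase differences between antennas are constrained, the result is referenced to one antenna, which is the role the ''refant'' parameter plays in {{gaincal_6.5.4}}.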

In this guide, we will create a model using the target data (by running {{tclean_6.5.4}}) and use this model to solve for and apply calibration solutions (by running {{gaincal_6.5.4}} and {{applycal_6.5.4}}). <!--, then iteratively improve this model with further rounds of selfcal.--> This is the most common procedure, but there are other variants that are outside the scope of this guide. For example, your initial model for the target may come from fitting a model to the visibilities instead of imaging, or may be based on ''a priori'' knowledge of the target field. <!-- Some applications of selfcal may use other calibration tasks, e.g., {{bandpass}}, instead of or in addition to {{gaincal_6.5.4}}. -->

Each "round" of self-calibration presented here will follow the same general procedure:

# Create an initial model by conservatively cleaning the target field (see Section [[#The Initial Model|The Initial Model]]).
# Use '''{{gaincal_6.5.4}}''' with an initial set of parameters to calculate a calibration table (see Section [[#Solving for the First Self-Calibration Table|Solving for the First Self-Calibration Table]]).
# Inspect the calibration solutions using '''{{plotms_6.5.4}}''' (see Section [[#Plotting the First Self-Calibration Table|Plotting the First Self-Calibration Table]]).
# Optimize the calibration parameters (see Sections [[#Examples of Various Solution Intervals|Examples of Various Solution Intervals]] and [[#Comparing the Solution Intervals|Comparing the Solution Intervals]]).
# Use '''{{applycal_6.5.4}}''' to apply the table of solutions to the data (see Section [[#Applying the First Self-Calibration Table|Applying the First Self-Calibration Table]]).
# Use '''{{tclean_6.5.4}}''' to produce the self-calibrated image (see Section [[#Imaging the Self-calibrated Data|Imaging the Self-calibrated Data]]).

<!--
# Use '''{{split_6.5.4}}''' to write out the calibrated data with the applied solutions, the starting point for the next round of selfcal.
# Start the next round of selfcal.

* Advanced topics related to selfcal (e.g., peeling) -->
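
The round structure above can be summarized as an ordered list of task calls. The minimal sketch below only builds parameter dictionaries, so it runs in plain Python and executes nothing. The helper name ''selfcal_round_plan'', the file-naming scheme, ''niter=1000'', ''interp='linear''' and the reference antenna ''ea10'' in the example are hypothetical placeholders, not the values this guide settles on later; steps 3 and 4 (inspecting and optimizing solutions) are interactive and are not represented.

<source lang="python">
# plain Python: only builds parameter dictionaries, runs no CASA tasks
def selfcal_round_plan(vis, round_num, solint, refant, calmode='p', **imaging_kwargs):
    """Return [(task_name, kwargs), ...] for one hypothetical self-calibration round."""
    stem = vis[:-3] if vis.endswith('.ms') else vis
    base = '{}.selfcal{}'.format(stem, round_num)        # hypothetical naming scheme
    caltable = '{}.{}.gcal'.format(base, calmode)
    imaging = dict(imaging_kwargs, vis=vis)
    imaging.setdefault('niter', 1000)                     # placeholder clean depth
    return [
        # Step 1: conservative clean; savemodel writes the model to MODEL_DATA.
        ('tclean', dict(imaging, imagename=base + '.initial', datacolumn='data',
                        savemodel='modelcolumn')),
        # Step 2: solve for gains by comparing DATA with MODEL_DATA.
        ('gaincal', dict(vis=vis, caltable=caltable, solint=solint, refant=refant,
                         calmode=calmode, gaintype='G')),
        # Step 5: apply the solutions, filling CORRECTED_DATA.
        ('applycal', dict(vis=vis, gaintable=[caltable], interp='linear')),
        # Step 6: image the self-calibrated visibilities.
        ('tclean', dict(imaging, imagename=base + '.corrected', datacolumn='corrected')),
    ]

plan = selfcal_round_plan('obj.ms', 1, solint='int', refant='ea10',
                          imsize=9600, cell='0.2arcsec', gridder='widefield', wprojplanes=18)
for task_name, kwargs in plan:
    print(task_name, kwargs)
</source>

Inside a CASA session, each entry could be passed to the task of the same name, e.g. ''gaincal(**kwargs)''; in practice you would stop after the solve to inspect the solutions before applying them.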

The data set in this guide is a VLA observation of a massive galaxy cluster, MOO J1506+5137, at z=1.09 that is part of the Massive and Distant Clusters of ''WISE'' Survey (MaDCoWS: [https://ui.adsabs.harvard.edu/abs/2019ApJS..240...33G/abstract Gonzalez et al. 2019]). [Note: The target name in the data set is "MOO_1506+5136."] MOO J1506+5137 stands out in the MaDCoWS sample due to its high radio activity. From the 1300 highest-significance MaDCoWS clusters in the FIRST footprint, a sample of ~50 clusters with extended radio sources (defined as having at least one FIRST source with a deconvolved size exceeding 6.5" within 1' of the cluster center) was identified. This sample was observed with the VLA (PI: Gonzalez, 16B-289, 17B-197; PI: Moravec, 18A-039) as part of a larger study ([https://ui.adsabs.harvard.edu/abs/2020ApJ...888...74M/abstract Moravec et al. 2020a]). Through these follow-up observations, it was discovered that MOO J1506+5137 had high radio activity compared to the other clusters in the sample, with five radio sources, of which three had complex structure and two were bent-tail sources. The scientific question at hand is: why does this cluster have such high radio activity? The VLA data showcased in this tutorial, combined with other data sets, suggest that the exceptional radio activity among the massive galaxy population is linked to the dynamical state of the cluster ([https://ui.adsabs.harvard.edu/abs/2020ApJ...898..145M/abstract Moravec et al. 2020b]).

Note that the CASAviewer has not been maintained for a few years and will be removed from future versions of CASA.

The NRAO replacement visualization tool for images and cubes is CARTA, the “Cube Analysis and Rendering Tool for Astronomy”. It is available from the [https://cartavis.org/ CARTA] website. We strongly recommend using CARTA, as it provides a much more efficient, stable, and feature-rich user experience. A comparison of the CASAviewer and CARTA, as well as instructions on how to use CARTA at NRAO, are provided in the [https://casadocs.readthedocs.io/en/v6.5.4/notebooks/carta.html CARTA section of the CASA docs]. This tutorial shows figures generated with CARTA.

Finally, although it is not used in the 6.4.1 version of this guide, note that a new parameter, ''nmajor'', was introduced in [https://casadocs.readthedocs.io/en/v6.5.2/api/tt/casatasks.imaging.tclean.html CASA 6.5 tclean].

== When to Use Self-calibration ==

* When there is a bright outlying source with direction-dependent calibration errors

It can be difficult to determine the origin of an image artifact based solely on its appearance, especially without much experience in radio astronomy. Generally speaking, however, the errors that selfcal will help address are convolutional in nature and direction-independent. This means that every source of real emission in the image will have an error pattern of the same shape, and the brightness of the error pattern will scale with the brightness of the source. If the error pattern is symmetric (an even function), then it is most likely dominated by an error in visibility amplitude; if the error pattern is antisymmetric (an odd function), then it is probably due to an error in visibility phase. Selfcal can address both amplitude and phase errors. For a more complete discussion of error recognition, see [https://ui.adsabs.harvard.edu/abs/1999ASPC..180..321E/abstract Lecture 15] of Synthesis Imaging in Radio Astronomy II (eds. Taylor, Carilli & Perley).
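
A toy 1-D illustration of the symmetry rule of thumb, assuming a point source at the phase center, a fully sampled band of baselines, and errors confined to a subset of baselines (the grid size, coverage, and error sizes are all hypothetical). An amplitude error that is the same at +u and -u gives a purely even error pattern, while a phase error, which must flip sign between +u and -u to keep the sky brightness real, gives a mostly odd pattern; the small even part of the phase case comes from the second-order term 1 - cos(phase).

<source lang="python">
# plain Python with numpy
import numpy as np

N, K_MAX = 256, 40                                # toy 1-D grid and uv coverage
k = np.fft.fftfreq(N, d=1.0 / N)                  # integer u indices in FFT order
vis = np.where(np.abs(k) <= K_MAX, 1.0 + 0j, 0)   # point source at phase center: V(u) = 1
band = (np.abs(k) >= 10) & (np.abs(k) <= 20)      # baselines affected by the error

def dirty_image(v):
    """1-D dirty image; v is Hermitian, so the result is real."""
    return np.fft.ifft(v).real

def even_odd_parts(x):
    """Split x into parts that are even and odd about pixel 0."""
    x_mirror = np.roll(x[::-1], 1)                # x_mirror[n] == x[-n mod N]
    return 0.5 * (x + x_mirror), 0.5 * (x - x_mirror)

# Amplitude error: 20% gain excess, identical at +u and -u.
amp_gain = np.where(band, 1.2, 1.0)
# Phase error: +0.3 rad at +u and -0.3 rad at -u, keeping the visibilities Hermitian.
phase_gain = np.where(band, np.exp(0.3j * np.sign(k)), 1.0)

err_amp = dirty_image(vis * amp_gain) - dirty_image(vis)
err_phase = dirty_image(vis * phase_gain) - dirty_image(vis)
even_amp, odd_amp = even_odd_parts(err_amp)
even_phase, odd_phase = even_odd_parts(err_phase)
print(np.linalg.norm(odd_amp) / np.linalg.norm(even_amp))      # ~0: symmetric pattern
print(np.linalg.norm(odd_phase) / np.linalg.norm(even_phase))  # large: mostly antisymmetric
</source>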

<!--'''EM''': could we expound upon how one could tell the difference between the cases in which selfcal WILL help and bullets 1,2, and 4 when selfcal will not help? What do those actually look like? How does one identify each type of error? People who are just starting may not know how to tell the difference between these different types of errors in an image. To be honest, I am not sure I could tell you what the last 4 bullets looks like. -->

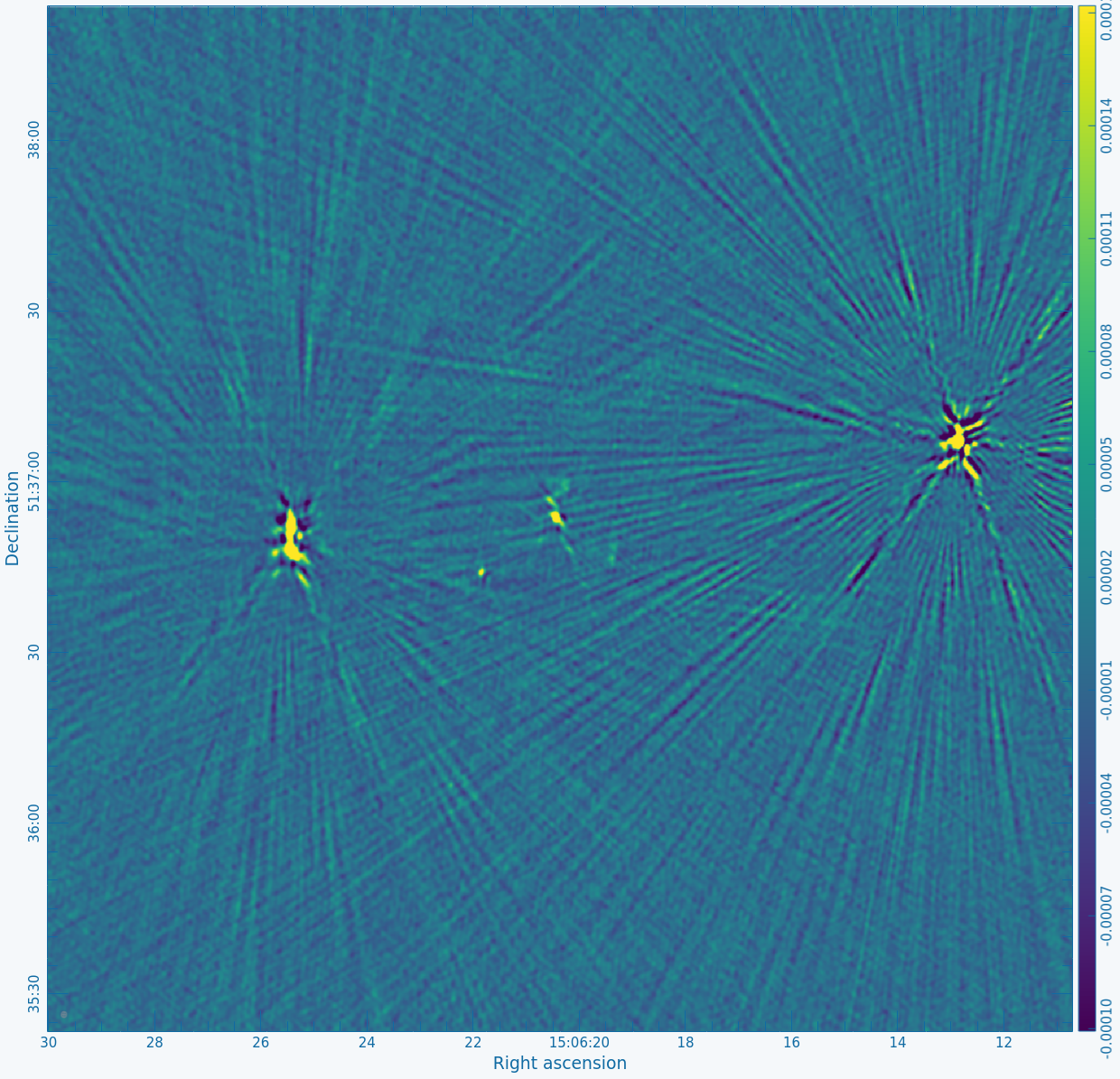

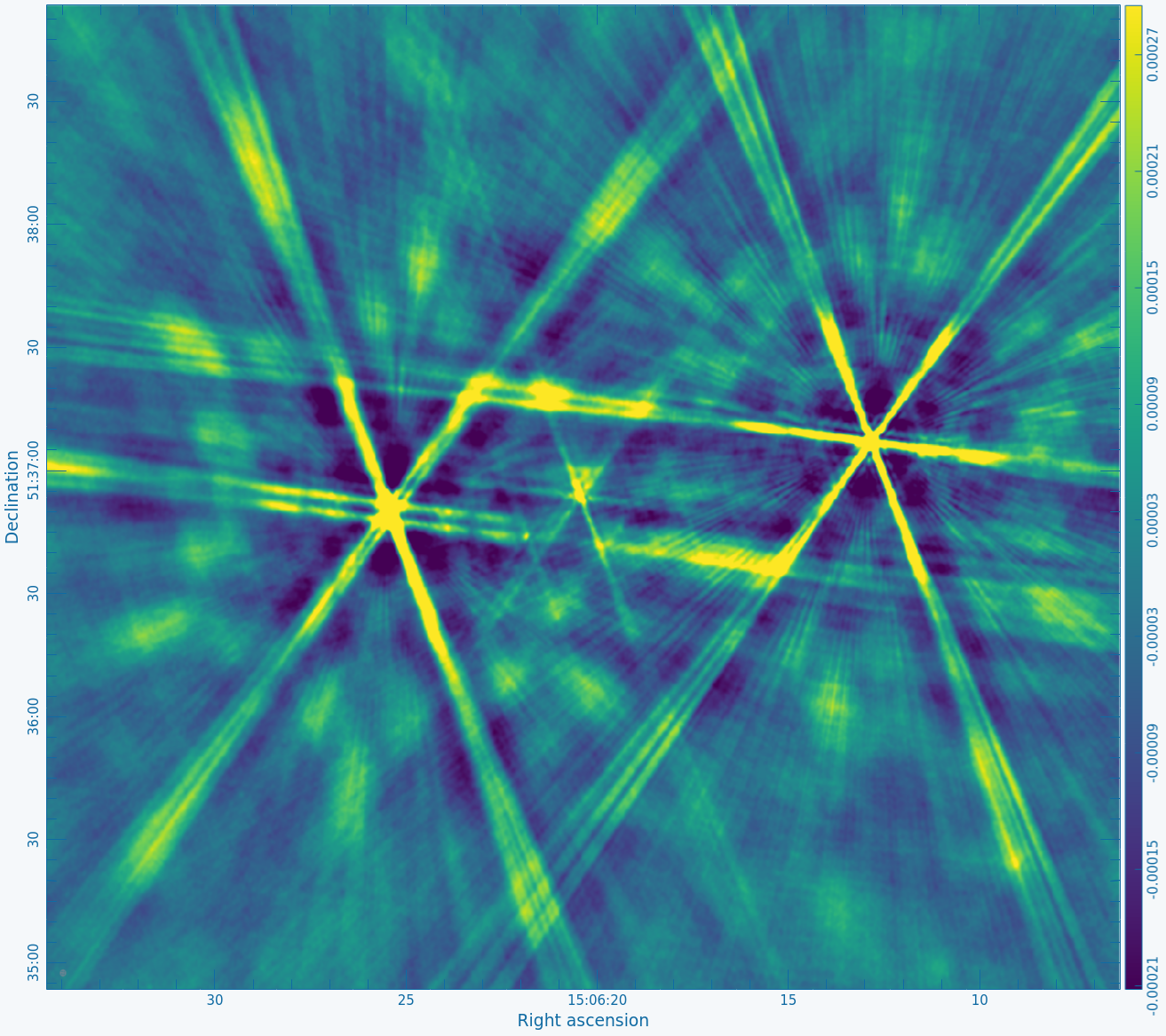

In the case of this guide, we believed that these data were a good candidate for selfcal because there were extensive artifacts centered on the source of interest (something very closely resembling Figure 4A) after an initial cleaning. These errors manifested as strong sidelobes radiating out from the sources of strong emission and with a shape that resembles the VLA dirty beam (i.e., a shape that is related to the observation's UV coverage). The artifacts did not lessen as we cleaned more deeply but instead appeared stronger relative to the residual image. Therefore, because phase and/or amplitude calibration errors could be a potential cause for the artifacts, and because the target source is relatively bright, we thought that selfcal could help improve the image quality.

== Data for this Tutorial ==

=== Observation Details ===

First, we will start CASA in the directory containing the data and then collect some basic information about the observation. This guide is meant to be used with monolithic CASA rather than the pip-wheel installation, because the GUIs are not necessarily validated in the pip-wheel version. The task {{listobs_6.5.4}} can be used to display the individual scans comprising the observation, the frequency setup, the source list, and the antenna locations. The {{listobs_6.5.4}} task returns an output dictionary, which we will store in a variable; otherwise, the contents of this dictionary will be printed to the console. You may optionally specify an output text file (the ''listfile'' parameter), to which the output will then be written instead of the CASA log file.

<source lang="python">
# in CASA
listobs_output = listobs(vis='17B-197.sb34290063.eb34589992.58039.86119096065.ms', listfile='listobs.txt')
</source>

A portion of the {{listobs_6.5.4}} output is shown below, as it appears in the logger window and the CASA log file or specified output file.

<pre style="background-color: #fffacd;">

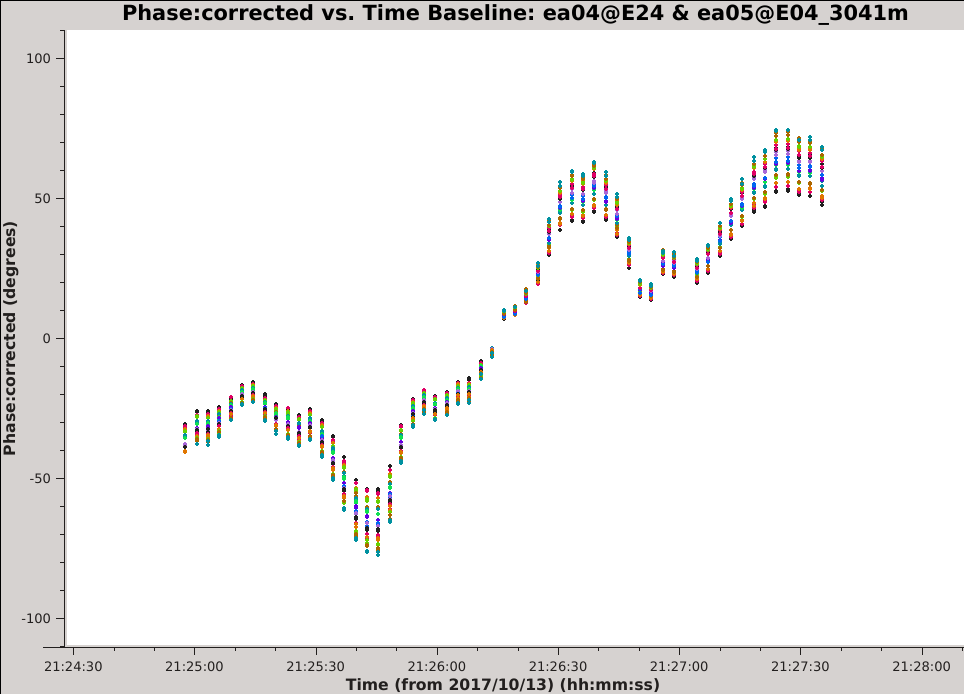

Since we have obtained the calibrated visibilities for the calibrator fields, we can now take this opportunity to investigate the phase stability of these observations. It is easier to do this inspection on a bright calibrator field where the signal-to-noise is high, and we will assume that the same degree of stability is present throughout the observation. In this section, we will characterize the magnitude and timescale of the phase fluctuations that we will be trying to correct for with selfcal.
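
One simple way to put a number on the magnitude of the fluctuations, once the phases of a single baseline over a single scan are in hand (however they are obtained), is their RMS about the scan mean. This is a minimal sketch, not a CASA task: the function name is hypothetical, and the 3 s integrations, 10-minute scan, and 10-degree sinusoidal wander in the example are synthetic. It uses a circular mean so that phases wrapping through +/-180 degrees are handled correctly; a plain standard deviation would be badly inflated in that case.

<source lang="python">
# plain Python with numpy
import numpy as np

def residual_phase_rms_deg(phase_deg):
    """RMS (deg) of phases about their circular mean, e.g. one baseline over one scan."""
    phase = np.deg2rad(np.asarray(phase_deg, dtype=float))
    mean_phase = np.angle(np.mean(np.exp(1j * phase)))     # circular mean, wrap-safe
    resid = np.angle(np.exp(1j * (phase - mean_phase)))    # residuals wrapped to (-pi, pi]
    return float(np.rad2deg(np.sqrt(np.mean(resid ** 2))))

# Synthetic example: 10-degree sinusoidal wander about 175 degrees, which wraps through +/-180.
t = np.arange(0.0, 600.0, 3.0)                             # 3 s integrations, 10 min scan
true_phase = 175.0 + 10.0 * np.sin(2 * np.pi * t / 60.0)
wrapped = (true_phase + 180.0) % 360.0 - 180.0             # as a phase plot would show it
print(residual_phase_rms_deg(wrapped))                     # ~7.07 = 10 / sqrt(2)
print(np.std(wrapped))                                     # naive: inflated by the wrapping
</source>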

Looking at the output of {{listobs_6.5.4}}, we see that there is a long scan on the amplitude calibrator, 3C286 (field ID 2). A feature of the VLA CASA pipeline is that it only applies scan-averaged calibration solutions to the calibrator fields, so it will not have corrected for any variations within a scan. We will plot the calibrated phase vs. time for a single antenna, paging by baseline:

<source lang="python">
plotms(vis='17B-197.sb34290063.eb34589992.58039.86119096065.ms', xaxis='time', yaxis='phase', ydatacolumn='corrected', field='2', antenna='ea05', correlation='RR,LL', avgchannel='64', iteraxis='baseline', coloraxis='spw')
</source>

[[Image:Selfcal 3c286 phase.png|400px|thumb|right|Figure 1: The phase vs. time on the ea04-ea05 baseline for field 2.]]

* '' vis='17B-197.sb34290063.eb34589992.58039.86119096065.ms' '': To plot visibilities from the pipeline calibrated MS.
* '' xaxis='time', yaxis='phase' '': To set time as the x-axis and phase as the y-axis of the plot.
* '' ydatacolumn='corrected' '': To plot the calibrated data (from the CORRECTED_DATA column).
* '' field='2' '': To select visibilities from field ID 2, i.e., the amplitude calibrator 3C286.
* '' antenna='ea05', iteraxis='baseline' '': To view a single baseline at a time. Any single antenna can be chosen here.
* '' correlation='RR,LL' '': To plot both parallel-hand correlation products.

Use the 'Next Iteration' button of the {{plotms_6.5.4}} GUI to cycle through additional baselines. You should see plots that look similar to the example image of the ea04-ea05 baseline (see Figure 1). The plotted data have a mean phase of zero because the pipeline calibration solutions have already been applied. The phase is seen to vary with time over a large range (in some cases by more than ±100 degrees), and the variations appear to be smooth over time scales of a few integrations. For a given baseline, we can see that all of the spectral windows and both correlations approximately follow the same trend with time. Additionally, the magnitude of the phase variations is larger for the higher-frequency spectral windows, a pattern that is consistent with changes in atmospheric density.
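
As a rough worked example of why the higher-frequency spectral windows show larger phase excursions: a non-dispersive (tropospheric) excess path length L produces a phase of 360° × L / λ = 360° × L ν / c, which grows linearly with frequency, whereas an ionospheric (dispersive) contribution would instead scale as 1/ν. The 5 mm path and the two frequencies below are illustrative values, not measurements from these data, and the function name is hypothetical.

<source lang="python">
# plain Python
SPEED_OF_LIGHT_M_S = 299792458.0

def path_phase_deg(excess_path_m, freq_hz):
    """Phase (deg) from a non-dispersive excess path: 360 * path / wavelength."""
    return 360.0 * excess_path_m * freq_hz / SPEED_OF_LIGHT_M_S

for freq_ghz in (4.5, 6.5):  # illustrative frequencies within C band
    print(freq_ghz, "GHz:", round(path_phase_deg(0.005, freq_ghz * 1e9), 1), "deg")
</source>

The same 5 mm path gives about 27 degrees at 4.5 GHz but about 39 degrees at 6.5 GHz, so every spectral window follows the same trend in time with an amplitude that scales with frequency.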

'''Optional extra steps:''' Create and inspect similar plots using scan 2 of the phase calibrator field (J1549+5038). Repeat for baselines to other antennas.

=== Splitting the Target Visibilities ===

CASA calibration tasks always operate by comparing the visibilities in the DATA column to the source model, where the source model is given by either the MODEL_DATA column, a model image or component list, or the default model of a 1 Jy point source at the phase center. For example, the calibration pipeline used the raw visibilities in the DATA column to solve for calibration tables and then created the CORRECTED_DATA column by applying these tables to the DATA column. With this context in mind, '''an essential step for self-calibration is to {{split_6.5.4}} the calibrated visibilities for the target we want to self-calibrate,''' meaning that the visibilities of the target source are copied from the CORRECTED_DATA column of the pipeline-calibrated MS to the DATA column of a new measurement set. Self-calibration will work in the same way as the initial calibration, i.e., by comparing the pipeline-calibrated visibilities (which are now in the DATA column of the new {{split_6.5.4}} MS) to a model, solving for self-calibration tables, and then creating a new CORRECTED_DATA column by applying the self-calibration tables. If we did not {{split_6.5.4}} the data, we would need to constantly re-apply all of the calibration tables from the pipeline (both on-the-fly when computing the self-calibration solutions and then again when applying the self-calibration), which would make the process much more cumbersome.

<source lang="python">
# in CASA
split(vis='17B-197.sb34290063.eb34589992.58039.86119096065.ms', datacolumn='corrected', field='1', correlation='RR,LL', outputvis='obj.ms')
</source>

* ''vis='17B-197.sb34290063.eb34589992.58039.86119096065.ms' '': The input visibilities for {{split_6.5.4}}. Here, these are the visibilities produced by the pipeline.
* ''datacolumn='corrected' '': To copy the calibrated visibilities from the input MS.
* ''field='1' '': The field ID of the target we want to self-calibrate.
* ''correlation='RR,LL' '': To select only the parallel-hand correlations. This will make the output data set smaller by about a factor of two.
* ''outputvis='obj.ms' '': The name of the new measurement set that {{split_6.5.4}} will create.

<!-- The output MS can be directly downloaded here: [http://www.aoc.nrao.edu/~jmarvil/selfcal_casaguide/obj.tar.gz '''obj.ms (3.4 GB)'''] -->

== The Initial Model ==

Now that we understand the data a bit better and know that we need to apply self-calibration, we will work our way through the steps outlined in the [[#Introduction|Introduction]] (create an initial model, create a calibration table, inspect the solutions, determine the best solution interval, {{applycal_6.5.4}}, {{split_6.5.4}}, next round). We begin by creating an initial model, which {{gaincal_6.5.4}} will compare to the data to derive the calibration table applied in the first round of self-calibration.

=== Preliminary Imaging ===

Prior to solving for self-calibration solutions we need to make an initial model of the target field, which we will generate by deconvolving the target field using the task {{tclean_6.5.4}}. There are several imaging considerations that we should address when making this model (discussed below). See the [https://casaguides.nrao.edu/index.php?title=VLA_CASA_Imaging VLA CASA Guide on Imaging] for more details about these parameters.

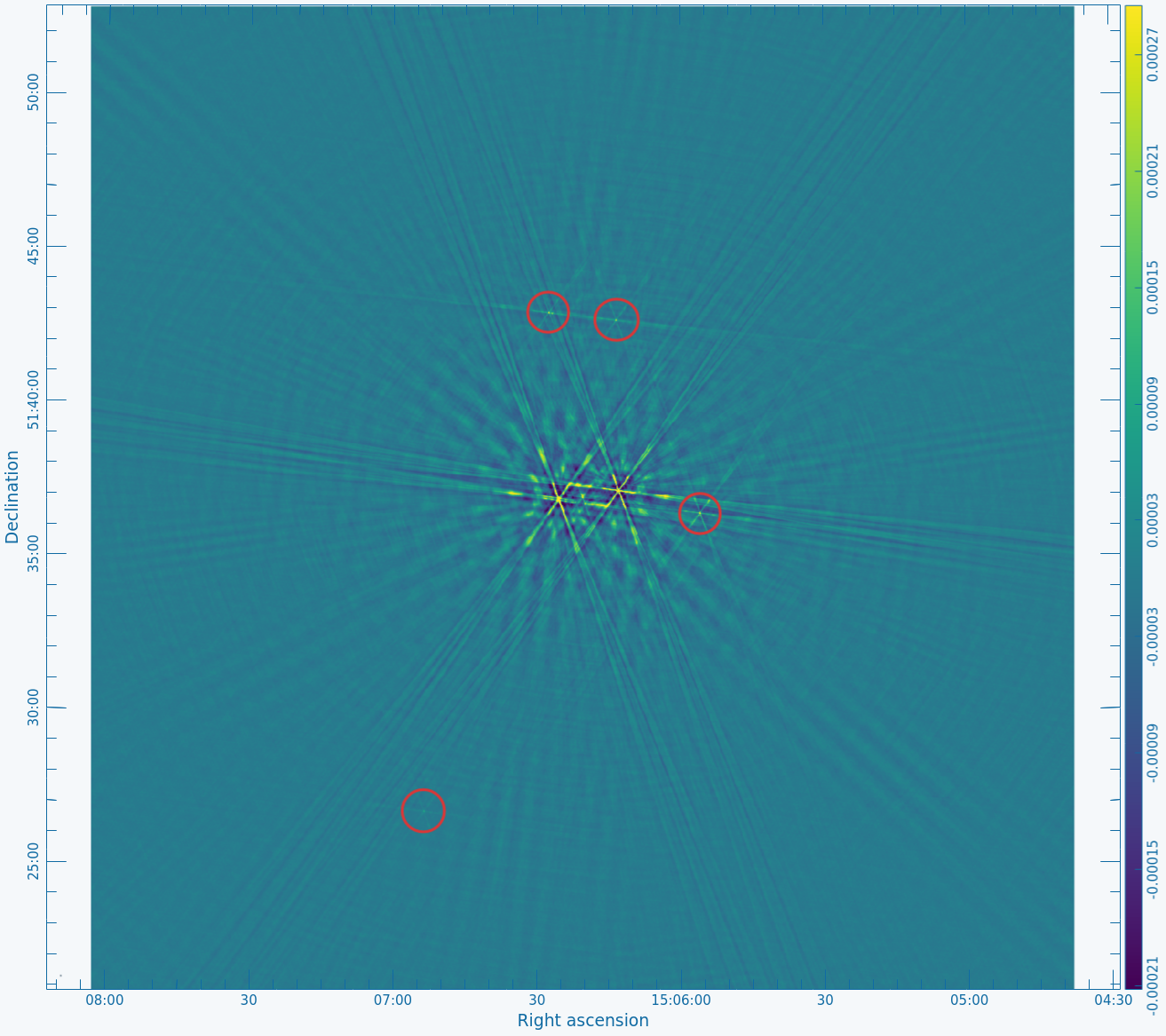

'''Image field-of-view''': Ideally, we want our self-calibration model to include all of the sources present in the data (e.g., sources near the edge of the primary beam or in the first sidelobe). This is typically achieved by making an image large enough to encompass all of the apparent sources, or by making a smaller image of the target plus one or more outlier fields. We will start with a large dirty image of the entire primary beam (PB) in order to better understand the sources in the galaxy cluster plus any background sources that will need to be cleaned. A rule of thumb for the VLA is that the FWHM of the PB in arcminutes is approximately 42 * (1 GHz / nu). At the center frequency of our C-band observations (5.5 GHz) the VLA primary beam is ~8' FWHM. In order to image the entire PB and the first sidelobe, we need an image field of view that is about four times larger, so we will choose a 32' field-of-view for our initial image (see [https://casadocs.readthedocs.io/en/v6.5.4/notebooks/synthesis_imaging.html#Wide-Field-Imaging Wide-Field-Imaging] (CASAdocs) and [https://science.nrao.edu/facilities/vla/docs/manuals/oss/performance/fov Field-of-View] (VLA OSS) for further discussion of primary beams).
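
The arithmetic in this paragraph, written out as a short sketch. Rounding the FWHM to a whole arcminute before multiplying by four mirrors the ~8' to 32' choice in the text and is not a CASA rule; the function name is hypothetical.

<source lang="python">
# plain Python
def vla_pb_fwhm_arcmin(freq_ghz):
    """Rule-of-thumb VLA primary-beam FWHM in arcminutes: 42 * (1 GHz / nu)."""
    return 42.0 / freq_ghz

fwhm = vla_pb_fwhm_arcmin(5.5)      # ~7.6 arcmin, quoted as ~8' in the text
fov = 4 * round(fwhm)               # four times the rounded FWHM -> 32 arcmin
print(round(fwhm, 2), fov)
</source>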

'''Primary beam mask''': By default, the 'pblimit' parameter adds an image mask wherever the primary beam response is less than 20% (pblimit=0.2). We want to turn off this mask, as it would prevent us from viewing the image over our desired field of view. The mask is turned off by giving 'pblimit' a negative value. For the imaging we will be doing (i.e., with the 'standard', 'widefield', and 'wproject' gridders), the magnitude of this parameter is unimportant (but do NOT use 1, -1, or 0).
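
To see why the default mask matters for a 32' image, the sketch below estimates how much of the field would fall below the default pblimit of 0.2. It assumes a circular Gaussian primary beam with the rule-of-thumb FWHM of 42/5.5 ≈ 7.6'; this is only an approximation (the real VLA beam is not Gaussian, and CASA uses its own beam models), and the function names are hypothetical. Under that assumption the 20% level falls at a radius of roughly 5.8', so roughly 90% of the 32' image would be masked.

<source lang="python">
# plain Python with numpy
import numpy as np

def gaussian_pb(r_arcmin, fwhm_arcmin):
    """Gaussian approximation to the primary-beam response at radius r."""
    return np.exp(-4.0 * np.log(2.0) * (r_arcmin / fwhm_arcmin) ** 2)

def radius_at_level(level, fwhm_arcmin):
    """Radius where gaussian_pb drops to `level` (e.g. 0.2 for the default pblimit)."""
    return fwhm_arcmin * np.sqrt(np.log(1.0 / level) / (4.0 * np.log(2.0)))

def masked_fraction(level, fwhm_arcmin, fov_arcmin, npix=1000):
    """Fraction of a square image whose Gaussian PB response is below `level`."""
    x = (np.arange(npix) + 0.5) / npix * fov_arcmin - fov_arcmin / 2.0  # pixel centers
    xx, yy = np.meshgrid(x, x)
    return float(np.mean(gaussian_pb(np.hypot(xx, yy), fwhm_arcmin) < level))

fwhm = 42.0 / 5.5
print(radius_at_level(0.2, fwhm))          # ~5.8 arcmin
print(masked_fraction(0.2, fwhm, 32.0))    # ~0.9 of the 32' image
</source>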

'''Image cell size''': There are a few different ways to estimate the synthesized beam size for these C-band observations taken in the B configuration. For one, we can use the [https://science.nrao.edu/facilities/vla/docs/manuals/oss/performance/resolution Resolution table] (VLA OSS), which gives a resolution of 1.0". It is recommended to choose a cell size that results in at least 5 image pixels across the FWHM of the synthesized beam; therefore, we require a cell size of 0.20"/pixel or smaller.

'''Image size in pixels''': We can convert our desired field-of-view to pixels using the cell size: 32' * (60" / 1') * (1 pixel / 0.20") = 9600 pixels.
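
The cell-size and image-size arithmetic above as a small sketch; the helper name and its ''pix_per_beam'' argument are hypothetical, with the default of 5 pixels across the beam taken from the recommendation in the text.

<source lang="python">
# plain Python
def cell_and_imsize(beam_arcsec, fov_arcmin, pix_per_beam=5):
    """Cell size (arcsec) giving `pix_per_beam` pixels across the beam, and image size in pixels."""
    cell_arcsec = beam_arcsec / pix_per_beam
    imsize = int(round(fov_arcmin * 60.0 / cell_arcsec))
    return cell_arcsec, imsize

cell, imsize = cell_and_imsize(beam_arcsec=1.0, fov_arcmin=32.0)
print(cell, imsize)   # 0.2 9600
</source>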

<!-- However, when making large images, CASA will run faster if we choose an image size that is optimized for the FFT algorithm. The recommendation is to choose an image size that can be expressed as 5*2^n*3^m. This is so that after CASA applies an internal padding factor of 1.2, the images being used in the FFTs can be broken down into small matrices. So we will choose an optimized image size of 9720 (n=3,m=5) for this image. -->

'''Wide-field effects''': Large images may require additional consideration due to non-coplanar baselines (the W-term). In CASA, this is usually addressed by turning on the W-project algorithm. See [https://casadocs.readthedocs.io/en/v6.5.4/notebooks/synthesis_imaging.html#Wide-Field-Imaging Wide-Field-Imaging] (CASAdocs) for a more detailed discussion.

We can estimate whether our image requires W-projection by calculating the recommended number of w-planes using this formula taken from page 392 of the [https://ui.adsabs.harvard.edu/abs/1999ASPC..180..383P/abstract NRAO 'white book'],

<math>
N_\mathrm{wprojplanes} = \left( \frac{I_\mathrm{FOV}}{\theta_\mathrm{syn}} \right) \times \left( \frac{I_\mathrm{FOV}}{1~\mathrm{radian}} \right)
</math>

where I_FOV is the image field-of-view (32') and theta_syn is the synthesized beam size (1.0"). Working in units of arcseconds, ((32 x 60) / 1.0) * ((32 x 60) / 206265) evaluates to N_wprojplanes ~ 18, so we will turn on the w-project algorithm with ''gridder='widefield' '' and set ''wprojplanes=18''. If the recommended number of planes had been <= 1, then we would not have needed the wide-field gridder, and we could have used ''gridder='standard' '' instead.
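
The w-plane estimate and the gridder decision described in this paragraph, as a small sketch. The recommended number of planes is (I_FOV / theta_syn) x (I_FOV / 206265) with both angles in arcseconds. The helper names are hypothetical, and rounding to the nearest integer is a choice made here for illustration; the returned dictionary uses the real tclean parameter names ''gridder'' and ''wprojplanes''.

<source lang="python">
# plain Python
ARCSEC_PER_RADIAN = 206265.0

def n_wprojplanes(fov_arcsec, beam_arcsec):
    """Recommended number of w-planes: (I_FOV / theta_syn) * (I_FOV / 1 radian)."""
    return (fov_arcsec / beam_arcsec) * (fov_arcsec / ARCSEC_PER_RADIAN)

def choose_gridder(fov_arcsec, beam_arcsec):
    """Mirror the rule in the text: 'standard' if <= 1 plane is needed, else 'widefield'."""
    n = n_wprojplanes(fov_arcsec, beam_arcsec)
    if n <= 1:
        return dict(gridder='standard')
    return dict(gridder='widefield', wprojplanes=int(round(n)))

print(n_wprojplanes(32 * 60, 1.0))     # ~17.9
print(choose_gridder(32 * 60, 1.0))    # {'gridder': 'widefield', 'wprojplanes': 18}
</source>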

We will now create a preliminary dirty image using these parameters.

| Line 205: | Line 210: | ||

<source lang="python">
# in CASA
tclean(vis='obj.ms', imagename='obj.dirty.9600pix', datacolumn='data', imsize=9600, cell='0.2arcsec', pblimit=-0.1, gridder='widefield', wprojplanes=18)
</source>

*'' datacolumn='data' '': To image the visibilities in the measurement set's DATA column.

| Line 214: | Line 219: | ||

*''wprojplanes=18'': The number of w-planes to use for w-projection.

While running {{tclean_6.5.4}}, you may notice a warning message that looks like this:

<pre style="background-color:lightgrey;">
task_tclean::SIImageStore::restore (file casa-source/code/synthesis/ImagerObjects/SIImageStore.cc, line 2245) Restoring with an empty image model. Only residuals will be processed to form the output restored image.
</pre>

This is expected when creating a dirty image. The model is blank because we haven't done any deconvolution in this {{tclean_6.5.4}} execution (i.e., niter=0) and we haven't started with a preexisting model (e.g., by using the 'startmodel' parameter or by resuming a previous {{tclean_6.5.4}} execution).

After {{tclean_6.5.4}} has finished, open the dirty image in CARTA. On NRAO machines, open a new terminal tab, cd to the working directory, and type:

<source lang="python">
# in terminal
carta --no_browser
</source>

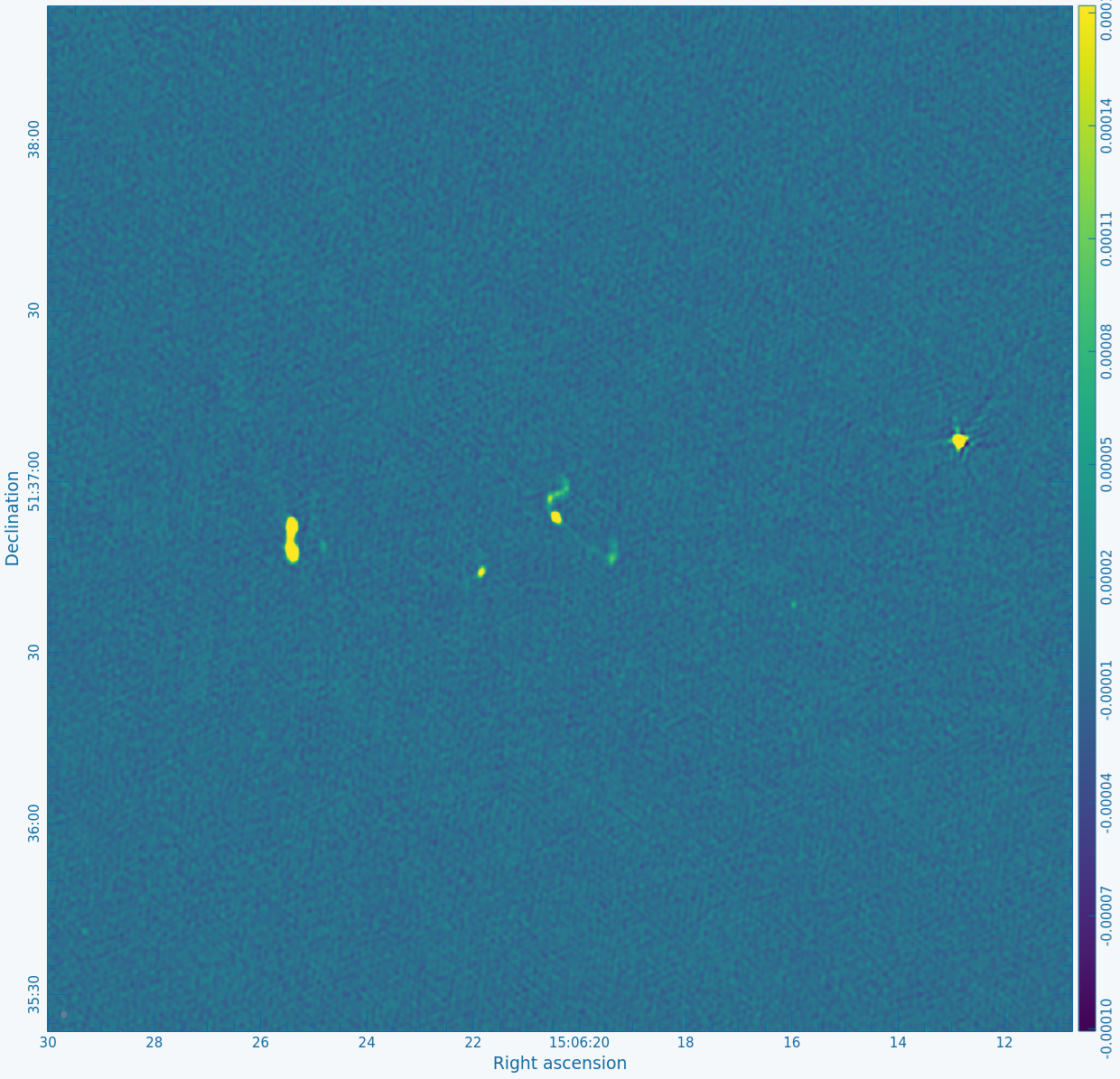

Copy the output URL into a browser to view your CARTA session, then select "obj.dirty.9600pix.image" to load it. Figure 2A below shows the resulting dirty image, and Figure 2B shows a zoom-in of the central region of the image.

Several outlying sources are detectable; the four brightest are marked with red circles. Use the red Min and Max sliders in the Render Configuration tool to adjust the image display. The example below uses linear scaling with the viridis colormap.

{|
|- valign="top" ! scope="row" |
|| [[Image:MOO_1506+5136_dirty_CASA6.4.1.png|400px|thumb|Figure 2A: The 32' dirty image. The locations of the four brightest far-field sources are marked with red circles.]]
|| [[Image:MOO_1506+5136_dirty_zoom_CASA6.4.1.png|400px|thumb|Figure 2B: The same image as in Figure 2A after zooming in on the central objects.]]
|}

| Line 245: | Line 250: | ||

* Proceed with the self-calibration procedure using a small field-of-view that includes only the central sources, ignoring the outlying sources.

In this guide, we will first choose to ignore the outlying sources in order to present a simplified self-calibration procedure. This was also the choice made for the scientific image and analysis, because the artifacts from the outlying sources did not strongly affect the area of scientific interest (the inner 3'). For more information about the other options described above, see the [https://casaguides.nrao.edu/index.php?title=VLA_CASA_Imaging VLA Imaging CASAguide] and the {{tclean_6.5.4}} task documentation. More advanced techniques, such as UV-subtraction, peeling, or direction-dependent calibration, are sometimes used for outlying sources, but these are outside the scope of this guide.

=== Creating the Initial Model ===

| Line 253: | Line 258: | ||

'''Image field-of-view''': For this science case we are only interested in sources within ~1.5' radius of the cluster center. Since we have chosen to ignore the outlying sources at this stage, we will proceed with an image field-of-view of 3'.
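The ''imsize'' values used in this guide (9600 pixels for 32' and 900 pixels for 3') follow from dividing the field of view by the 0.2" cell size. The sketch below assumes the common rule of thumb of about five pixels across the 1.0" synthesized beam; that rule is our assumption and is not stated in this section. The helper names are hypothetical.

```python
def cell_size_arcsec(beam_arcsec: float, pixels_per_beam: int = 5) -> float:
    """Pixel size that samples the synthesized beam with pixels_per_beam pixels."""
    return beam_arcsec / pixels_per_beam


def image_size_pixels(fov_arcmin: float, cell_arcsec: float) -> int:
    """Number of pixels needed to span fov_arcmin with square cell_arcsec pixels."""
    return round(fov_arcmin * 60.0 / cell_arcsec)


cell = cell_size_arcsec(1.0)         # 0.2 arcsec, i.e. cell='0.2arcsec'
print(image_size_pixels(32, cell))   # 9600
print(image_size_pixels(3, cell))    # 900
```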

'''Wide-field effects''': We repeat the calculation of wprojplanes from the Initial Imaging section using our new field of view of 3'. This gives N_wprojplanes ≈ (180 / 1.0) × (180 / 206265) ≈ 0.16, which is below 1, so we turn off the correction for non-coplanar baselines by setting '' gridder='standard' ''.

'''Wide-band imaging''': Our images will combine data from all spectral windows, spanning a frequency range of about 4.5-6.5 GHz (a fractional bandwidth of about 36%). Each source's amplitude may vary substantially over this frequency range, because of the source's intrinsic spectral variation and/or the frequency dependence of the VLA's primary beam. To mitigate these errors during deconvolution we will use '' deconvolver='mtmfs' '' and ''nterms=2''. For further discussion of wide-band imaging, see [https://casadocs.readthedocs.io/en/v6.5.4/notebooks/synthesis_imaging.html#Wide-Band-Imaging Wide-Band-Imaging] (CASA docs) and the [http://casaguides.nrao.edu/index.php/VLA_CASA_Imaging VLA Imaging CASAguide].

'''Image deconvolution''': We will need to deconvolve (clean) this image in order to produce a model of the field. We want to control the cleaning depth and masking interactively, so we set ''interactive=True''. We must also choose the number of clean iterations with the ''niter'' parameter. A suggested starting value is ''niter=1000'', but this can be changed interactively after we start cleaning.

'''Imaging weights''': When constructing the initial model, especially when there are large image artifacts, it is recommended to use "robust" imaging weights. In CASA, this is enabled with '' weighting='briggs' '' and a value for the ''robust'' parameter between -2 and +2. Values of robust near +2 (approximately natural weighting) often result in large positive PSF sidelobes, while values near -2 (approximately uniform weighting) often produce large negative PSF sidelobes. Since many types of image artifacts scale with PSF sidelobe levels, a reasonable compromise is often around ''robust=0''.

'''Saving the model''': After deconvolution, there are a couple of options for writing the model back to the measurement set, controlled by the ''savemodel'' parameter. The default is ''none'', which will not save the model. <u>It is essential that this default is changed, or else the selfcal procedure will not work properly.</u> The option '' savemodel='virtual' '' saves a copy of the image to the SOURCE subtable of the measurement set, to be used later for on-the-fly model visibility prediction. This option is sometimes recommended for very large data sets but is not generally recommended. The other option, '' savemodel='modelcolumn' '', is the recommended setting and the one that we will use in this guide. This option predicts the model visibilities after cleaning and saves the result to the MODEL_DATA column.

Now we are ready to create our first clean image. This image will provide the starting model that is required by the calibration routines, and it will showcase why we need self-calibration for these data.

| Line 267: | Line 272: | ||

<source lang="python">
# in CASA
tclean(vis='obj.ms', imagename='obj.prelim_clean.3arcmin', datacolumn='data', imsize=900, cell='0.2arcsec', pblimit=-0.1, gridder='standard', deconvolver='mtmfs', nterms=2, niter=1000, interactive=True, weighting='briggs', robust=0, savemodel='modelcolumn')
</source>

*'' datacolumn='data' '': To image the visibilities in the measurement set's DATA column.

| Line 282: | Line 287: | ||

* ''savemodel='modelcolumn' '': To enable writing the MODEL_DATA column to the MS after imaging. '''**important**'''

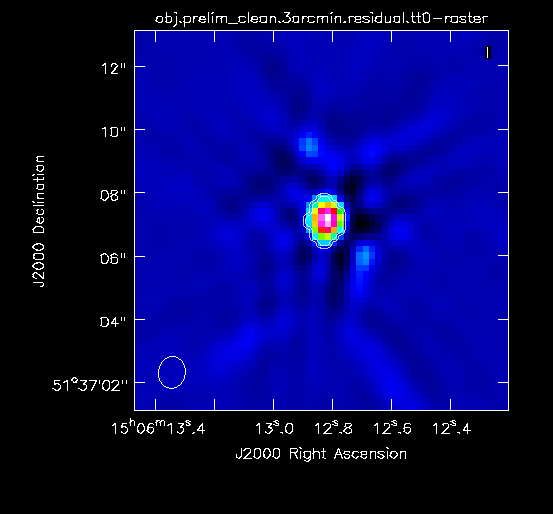

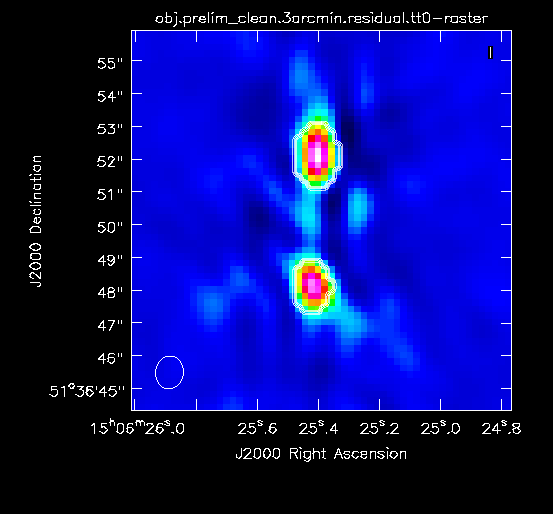

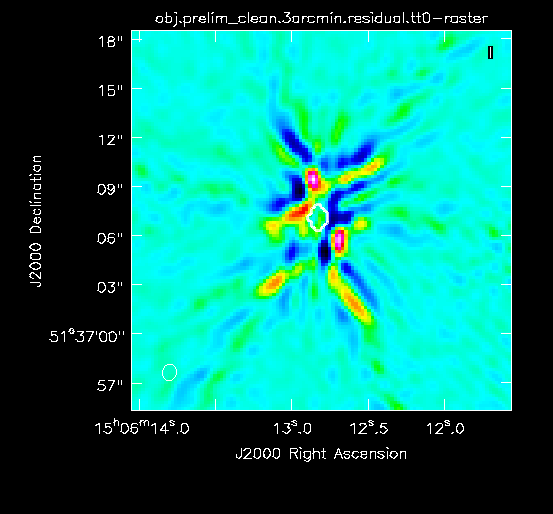

Interactive {{tclean_6.5.4}} will open the image in a CASA viewer window. Select All Channels and All Polarizations. Then place conservative circular masks around each of the strong sources in turn, starting with the brightest:

* First mask the rightmost source (Figure 3A), press the green circle arrow in the CASA viewer to perform one cycle of cleaning, and wait for focus to return to the viewer. The viewer will then show you the current residual image (i.e., the image after subtracting some flux from within the first mask).
* Then mask the leftmost double-lobed source (Figure 3B) and press the green circle arrow in the CASA viewer. This will perform the next cleaning cycle, after which focus will return to the viewer. Cleaning has now taken place inside the masks of both sources, and the brightest source in the new residual image will be in the middle.

| Line 288: | Line 293: | ||

At this point, the residual emission is at about the same level as the artifacts, so we stop cleaning (press the red X in the CASA viewer; see Figure 3D for an example of the artifacts). '''It is strongly recommended to only mask and clean emission that is believed to be real so as not to include artifacts in the model.''' <!--At this point we will have used a total of about 200 iterations. -->

<!--
{|
|- valign="top"
! scope="row" | [[Image:prelim_clean_1.png|300px|thumb|right|Figure 3A: The mask for rightmost source.]]
|| [[Image:prelim_clean_2.png|300px|thumb|right|Figure 3B: The mask for the left double-lobed source.]]
|| [[Image:prelim_clean_3_source.png|300px|thumb|right|Figure 3C: The mask for the middle source.]]
|| [[Image:prelim_clean_4.png|300px|thumb|right|Figure 3D: The rightmost source after 3 iterations of adding masks. The emission around the current mask is characteristic of artifacts.]]
|| [[Image:prelim_clean.png|300px|thumb|right|Figure 4A: The preliminarily cleaned image.]]
|}
-->

{|
|- valign="top" ! scope="row" |
|| [[Image:prelim_clean_1.png|300px|thumb|right|Figure 3A: The mask for rightmost source.]] || [[Image:prelim_clean_2.png|300px|thumb|right|Figure 3B: The mask for the left double-lobed source.]] || [[Image:prelim_clean_3_source.png|300px|thumb|right|Figure 3C: The mask for the middle source.]] || [[Image:prelim_clean_4.png|300px|thumb|right|Figure 3D: The rightmost source after 3 iterations of adding masks. The emission around the current mask is characteristic of artifacts.]]
|}

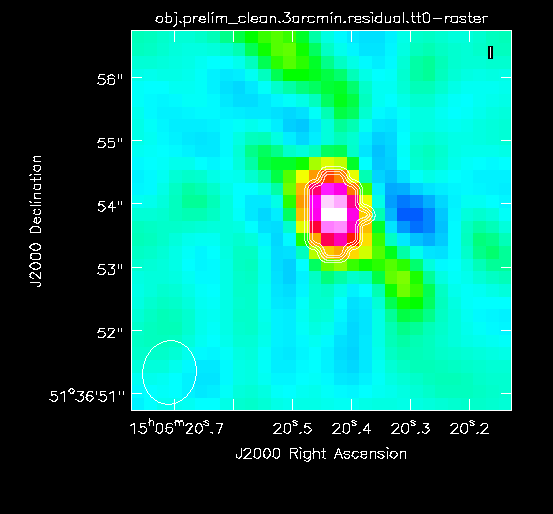

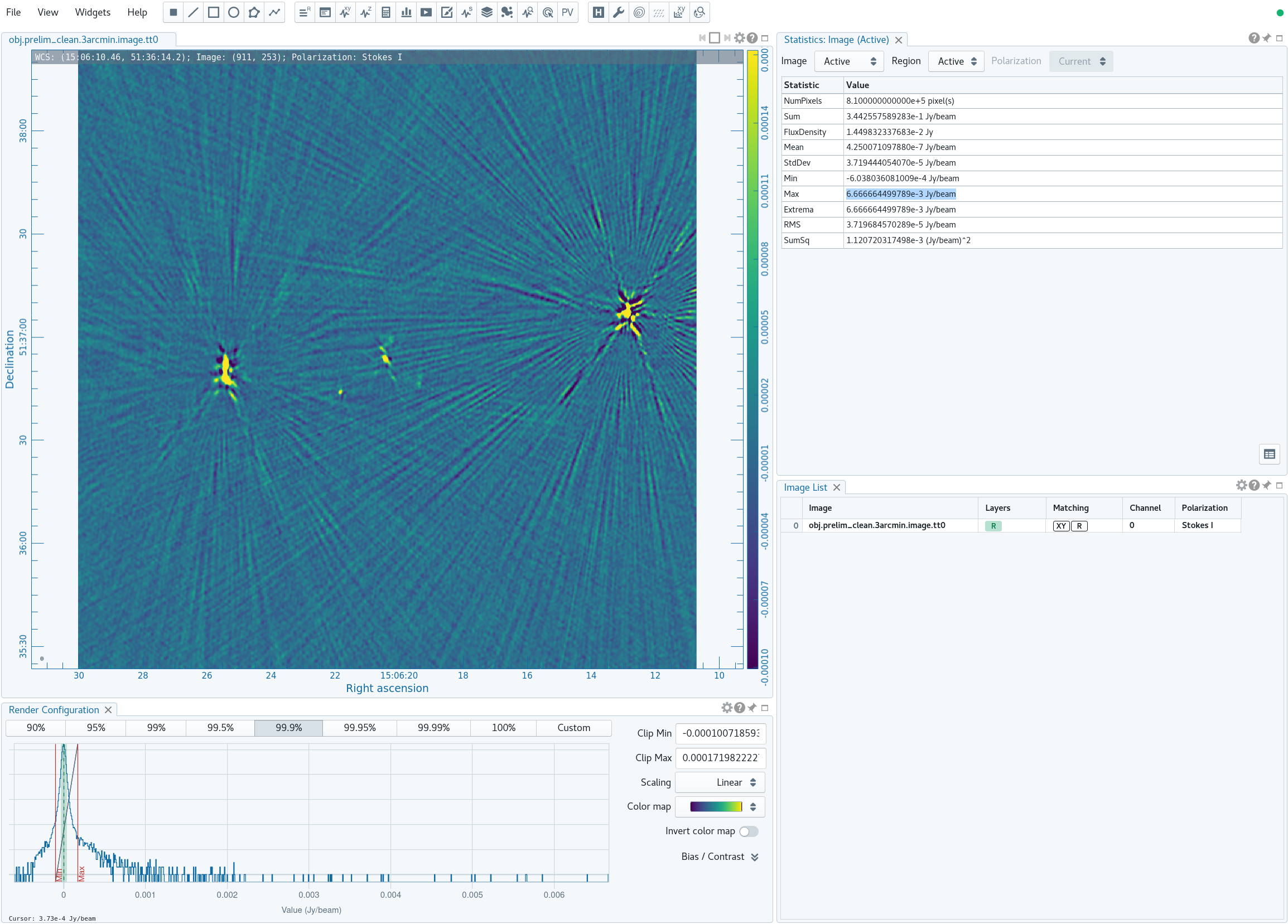

Now, in CARTA, we will examine the resulting clean image (obj.prelim_clean.3arcmin.image.tt0; see Figure 4A), which we will try to improve through self-calibration.

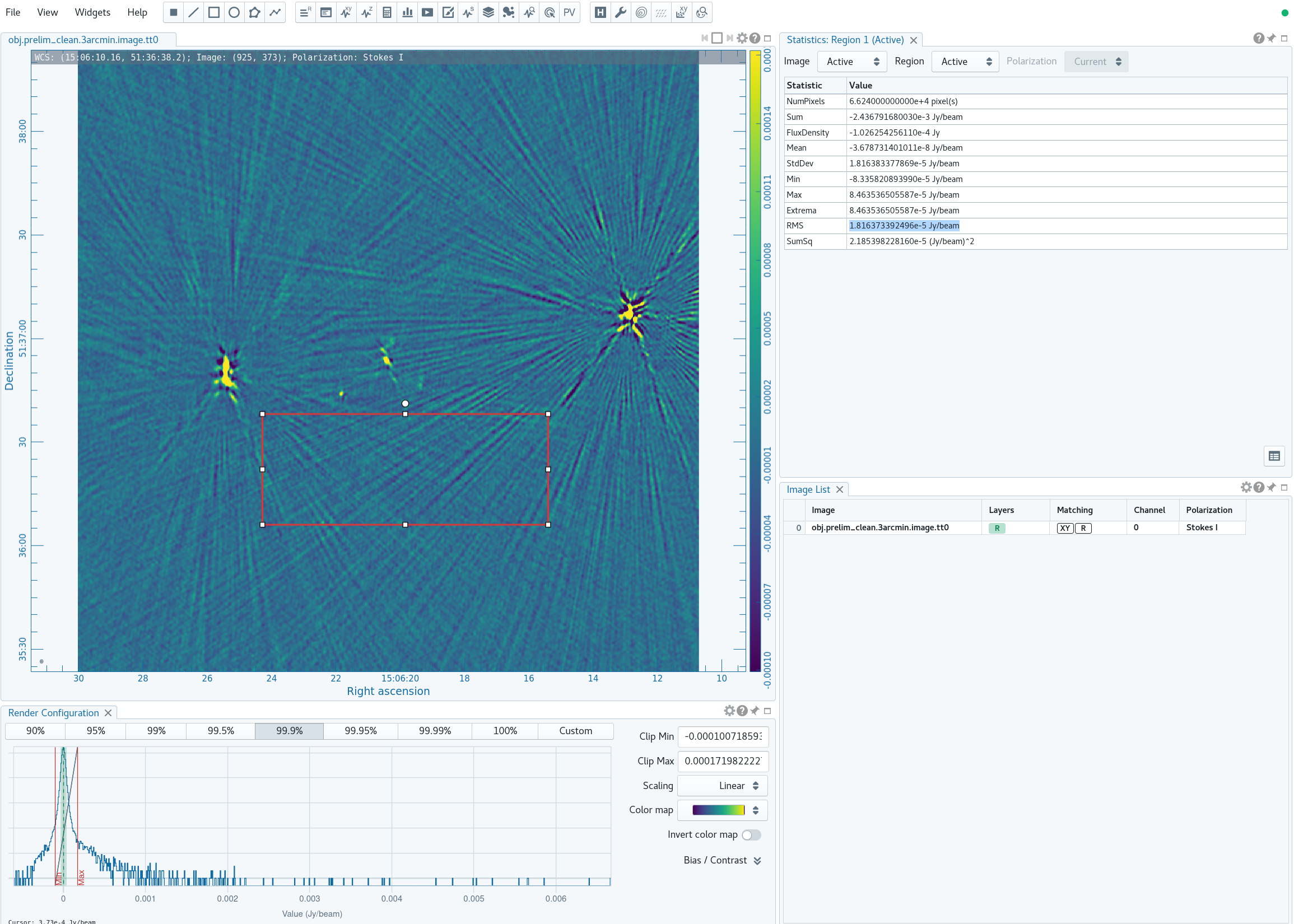

For reference, we will measure some simple image figures of merit to compare with the image after self-calibration. Open the Statistics widget (the calculator icon).

First, we measure the peak intensity (Max) in the image: 6.67 mJy. Next, draw a large region that does not contain any sources (see Figure 4B); the Statistics widget then displays the image noise (RMS) of the active region, 18.2 μJy. The ratio of the peak to the noise, 6.67 mJy / 18.2 μJy ≈ 366, is called the dynamic range.

{|
|- valign="top" ! scope="row" |
|| [[Image:MOO_1506+5136_prelim_clean_max_CASA6.4.1.png|500px|thumb|left|Figure 4A: The preliminarily cleaned image. The statistics widget displays the Max intensity of the entire image.]]
<!-- || [[Image:prelim_clean_max.png|400px|thumb|left|Figure 4B: Measuring the maximum value by drawing a rectangular region over the entire image and then looking at the statistics tab of the regions section. If the regions section is not visible, you can enable it by going to View -> Regions under the viewer's main menu bar.]] -->
|| [[Image:MOO_1506+5136_prelim_clean_rms_CASA6.4.1.png|500px|thumb|left|Figure 4B: Measuring the source-free RMS by drawing a rectangular region near the source of interest and large enough to measure unbiased statistics (i.e., many synthesized beams), but avoiding any obvious real sources of emission.]]
|}

=== Verifying the Initial Model ===

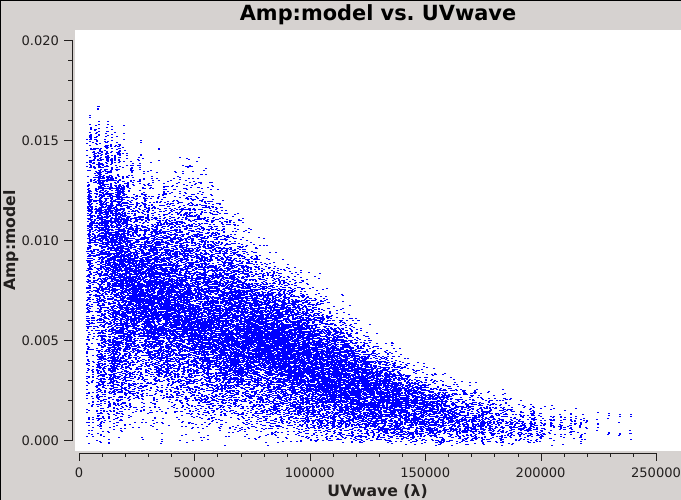

There have been reported instances where CASA fails to save the model visibilities when using interactive clean. It is crucial that the model is saved correctly; otherwise, self-calibration will use the default model of a 1 Jy point source at the phase center. The default model may be very different from your target field, and we do not want to carry out the self-cal procedure with this incorrect model. Therefore, it is recommended to verify that the model has been saved correctly by inspecting the model visibilities.

<source lang="python">
plotms(vis='obj.ms', xaxis='UVwave', yaxis='amp', ydatacolumn='model', avgchannel='64', avgtime='300')
</source>

[[Image:Moo_model_uvwave.png|300px|thumb|right| Figure 5: The model visibilities.]]

* '' vis='obj.ms' '': To plot visibilities from the {{split_6.5.4}} MS.
* '' xaxis='UVwave', yaxis='amp' '': To set UV-distance in wavelengths as the x-axis and amplitude as the y-axis of the plot.
* '' ydatacolumn='model' '': To plot the model visibilities (from the MODEL_DATA column).

| Line 328: | Line 336: | ||

* '' avgtime='300' '': To average in time in chunks of 300 seconds.

The resulting plot should resemble Figure 5 on the right. This plot shows that some baselines see up to 15 mJy of flux density, but that the source becomes resolved on the longer baselines. Note that the visibilities plotted here are for the RR and LL correlations, since we dropped RL and LR with the {{split_6.5.4}} task. Had we retained RL and LR, they would equal zero, since we only made a Stokes I model image.

The plotted model is clearly not the default model of a 1 Jy point source (if it were, all amplitudes would be 1 Jy), so we have verified that {{tclean_6.5.4}} has correctly written the MODEL_DATA column of the MS.

== First Round of Self-Calibration ==

| Line 337: | Line 344: | ||

=== Solving for the First Self-Calibration Table ===

For this first round of selfcal, we will compare the model we just created with the data to derive a table of corrections to apply to the data. We are now ready to solve for these first selfcal solutions.

We will explore various parameters of the task {{gaincal_6.5.4}} to learn more about the data and settle on the optimal parameters.

The most relevant parameters are discussed below:

'''Solution interval''': This is controlled with the ''solint'' parameter and is one of the most fundamental parameters for self-calibration. Its value can range from '' 'int' '', a single integration (3 seconds for this data set), up to '' 'inf' '' (infinite), meaning either an entire scan or the entire observation, depending on the value of the ''combine'' parameter. We typically want to choose the shortest solution interval for which we can achieve adequate signal-to-noise in the calibration solutions.

'''Data combination''': The data can be combined in multiple ways to improve signal-to-noise, but if the target source is bright enough to obtain good calibration solutions on a short timescale without data combination, then these options are not necessary. However, if low signal-to-noise messages appear across antennas, times, and SPWs, then both parallel-hand correlations, if present, can be combined by setting '' gaintype='T' '' instead of '' gaintype='G' ''; this will generally increase the signal-to-noise by a factor of about √2. If {{gaincal_6.5.4}} still produces many low signal-to-noise messages, one can try to combine multiple SPWs with '' combine='spw' '' if the SPWs are at similar frequencies, which generally increases the solution signal-to-noise by the square root of the number of SPWs combined. Combining scans during self-calibration is not usually recommended.
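The expected SNR gains from combining data can be sketched the same way, assuming independent noise in each polarization and SPW. The input SNR, the choice of 8 SPWs, and the helper name are hypothetical and only illustrate the square-root scaling described above.

```python
import math


def combined_snr(snr_single: float, n_pol: int = 1, n_spw: int = 1) -> float:
    """Expected SNR after combining n_pol correlations and n_spw spectral windows.

    Assumes independent noise in each chunk, so SNR scales as sqrt(n_pol * n_spw).
    """
    return snr_single * math.sqrt(n_pol * n_spw)


snr_g = 2.0                                         # hypothetical per-correlation, per-SPW SNR
snr_t = combined_snr(snr_g, n_pol=2)                # gaintype='T'
snr_t_spw = combined_snr(snr_g, n_pol=2, n_spw=8)   # gaintype='T' plus combine='spw' over 8 SPWs
print(round(snr_t, 2), round(snr_t_spw, 2))         # 2.83 8.0
```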

'''Amplitude and phase correction''': Because large phase errors will result in incoherent averaging and lead to lower amplitudes, we always want to start with phase-only self-calibration. We achieve this by setting '' calmode='p' ''. In later rounds of selfcal, after the phases have been well corrected, we can try '' calmode='ap' '' to include an amplitude component in the solutions. When solving for amplitudes, we may also want to consider normalizing them with the ''solnorm'' parameter.
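The reason phase errors lower amplitudes can be seen by vector-averaging unit-amplitude visibilities whose phases differ. This illustrative sketch uses only the Python standard library. The phases and the helper name are made up for the example.

```python
import cmath
import math


def vector_average_amplitude(phases_deg, amplitude=1.0):
    """Amplitude of the vector (complex) average of equal-amplitude visibilities."""
    total = sum(cmath.rect(amplitude, math.radians(p)) for p in phases_deg)
    return abs(total) / len(phases_deg)


print(vector_average_amplitude([0, 0, 0, 0]))        # 1.0, phases aligned
print(vector_average_amplitude([30, -30, 30, -30]))  # ~0.866, i.e. cos(30 deg)
print(vector_average_amplitude([0, 90, 180, 270]))   # ~0, fully incoherent
```

An amplitude solve on such data would try to "fix" the apparent amplitude loss, which is why the phases are corrected first.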

'''Reference antenna''': As with standard calibration, we need to choose a reference antenna for the calibration solutions. It is generally recommended to choose one that is near the center of the array but not heavily flagged. To decide which one to use, run {{plotants_6.5.4}} to plot the antenna positions and choose one near the center. To find the percentage of data flagged per antenna, you can run {{flagdata_6.5.4}} with ''mode='summary' ''.
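The selection logic can be sketched in Python. The ''flag_summary'' argument is assumed to have the shape of the ''antenna'' entry of the dictionary returned by {{flagdata_6.5.4}} with ''mode='summary' '', i.e., per-antenna ''flagged'' and ''total'' counts. The antenna positions, the 20% flagging threshold, and the helper ''pick_refant'' are hypothetical. {{plotants_6.5.4}} produces a plot rather than a table of positions.

```python
import math


def pick_refant(positions_m, flag_summary, max_flag_frac=0.2):
    """Pick the least-offset antenna from the array centroid that is not heavily flagged.

    positions_m:  {antenna_name: (x, y)} horizontal offsets in metres (hypothetical input).
    flag_summary: {antenna_name: {'flagged': ..., 'total': ...}}, shaped like the
                  'antenna' entry of a flagdata(mode='summary') result (assumption).
    """
    cx = sum(x for x, _ in positions_m.values()) / len(positions_m)
    cy = sum(y for _, y in positions_m.values()) / len(positions_m)
    candidates = []
    for name, (x, y) in positions_m.items():
        stats = flag_summary.get(name)
        if not stats or stats["total"] == 0:
            continue  # no flagging information: skip this antenna
        if stats["flagged"] / stats["total"] > max_flag_frac:
            continue  # too heavily flagged to be a reliable reference
        candidates.append((math.hypot(x - cx, y - cy), name))
    if not candidates:
        raise ValueError("no antenna passes the flagging threshold")
    return min(candidates)[1]


positions = {"ea01": (0.0, 0.0), "ea24": (1.0, 1.0), "ea02": (-100.0, 0.0), "ea15": (100.0, 0.0)}
flags = {
    "ea01": {"flagged": 50.0, "total": 100.0},  # 50% flagged, so rejected
    "ea24": {"flagged": 5.0, "total": 100.0},
    "ea02": {"flagged": 0.0, "total": 100.0},
    "ea15": {"flagged": 0.0, "total": 100.0},
}
print(pick_refant(positions, flags))  # ea24
```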

'''Signal-to-noise ratio (SNR)''': The default minimum SNR in {{gaincal_6.5.4}} is 3.0, but this can be adjusted with the ''minsnr'' parameter. Solutions below this minimum are flagged in the output calibration table. Sometimes we want to increase this minimum, e.g., to 5.0, to reject noisy solutions. Alternatively, we may want to lower it, e.g., to zero, usually for inspection purposes.
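To see how the choice of ''minsnr'' trades completeness for reliability, the minimal sketch below counts the fraction of solutions that fall below a threshold. The SNR values and the helper name are made up for illustration.

```python
def flagged_fraction(snrs, minsnr):
    """Fraction of solutions with SNR below minsnr (these would be flagged)."""
    if not snrs:
        raise ValueError("no solutions supplied")
    return sum(1 for s in snrs if s < minsnr) / len(snrs)


example_snrs = [1.2, 2.5, 3.4, 4.8, 6.0, 9.5, 12.0, 15.3]  # made-up solution SNRs
for threshold in (0.0, 3.0, 5.0):
    print(threshold, flagged_fraction(example_snrs, threshold))
```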

We will now create our initial self-calibration table. This will not be the final table for the first round of self-calibration, but rather a temporary table that we will inspect to help determine the optimal parameters.

<source lang="python">
# in CASA
gaincal(vis='obj.ms', caltable='selfcal_initial.tb', solint='int', refant='ea24', calmode='p', gaintype='G', minsnr=0)
</source>

*''caltable='selfcal_initial.tb':'' Give each calibration table an intuitive name so the tables are easy to distinguish.
*''solint='int':'' We choose a solution interval equal to the integration time (3 seconds) in order to get a sense of the structure and timescale of the variations.
*''refant='ea24':'' The chosen reference antenna.

| Line 362: | Line 369: | ||

*''minsnr=0'': To turn off flagging of low-SNR solutions, so that we can inspect all the solutions.

You may see several messages printed to the terminal while {{gaincal_6.5.4}} is running, e.g.,

<pre style="background-color:lightgrey;">
</pre>

This means that all the input data were flagged for this solution interval. This is generally harmless unless there are far fewer solutions in the output table than you were expecting.

It is recommended to check the logger messages written by {{gaincal_6.5.4}} to find the total number of solution intervals, for example:

<pre style="background-color:#fffacd;">
</pre>

This shows that {{gaincal_6.5.4}} successfully found solutions for most of the solution intervals.

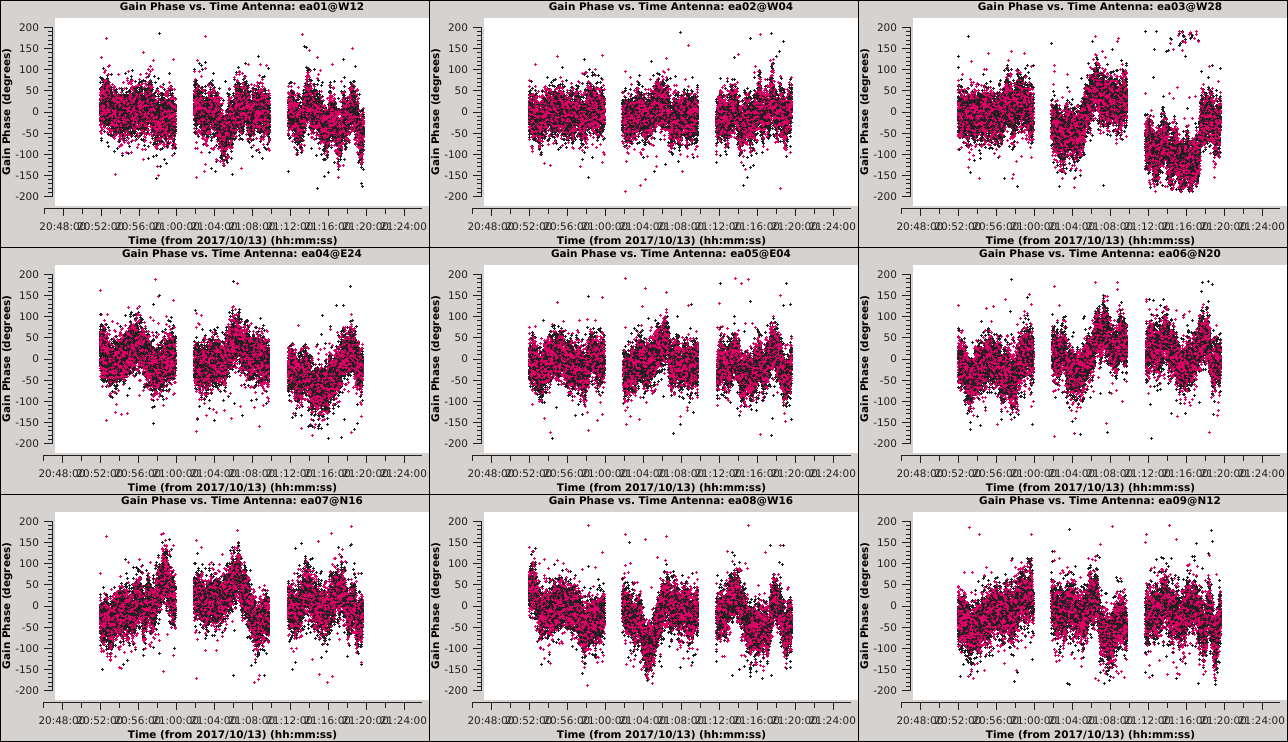

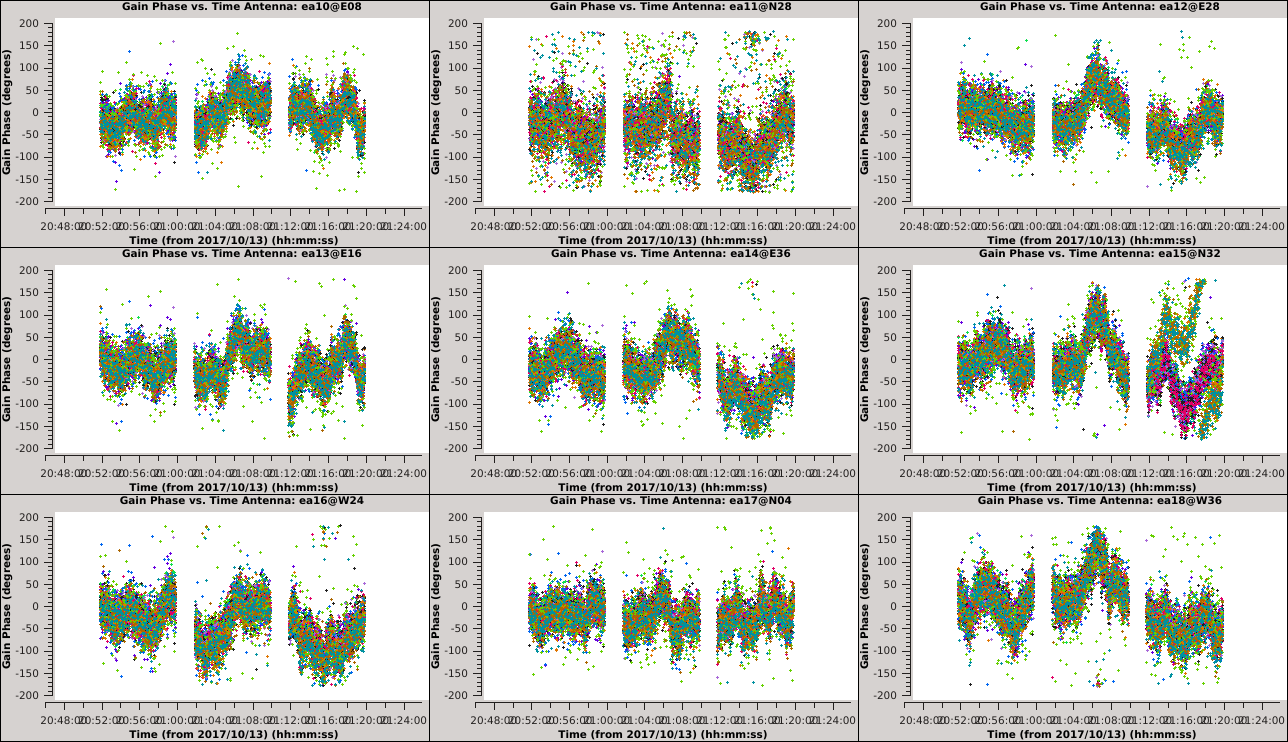

=== Plotting the First Self-Calibration Table ===

To view these solutions, we use {{plotms_6.5.4}}.

[[Image:Selfcal_initial_plotms1.png|300px|thumb|right|Figure 6: The phase solutions vs. time for the first 9 antennas, colored by polarization.]]

<source lang="python">
# in CASA
plotms(vis='selfcal_initial.tb', xaxis='time', yaxis='phase', iteraxis='antenna', gridrows=3, gridcols=3, coloraxis='corr')
</source>

*''xaxis='time' & yaxis='phase' '': View the phase variations over time with respect to antenna 24.

| Line 405: | Line 412: | ||

''' ''It is apparent from these plots that we can combine polarizations to improve the solution signal-to-noise ratio, since we observed that the solutions for the two polarizations were very similar. '' '''
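One way to quantify "very similar" is the RMS of the wrapped phase difference between the two polarizations' solutions. The sketch below assumes the R and L phase solutions for one antenna are already available as matched lists in degrees. Extracting them from the calibration table is not shown. The helper names and phase values are hypothetical.

```python
import math


def wrap_phase_deg(delta_deg: float) -> float:
    """Wrap a phase difference into the range [-180, 180) degrees."""
    return (delta_deg + 180.0) % 360.0 - 180.0


def rms_phase_difference(phases_r_deg, phases_l_deg) -> float:
    """RMS of the wrapped R minus L phase difference over matched solution times."""
    diffs = [wrap_phase_deg(r - l) for r, l in zip(phases_r_deg, phases_l_deg)]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical solutions: nearly identical, apart from a wrap across +/-180 degrees.
r_phases = [10.0, 45.0, 179.0, -120.0]
l_phases = [12.0, 43.0, -179.0, -118.0]
print(round(rms_phase_difference(r_phases, l_phases), 2))  # 2.0
```

Wrapping matters: without it, the 179° versus -179° pair would look like a 358° disagreement instead of 2°.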

The next thing we want to understand is whether we can combine SPWs, and if so, which ones. We can plot the previous solutions in a slightly different way to help answer this question. We will view these solutions again using {{plotms_6.5.4}}, but this time we will color the solutions by SPW.

[[Image:Selfcal_initial_plotms2.png|300px|thumb|right|Figure 7: The phase solutions vs. time, colored by spectral window, second iteration.]]

<source lang="python">
# in CASA
plotms(vis='selfcal_initial.tb', xaxis='time', yaxis='phase', iteraxis='antenna', gridrows=3, gridcols=3, coloraxis='spw')
</source>

<!--
*''xaxis='time' & yaxis='phase' '': View the phase variations over time with respect to antenna 24.
*''iteraxis='antenna' '': Create separate plots of the corrections for each antenna.
*''gridrows=3 & gridcols=3'': It can be helpful to view multiple plots at once, as we will be stepping through several plots. In this case, 9 plots per page.
*''coloraxis='spw' '': To use different colors when plotting different SPWs.
-->

Iterate again through these plots using the 'Next Iteration' button (green triangle) to inspect the solutions for all antennas. When you get to ea15, it should be clear that the solutions are not the same for all SPWs. This is also true, but less obvious, for ea27, because only a limited number of colors are available in {{plotms_6.5.4}}.

We can inspect this further in the following plot:

[[Image:Selfcal_initial_plotms3.png|300px|thumb|right|Figure 8: The phase solutions vs. time for antenna ea15, colored by scan, second iteration.]]

<source lang="python">
# in CASA
plotms(vis='selfcal_initial.tb', xaxis='time', yaxis='phase', antenna='ea15', iteraxis='spw', gridrows=3, gridcols=3, coloraxis='scan')
</source>

<!-- *''xaxis='time' & yaxis='phase' '': View the phase variations over time relative to the reference antenna, ea24. -->

*''antenna='ea15' '': Select only antenna ea15.

*''iteraxis='spw' '': Create separate plots of the corrections for each SPW.

<!-- *''gridrows=3 & gridcols=3'': View multiple plots at once. In this case, 9 plots per page. -->

*''coloraxis='scan' '': Use a different color for each scan.

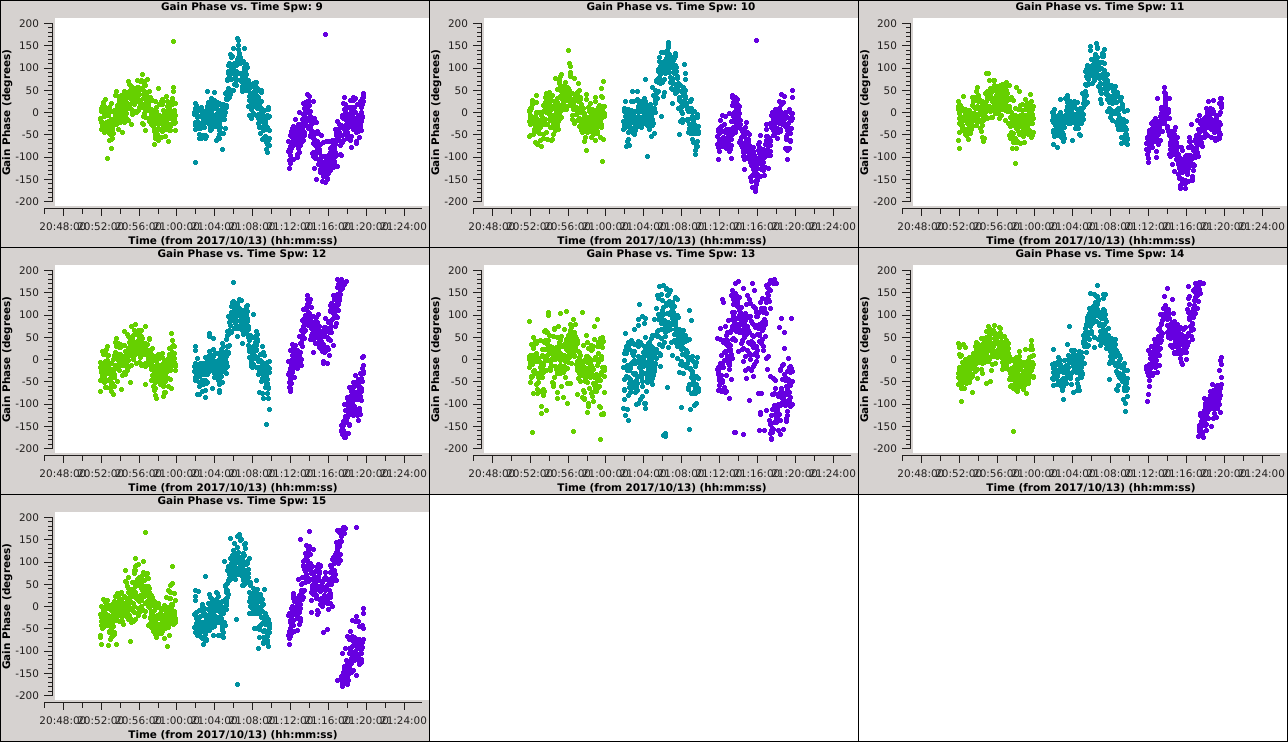

The first set of 9 plots (SPWs 0~8) should show a similar pattern. On the next iteration, this pattern continues for SPWs 9~11, but then changes for SPWs 12~15. The signal-to-noise for SPW 13 is also noticeably lower. If we create these plots for ea27, we see similar behavior, except that the pattern is constant over SPWs 0~5 and then changes to a new pattern that is constant over SPWs 6~15. For both ea15 and ea27, the change happens only in the third of the three scans.

''' ''Unfortunately, since not all SPWs show the same phase solutions, it will not be trivial to combine them to increase the signal-to-noise ratio of the solutions. Therefore, we will continue without combining SPWs.'' ''' <!--, but in Appendix ... we show a more complicated example of how to handle this situation. -->
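The visual check above can also be made quantitative. The sketch below is one possible approach, not the method used in this guide: for a single antenna and a single scan, it takes phase solutions (in degrees) per SPW, sampled at the same solution times, computes each SPW's circular-mean offset from a reference SPW, and lists the SPWs whose offset exceeds a tolerance. The helper names, the 20 degree tolerance, and the example data are hypothetical. Because the change in these data appears only in the third scan, such a check would need to be run scan by scan.

<source lang="python">
# in CASA, or any Python session with numpy
import numpy as np

def spw_offsets_deg(phases_by_spw, ref_spw):
    """Circular-mean phase offset (deg) of each SPW relative to ref_spw.

    phases_by_spw maps SPW id -> array of phase solutions (deg) sampled at
    the same solution times for one antenna and one scan.
    """
    ref = np.radians(np.asarray(phases_by_spw[ref_spw], dtype=float))
    offsets = {}
    for spw, ph in phases_by_spw.items():
        d = np.radians(np.asarray(ph, dtype=float)) - ref
        # Average unit phasors so that wrapping at +/-180 deg is handled
        offsets[spw] = float(np.degrees(np.angle(np.mean(np.exp(1j * d)))))
    return offsets

def inconsistent_spws(phases_by_spw, ref_spw, tol_deg=20.0):
    """SPWs whose offset from ref_spw exceeds tol_deg (hypothetical threshold)."""
    offs = spw_offsets_deg(phases_by_spw, ref_spw)
    return sorted(spw for spw, off in offs.items() if abs(off) > tol_deg)

# Illustrative data: SPWs 0-2 agree, SPW 3 has jumped by 90 deg
t = np.linspace(0, 1, 5)
base = 40.0 * np.sin(2 * np.pi * t)
phases = {0: base, 1: base + 3.0, 2: base - 2.0, 3: base + 90.0}
print(inconsistent_spws(phases, ref_spw=0))
</source>

If every SPW falls within the tolerance in every scan, combining SPWs would be worth considering; here the differences between SPW groups argue against it.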


=== Examples of Various Solution Intervals ===

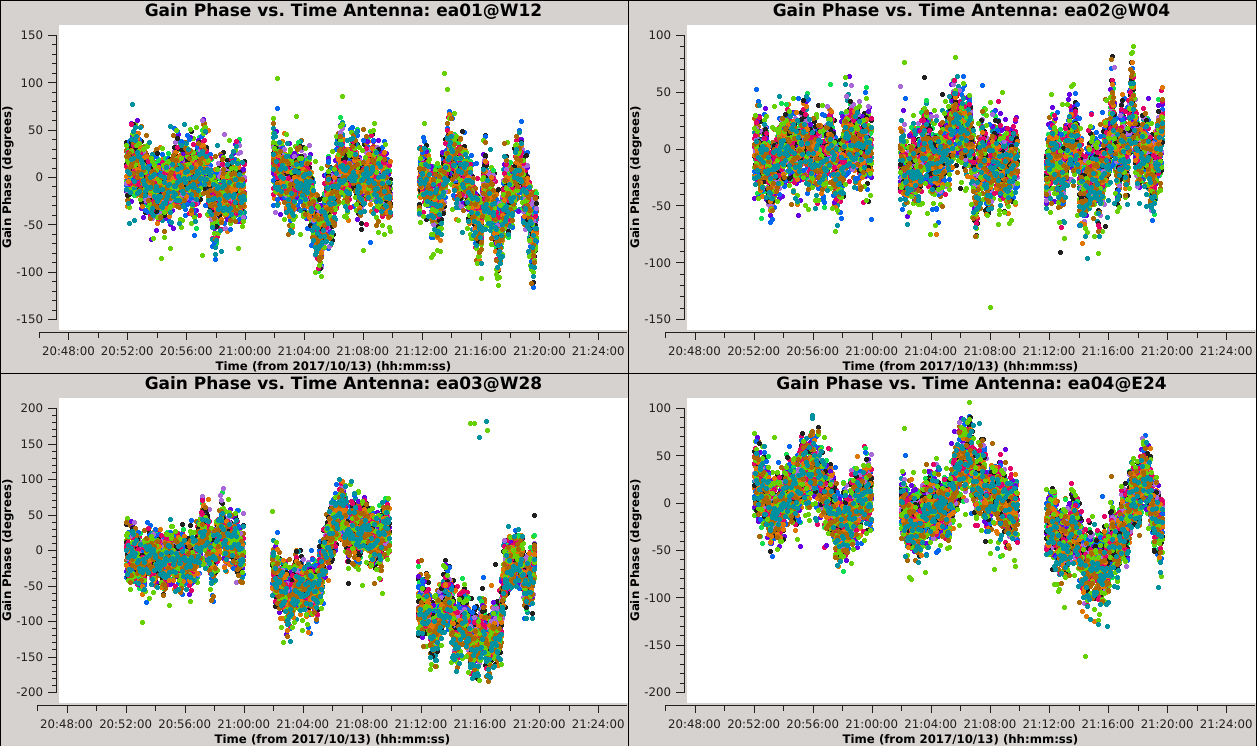

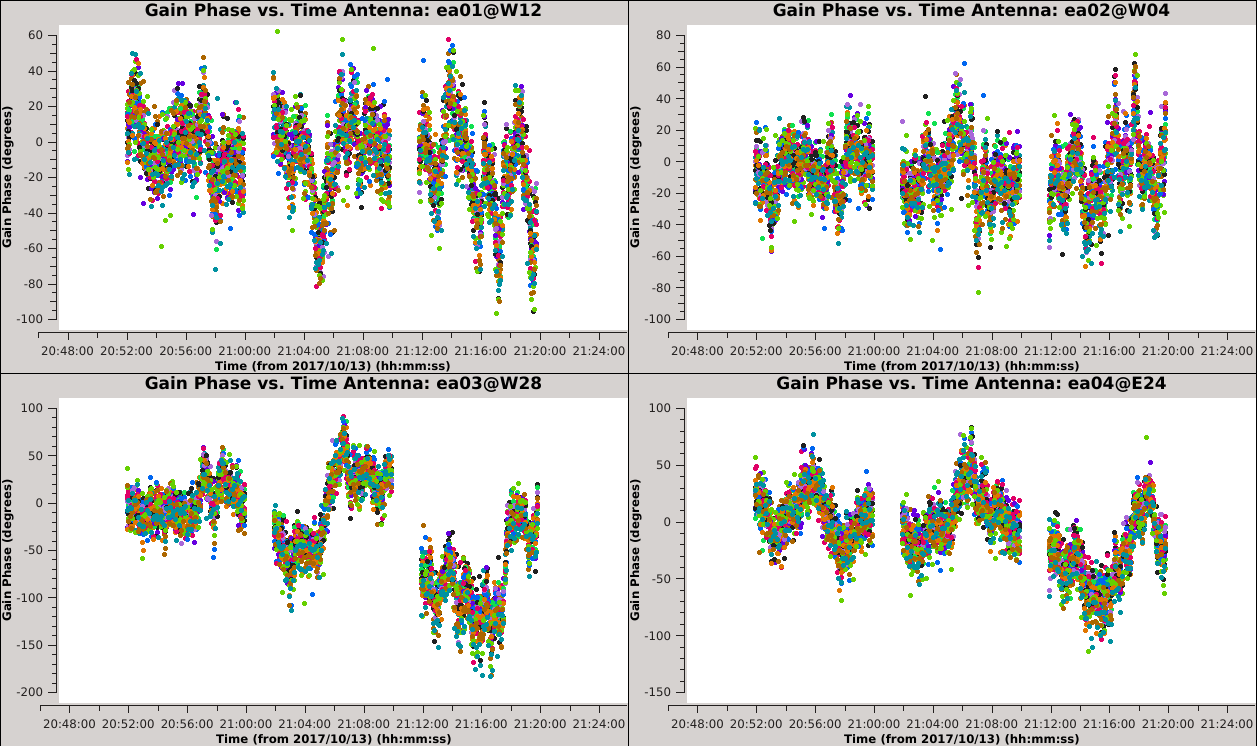

Now that we have decided how to handle SPW combination, we will move on to consider time averaging. The previous solutions display large, coherent phase changes, but also significant scatter due to low signal-to-noise. We could increase the signal-to-noise by a factor of √2 for each doubling of the solution interval, but it does not make sense to average over timescales longer than the characteristic time over which the phase remains constant (approximately 20 seconds for these data). In this section, we will demonstrate these effects by creating and plotting tables over a range of solution intervals. We will also combine both polarizations (with gaintype='T') to improve the solution signal-to-noise ratio, since the two polarizations measure approximately the same phase changes.
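The √2 gain per doubling follows from averaging independent noise: a solution built from N integrations has roughly √N times the SNR of a single integration, provided the phase stays constant over the interval. The sketch below illustrates that ideal scaling for the doubling sequence of intervals used in this section (3 s is the integration time of these data). The helper name and the per-integration SNR of 2.0 are hypothetical; real solutions will fall short of this ideal once the interval exceeds the phase coherence time. The same ideal √2 factor applies when the two polarizations are combined.

<source lang="python">
# in CASA, or any Python session with numpy
import numpy as np

def expected_snr(snr_per_integration, solint_s, tint_s=3.0):
    """Ideal solution SNR when averaging solint_s / tint_s integrations.

    Assumes independent Gaussian noise and a phase that stays constant over
    the solution interval, so the SNR grows as sqrt(N).
    """
    n_int = solint_s / tint_s
    return snr_per_integration * np.sqrt(n_int)

solints = [3 * 2**k for k in range(6)]   # 3, 6, 12, 24, 48, 96 s
for s in solints:
    # hypothetical per-integration SNR of 2.0
    print(s, round(float(expected_snr(2.0, s)), 2))
</source>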

These commands will create 6 new tables with solution intervals of 3, 6, 12, 24, 48, and 96 seconds (1, 2, 4, 8, 16, and 32 times the data's integration time). The commands can all be entered together and will take a while to run.

<source lang="python"> | <source lang="python"> | ||

# in CASA | # in CASA | ||

| Line 449: | Line 458: | ||

</source> | </source> | ||

These commands will plot each of the newly created tables. Run the commands sequentially (or simply change the selected table in Data > Browse, then click Plot to update) and use the {{plotms_6.5.4}} GUI to iterate through the plots of additional antennas.

<source lang="python"> | <source lang="python"> | ||

# in CASA | # in CASA | ||

| Line 463: | Line 472: | ||

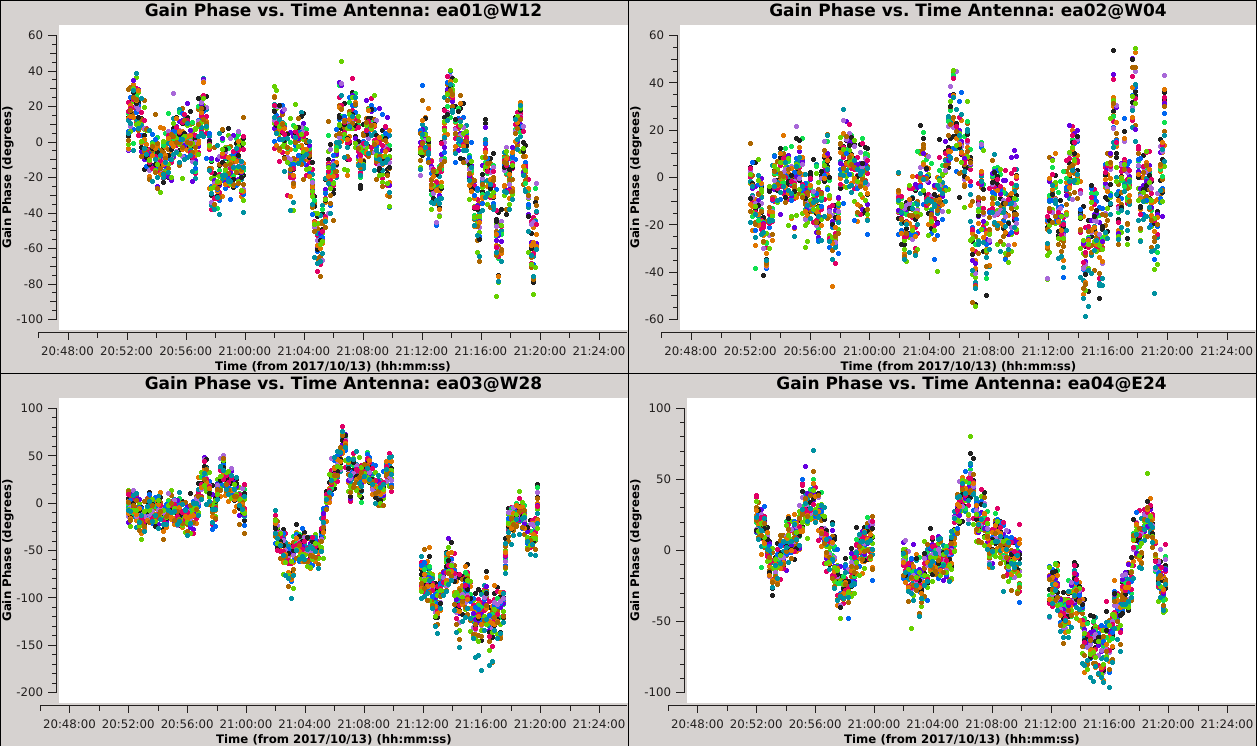

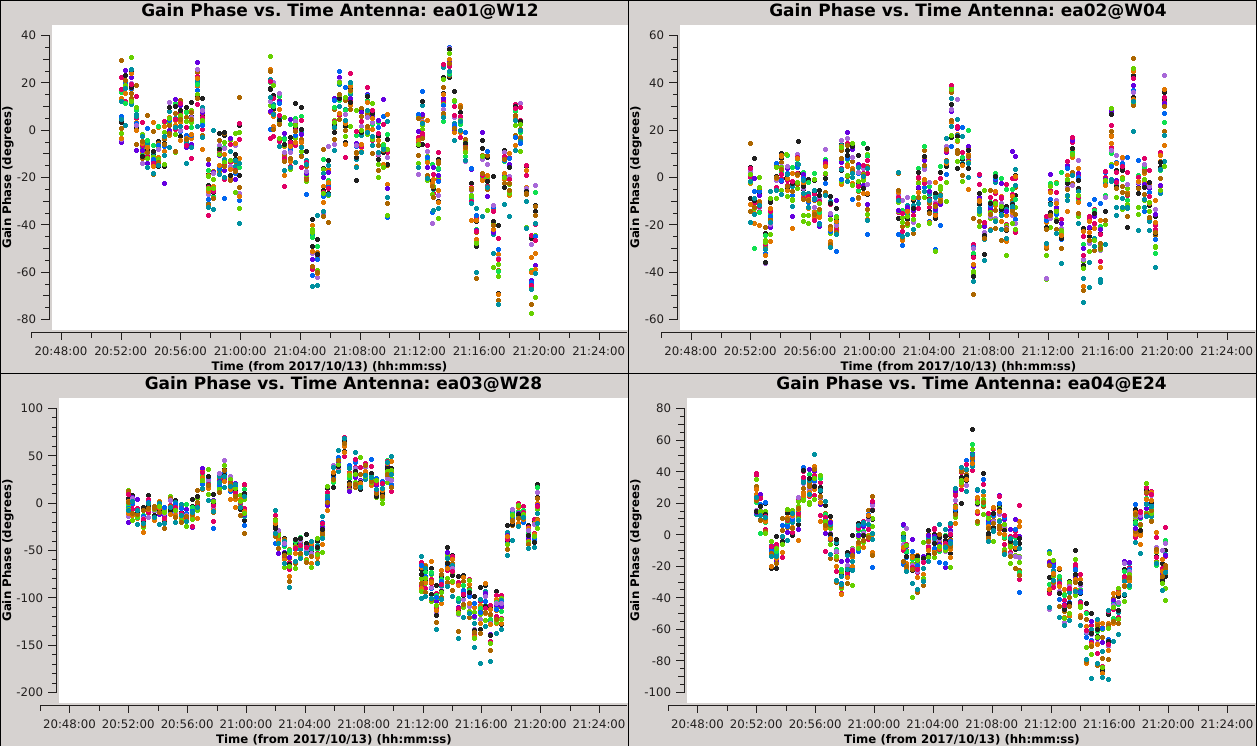

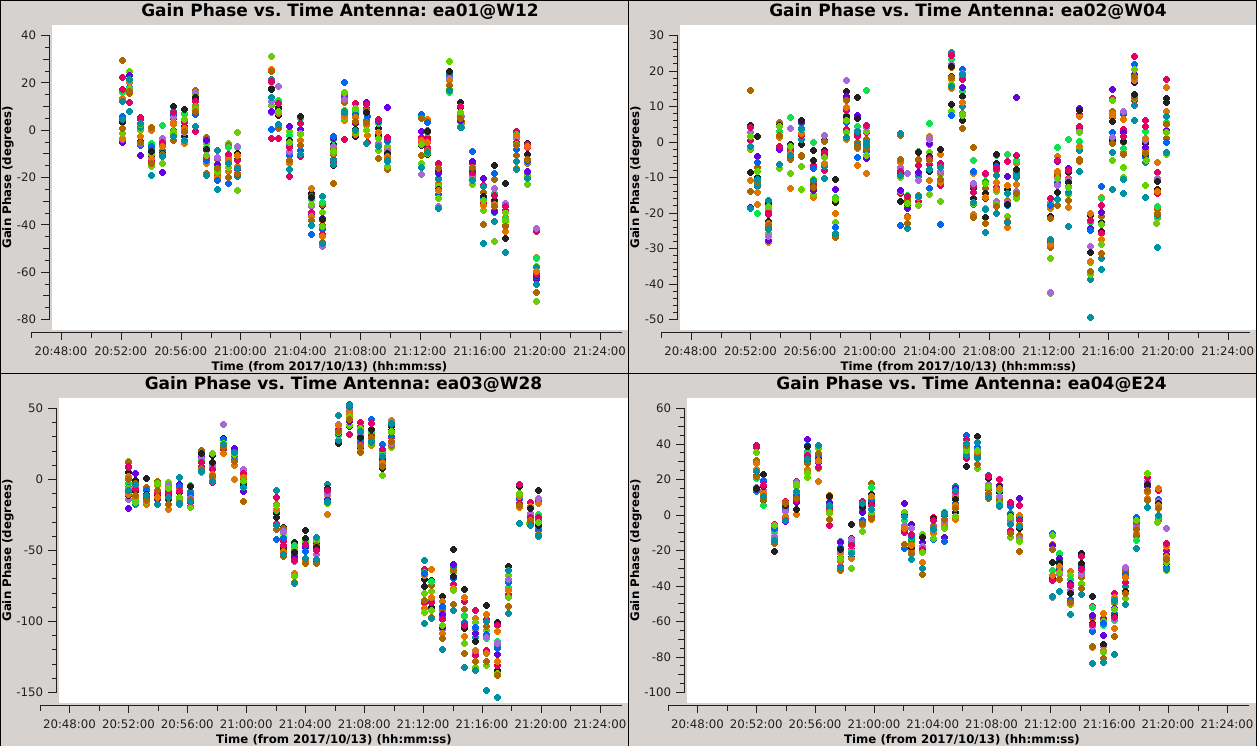

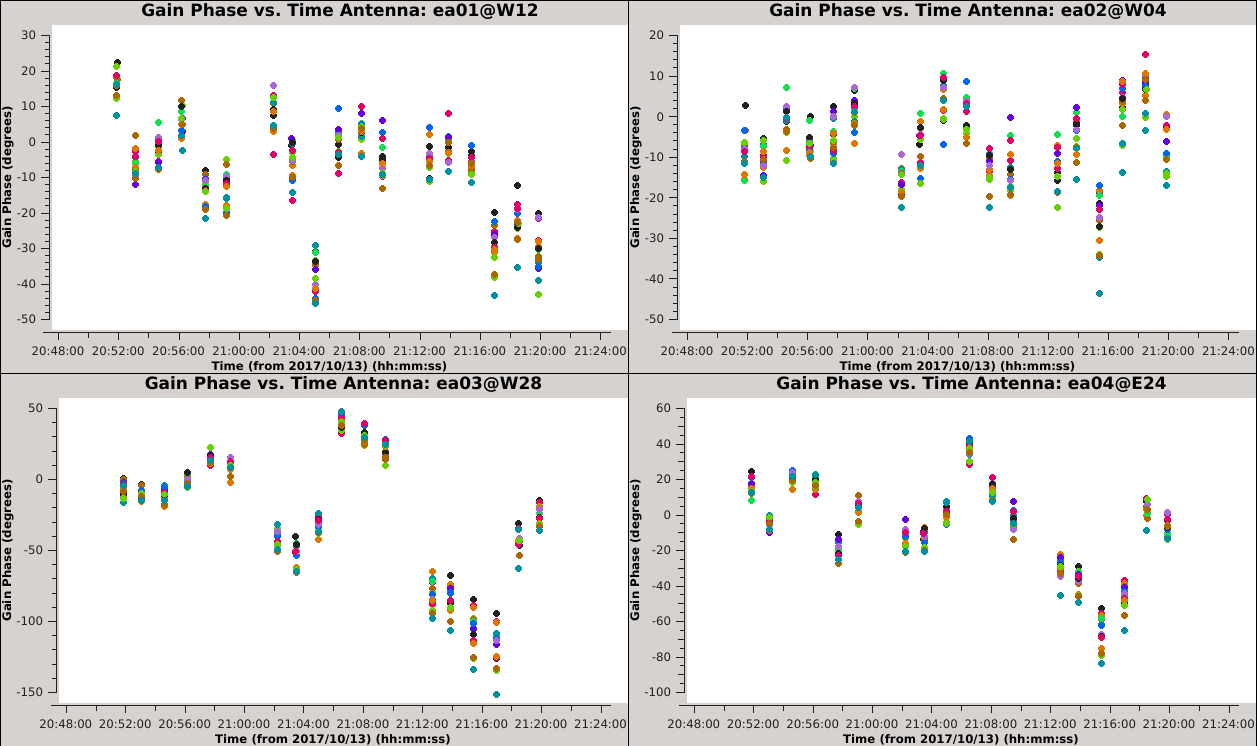

{|
|- valign="top" ! scope="row" |
|| [[Image:Selfcal_solint_3s.png|300px|thumb|right|Figure 9A: The phase solutions vs. time of the solint=3s table, first four antennas, colored by SPW.]]
|| [[Image:Selfcal_solint_6s.png|300px|thumb|right|Figure 9B: The phase solutions vs. time of the solint=6s table, first four antennas, colored by SPW.]]
|| [[Image:Selfcal_solint_12s.png|300px|thumb|right|Figure 9C: The phase solutions vs. time of the solint=12s table, first four antennas, colored by SPW.]]
|-
|| [[Image:Selfcal_solint_24s.png|300px|thumb|right|Figure 9D: The phase solutions vs. time of the solint=24s table, first four antennas, colored by SPW.]]
|| [[Image:Selfcal_solint_48s.png|300px|thumb|right|Figure 9E: The phase solutions vs. time of the solint=48s table, first four antennas, colored by SPW.]]
|| [[Image:Selfcal_solint_96s.png|300px|thumb|right|Figure 9F: The phase solutions vs. time of the solint=96s table, first four antennas, colored by SPW.]]
|}

=== Comparing the Solution Intervals ===

We can see from plotting these solutions that the shortest-timescale solutions capture the structure of the phase variations, but with a large dispersion. If we were to apply these low signal-to-noise ratio (SNR) solutions, we would, on average, be correcting for the large phase changes, but we would also introduce random phase errors that could reduce the sensitivity of our observation. Another concern with low-SNR solutions is that they can overfit the noise in the visibilities, leading to biases in the self-calibrated image. In this guide we adopt a conservative minimum SNR of 6 in order to guard against these biases.
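To get a feel for how large these random phase errors are, a common high-SNR approximation is that the 1-sigma phase error of a solution is about 1/SNR radians. This assumes SNR means amplitude divided by the noise in one component of the complex value, and it breaks down at SNR of order 1. The helper below is a hypothetical illustration of that approximation, not a CASA function. Under it, the adopted minimum SNR of 6 corresponds to phase errors of roughly 10 degrees per solution.

<source lang="python">
# in CASA, or any Python session with numpy
import numpy as np

def phase_noise_deg(snr):
    """Approximate 1-sigma phase error (deg) of a solution with the given SNR.

    High-SNR approximation: sigma_phi ~ 1/SNR radians.
    """
    return np.degrees(1.0 / np.asarray(snr, dtype=float))

for snr in (3.0, 6.0, 12.0):
    print(snr, round(float(phase_noise_deg(snr)), 1))
</source>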

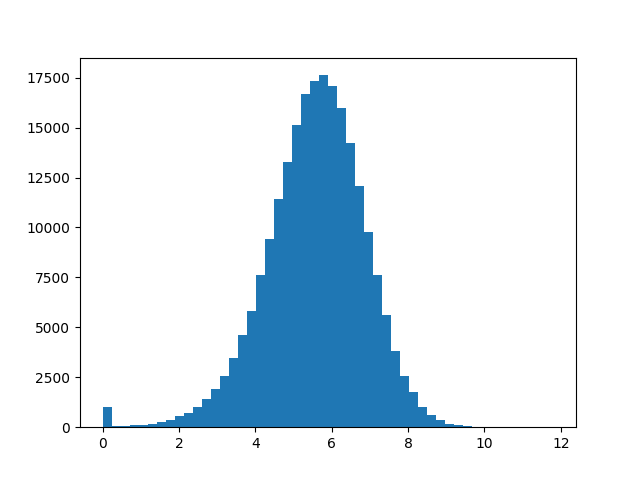

Let's take a closer look at the SNR of the table with the 3 second solution interval (equal to the integration time). We will use the table toolkit ('''tb''') to extract the SNR of each solution, which returns the SNR values as a '''numpy ndarray''' (the '''numpy ravel''' method then flattens the result into a 1-dimensional array). Then we use numpy and scipy to print some statistical quantities and matplotlib to make a histogram.

[[Image:Selfcal_3s_SNR_hist_620.png|300px|thumb|right|Figure 10: Distribution of signal-to-noise ratios for the selfcal table with solint = 3s.]]

<source lang="python"> | <source lang="python"> | ||

# in CASA | # in CASA | ||

| Line 494: | Line 503: | ||

</source> | </source> | ||

We can see from the output that the median SNR is about 5.6 for this table and that enforcing our desired minimum SNR of 6 would flag 62% of the solutions. We want to avoid flagging such a high fraction of solutions, so we need to consider longer solution intervals to raise the SNR.
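The two numbers quoted above (median SNR and fraction of solutions below the minimum) can be computed with a small numpy helper. This is a sketch, not the code used to produce the numbers in this guide. The function name is hypothetical, and the optional flag argument (to skip already-flagged solutions) is an assumption. In CASA the inputs could come from the table tool, as shown in the comment, assuming the 3 s table has the name used in the plotms example later in this section. The example array is synthetic.

<source lang="python">
# in CASA, or any Python session with numpy
import numpy as np

def snr_summary(snr, flag=None, minsnr=6.0):
    """Median SNR and fraction of solutions below minsnr.

    snr: array of SNR values (any shape; flattened here, like numpy.ravel).
    flag: optional boolean array of the same shape; True entries are ignored.
    """
    snr = np.ravel(np.asarray(snr, dtype=float))
    if flag is not None:
        snr = snr[~np.ravel(np.asarray(flag, dtype=bool))]
    median = float(np.median(snr))
    frac_below = float(np.mean(snr < minsnr))
    return median, frac_below

# In CASA the arrays could be read with the table tool, e.g.:
#   tb.open('selfcal_combine_pol_solint_3.tb')
#   snr = tb.getcol('SNR'); flag = tb.getcol('FLAG')
#   tb.close()
example = np.array([[2.0, 5.0, 7.0], [9.0, 4.0, 12.0]])   # synthetic values
print(snr_summary(example))
</source>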

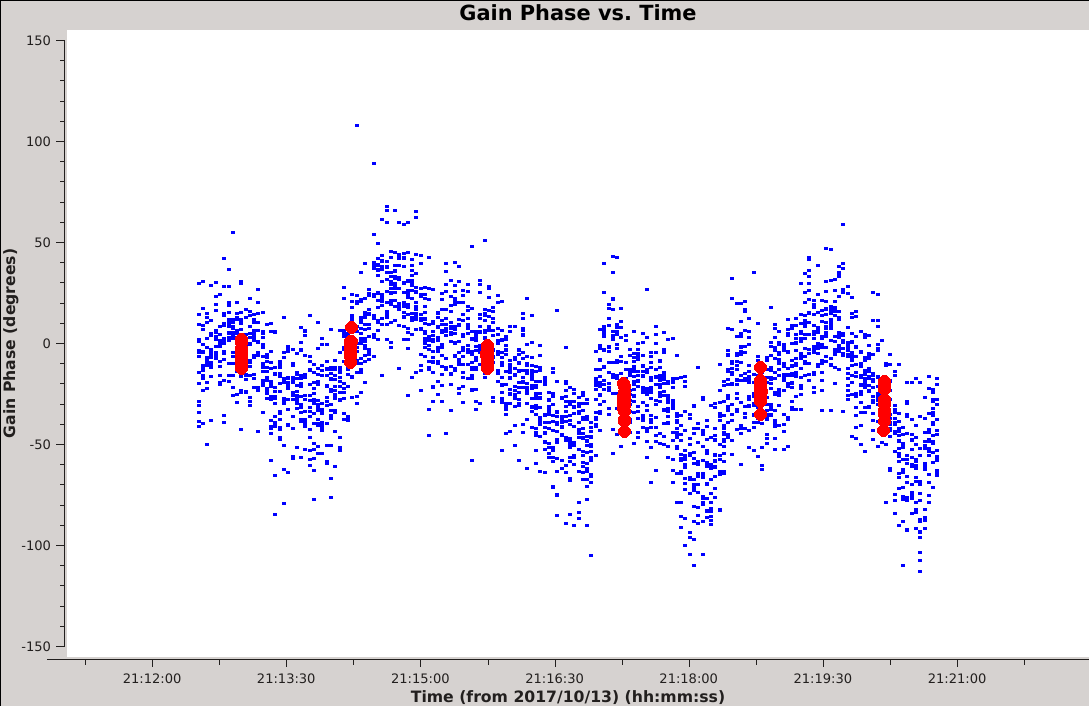

[[Image:Selfcal_compare_3s_96s.png|300px|thumb|right|Figure 11: The phase solutions vs. time of the solint=3s table (blue) and 96s table (red), for antenna ea01, scan 7, all SPWs.]]

The longest solution interval in our examples (96 seconds) has a different problem: the intrinsic phase varies faster than the solution interval, so the solutions no longer capture the changes that we are trying to correct for. Applying such long-timescale solutions may lead to some improvement in the image, but would leave residual phase errors in the corrected data. This is particularly obvious if we overplot the solutions using {{plotms_6.5.4}}. The figure to the right shows one such example, created with the following two commands:

<source lang="python">
# in CASA
plotms('selfcal_combine_pol_solint_3.tb', antenna='ea01', scan='7', yaxis='phase')
plotms('selfcal_combine_pol_solint_96.tb', antenna='ea01', scan='7', yaxis='phase', plotindex=1,
       clearplots=False, customsymbol=True, symbolsize=12, symbolcolor='ff0000', symbolshape='circle')
</source>
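The effect can also be reproduced with a toy model. The sketch below assumes a noiseless, hypothetical phase variation (a 60 degree sinusoid with a 120 s period, sampled every 3 s), vector-averages it over consecutive solution intervals, and reports the RMS phase error left after applying those binned solutions. The helpers are hypothetical and ignore noise, which is exactly what penalizes the short intervals in real data. With no noise, a 3 s interval removes the phase exactly, while a 96 s interval leaves a much larger residual than a 12 s one.

<source lang="python">
# in CASA, or any Python session with numpy
import numpy as np

def vector_avg_solutions(phase_deg, n_per_bin):
    """Per-bin phase solution (deg) from vector-averaging consecutive samples."""
    phase_deg = np.asarray(phase_deg, dtype=float)
    sol = np.empty_like(phase_deg)
    for start in range(0, len(phase_deg), n_per_bin):
        chunk = phase_deg[start:start + n_per_bin]
        mean_phasor = np.mean(np.exp(1j * np.radians(chunk)))
        sol[start:start + n_per_bin] = np.degrees(np.angle(mean_phasor))
    return sol

def residual_rms_deg(phase_deg, n_per_bin):
    """RMS phase error (deg) left after applying the binned solutions."""
    resid = np.asarray(phase_deg, dtype=float) - vector_avg_solutions(phase_deg, n_per_bin)
    resid = (resid + 180.0) % 360.0 - 180.0   # wrap into [-180, 180)
    return float(np.sqrt(np.mean(resid ** 2)))

# Hypothetical noiseless phase: 60 deg amplitude, 120 s period, 3 s integrations
t = np.arange(0.0, 600.0, 3.0)
phase = 60.0 * np.sin(2 * np.pi * t / 120.0)
for solint in (3, 12, 96):
    print(solint, round(residual_rms_deg(phase, solint // 3), 1))
</source>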

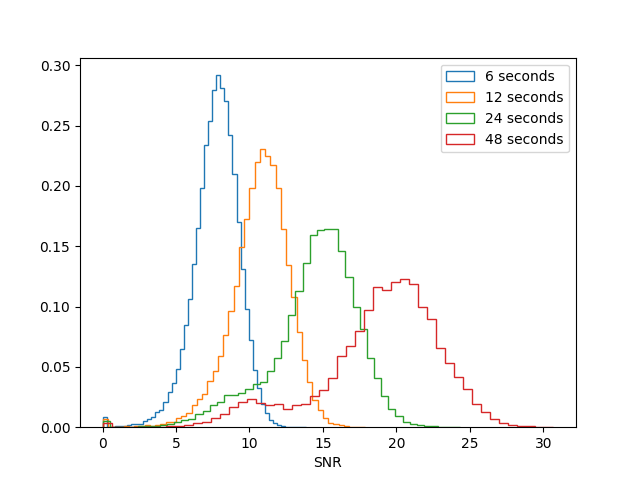

''' ''Given these considerations, we suggest that the optimal selfcal parameters use the shortest possible interval for which the signal-to-noise is also sufficient.'' ''' Having identified shortcomings with the shortest (3s) and longest (96s) solution intervals in our set of example tables, we will now take a closer look at the SNR of the intermediate tables. The following code will compare the SNR histograms and compute the fraction of solutions with an SNR below 6. You may need to close the previous SNR plot (Figure 10) for the new plot to open correctly.

[[Image:Selfcal_snr_comparison_620.png|300px|thumb|right|Figure 12: The SNR distribution for several example selfcal tables.]]

<source lang="python"> | <source lang="python"> | ||

# in CASA | # in CASA | ||

| Line 548: | Line 557: | ||

<source lang="python">
# in CASA
gaincal(vis='obj.ms', caltable='selfcal_combine_pol_solint_12_minsnr_6.tb', solint='12s', refant='ea24', calmode='p', gaintype='T', minsnr=6)
</source>
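The rule of thumb suggested earlier in this section (use the shortest solution interval whose signal-to-noise is sufficient) can be written as a small helper. This is a hypothetical sketch, not a CASA function. It takes SNR arrays keyed by solution interval in seconds, and the tolerated fraction of solutions below minsnr (max_flag_frac, 5% by default) is an assumption that you would set to your own preference; this guide does not prescribe a value. The example SNRs are synthetic.

<source lang="python">
# in CASA, or any Python session with numpy
import numpy as np

def choose_solint(snr_by_solint, minsnr=6.0, max_flag_frac=0.05):
    """Shortest solution interval whose fraction of solutions below minsnr
    does not exceed max_flag_frac; None if no interval qualifies.

    snr_by_solint maps solint (seconds) -> array of solution SNRs.
    """
    for solint in sorted(snr_by_solint):
        snr = np.ravel(np.asarray(snr_by_solint[solint], dtype=float))
        if np.mean(snr < minsnr) <= max_flag_frac:
            return solint
    return None

# Synthetic SNRs only; in practice these would come from each table's SNR column
snrs = {3: [2.0, 4.0, 5.0, 8.0],
        6: [3.0, 6.0, 7.0, 9.0],
        12: [6.5, 7.0, 9.0, 11.0],
        24: [9.0, 10.0, 12.0, 15.0]}
print(choose_solint(snrs))
print(choose_solint(snrs, max_flag_frac=0.25))
</source>

The returned interval is only a starting point; it should still be checked against the phase coherence time seen in the plots above.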